At 10.5 and later releases, ArcGIS Enterprise introduces ArcGIS GeoAnalytics Server which provides you the ability to perform big data analysis on your infrastructure. This sample demonstrates the steps involved in performing an aggregation analysis on New York city taxi point data using ArcGIS API for Python.

The data used in this sample can be downloaded from NYC Taxi & Limousine Commission website. For this sample, data for the months January & Febuary of 2015 were used, each averaging 12 million records.

Note: The ability to perform big data analysis is only available on ArcGIS Enterprise 10.5 licensed with a GeoAnalytics server and not yet available on ArcGIS Online.

The NYC taxi data

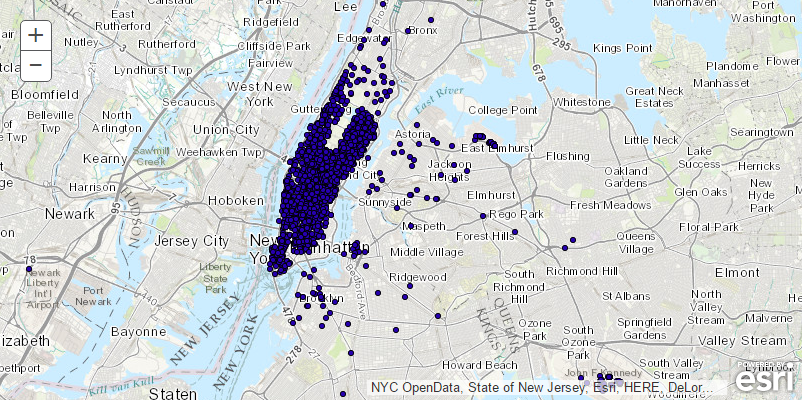

To give you an overview, let us take a look at a subset with 2000 points published as a feature service.

import arcgis

from arcgis.gis import GIS

ago_gis = GIS() # Connect to ArcGIS Online as an anonymous user

search_subset = ago_gis.content.search("NYC_taxi_subset", item_type = "Feature Layer")

subset_item = search_subset[0]

subset_itemLet us bring up a map to display the data.

subset_map = ago_gis.map("New York, NY", zoomlevel=11)

subset_map

subset_map.add_layer(subset_item)Let us access the feature layers using the layers property. We can select a specific layer from the laters list and explore its attribute table to understand the structure of our data. In the cell below, we use the feature layer's query() method to return the layer attribute information. The query() method returns a FeatureSet object, which is a collection of individual Feature objects.

You can mine through the FeatureSet to inspect each individual Feature, read its attribute information and then compose a table of all features and their attributes. However, the FeatureSet object provides a much easier and more direct way to get that information. Using the df property of a FeatureSet, you can load the attribute information as a pandas dataframe object.

If you installed the ArcGIS API for Python through ArcGIS Pro or with the conda install command, you have the api and its dependencies, including the pandas package. The df property will return a dataframe. If you installed without dependences, you need to install the pandas Python package for the df property to return a dataframe. If you get an error that pandas cannot be found, you can install it by typing the following in your terminal that is running the jupyter notebook:

conda install pandas

subset_feature_layer = subset_item.layers[0]

# query the attribute information. Limit to first 5 rows.

query_result = subset_feature_layer.query(where = 'OBJECTID < 5',

out_fields = "*",

returnGeometry = False)

att_data_frame = query_result.sdf # get as a Pandas dataframe

att_data_frame| Field1 | OBJECTID | RateCodeID | VendorID | dropoff_latitude | dropoff_longitude | extra | fare_amount | improvement_surcharge | mta_tax | ... | payment_type | pickup_latitude | pickup_longitude | store_and_fwd_flag | tip_amount | tolls_amount | total_amount | tpep_dropoff_datetime | tpep_pickup_datetime | trip_distance | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 3479320 | 1 | 1 | 2 | 40.782318 | -73.980492 | 0.0 | 9.5 | 0.3 | 0.5 | ... | 1 | 40.778149 | -73.956291 | N | 2.1 | 0 | 12.4 | 1970-01-01 00:23:42.268943 | 1970-01-01 00:23:42.268218 | 1.76 |

| 1 | 8473342 | 2 | 1 | 2 | 40.769756 | -73.950600 | 0.5 | 13.5 | 0.3 | 0.5 | ... | 2 | 40.729458 | -73.983864 | N | 0.0 | 0 | 14.8 | 1970-01-01 00:23:42.137577 | 1970-01-01 00:23:42.136892 | 3.73 |

| 2 | 10864374 | 3 | 1 | 2 | 40.753040 | -73.985680 | 0.0 | 14.5 | 0.3 | 0.5 | ... | 2 | 40.743740 | -73.987617 | N | 0.0 | 0 | 15.3 | 1970-01-01 00:23:42.719906 | 1970-01-01 00:23:42.718711 | 2.84 |

| 3 | 7350094 | 4 | 1 | 2 | 40.765743 | -73.954994 | 0.0 | 11.5 | 0.3 | 0.5 | ... | 2 | 40.757507 | -73.981682 | N | 0.0 | 0 | 12.3 | 1970-01-01 00:23:40.907558 | 1970-01-01 00:23:40.906601 | 2.18 |

4 rows × 21 columns

The table above represents the attribute information available from the NYC dataset. Columns provide a wealth of infomation such as pickup and dropoff_locations, fares, tips, tolls, and trip distances which you can analyze to observe many interesting patterns. The full data dataset contains over 24 million points. To discern patterns out of it, let us aggregate the points into blocks of 1 square kilometer.

Searching for big data file shares

To process data using GeoAnalytics Server, you need to have registered the data with your Geoanalytics Server. In this sample the data is in multiple csv files, which have been previously registered as a big data file share.

Let us connect to an ArcGIS Enterprise.

gis = GIS('https://pythonapi.playground.esri.com/portal', 'arcgis_python', 'amazing_arcgis_123')Ensure that the Geoanalytics is supported with our GIS.

arcgis.geoanalytics.is_supported()True

Get the geoanalytics datastores and search it for the registered datasets:

datastores = arcgis.geoanalytics.get_datastores()bigdata_fileshares = datastores.search(id='0e7a861d-c1c5-4acc-869d-05d2cebbdbee')

bigdata_fileshares[<Datastore title:"/bigDataFileShares/GA_Data" type:"bigDataFileShare">]

GA_Data is registered as a big data file share with the Geoanalytics datastore, so we can reference it:

data_item = bigdata_fileshares[0]Registering big data file shares

The code below shows how a big data file share can be registered with the geoanalytics datastores, in case it's not already registered.

# data_item = datastores.add_bigdata("NYCdata", r"\\pathway\to\data")Big Data file share exists for NYCdata

Once a big data file share is created, the GeoAnalytics server processes all the valid file types to discern the schema of the data. This process can take a few minutes depending on the size of your data. Once processed, querying the manifest property returns the schema. As you can see from below, the schema is similar to the subset we observed earlier in this sample.

data_item.manifest{'datasets': [{'name': 'air_quality',

'format': {'quoteChar': '"',

'fieldDelimiter': ',',

'hasHeaderRow': True,

'encoding': 'UTF-8',

'escapeChar': '"',

'recordTerminator': '\n',

'type': 'delimited',

'extension': 'csv'},

'schema': {'fields': [{'name': 'State Code',

'type': 'esriFieldTypeBigInteger'},

{'name': 'County Code', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Site Num', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Parameter Code', 'type': 'esriFieldTypeBigInteger'},

{'name': 'POC', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Latitude', 'type': 'esriFieldTypeDouble'},

{'name': 'Longitude', 'type': 'esriFieldTypeDouble'},

{'name': 'Datum', 'type': 'esriFieldTypeString'},

{'name': 'Parameter Name', 'type': 'esriFieldTypeString'},

{'name': 'Date Local', 'type': 'esriFieldTypeString'},

{'name': 'Time Local', 'type': 'esriFieldTypeString'},

{'name': 'Date GMT', 'type': 'esriFieldTypeString'},

{'name': 'Time GMT', 'type': 'esriFieldTypeString'},

{'name': 'Sample Measurement', 'type': 'esriFieldTypeDouble'},

{'name': 'Units of Measure', 'type': 'esriFieldTypeString'},

{'name': 'MDL', 'type': 'esriFieldTypeDouble'},

{'name': 'Uncertainty', 'type': 'esriFieldTypeString'},

{'name': 'Qualifier', 'type': 'esriFieldTypeString'},

{'name': 'Method Type', 'type': 'esriFieldTypeString'},

{'name': 'Method Code', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Method Name', 'type': 'esriFieldTypeString'},

{'name': 'State Name', 'type': 'esriFieldTypeString'},

{'name': 'County Name', 'type': 'esriFieldTypeString'},

{'name': 'Date of Last Change', 'type': 'esriFieldTypeString'}]},

'geometry': {'geometryType': 'esriGeometryPoint',

'spatialReference': {'wkid': 4326},

'fields': [{'name': 'Longitude', 'formats': ['x']},

{'name': 'Latitude', 'formats': ['y']}]},

'time': {'timeType': 'instant',

'timeReference': {'timeZone': 'UTC'},

'fields': [{'name': 'Date Local', 'formats': ['yyyy-MM-dd']}]}},

{'name': 'crime',

'format': {'quoteChar': '"',

'fieldDelimiter': ',',

'hasHeaderRow': True,

'encoding': 'UTF-8',

'escapeChar': '"',

'recordTerminator': '\n',

'type': 'delimited',

'extension': 'csv'},

'schema': {'fields': [{'name': 'ID', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Case Number', 'type': 'esriFieldTypeString'},

{'name': 'Date', 'type': 'esriFieldTypeString'},

{'name': 'Block', 'type': 'esriFieldTypeString'},

{'name': 'IUCR', 'type': 'esriFieldTypeString'},

{'name': 'Primary Type', 'type': 'esriFieldTypeString'},

{'name': 'Description', 'type': 'esriFieldTypeString'},

{'name': 'Location Description', 'type': 'esriFieldTypeString'},

{'name': 'Arrest', 'type': 'esriFieldTypeString'},

{'name': 'Domestic', 'type': 'esriFieldTypeString'},

{'name': 'Beat', 'type': 'esriFieldTypeBigInteger'},

{'name': 'District', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Ward', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Community Area', 'type': 'esriFieldTypeBigInteger'},

{'name': 'FBI Code', 'type': 'esriFieldTypeString'},

{'name': 'X Coordinate', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Y Coordinate', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Year', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Updated On', 'type': 'esriFieldTypeString'},

{'name': 'Latitude', 'type': 'esriFieldTypeDouble'},

{'name': 'Longitude', 'type': 'esriFieldTypeDouble'},

{'name': 'Location', 'type': 'esriFieldTypeString'}]},

'geometry': {'geometryType': 'esriGeometryPoint',

'spatialReference': {'wkid': 4326},

'fields': [{'name': 'Location', 'formats': ['({y},{x})']}]},

'time': {'timeType': 'instant',

'timeReference': {'timeZone': 'UTC'},

'fields': [{'name': 'Date', 'formats': ['MM/dd/yyyy hh:mm:ss a']}]}},

{'name': 'calls',

'format': {'quoteChar': '"',

'fieldDelimiter': ',',

'hasHeaderRow': True,

'encoding': 'UTF-8',

'escapeChar': '"',

'recordTerminator': '\n',

'type': 'delimited',

'extension': 'csv'},

'schema': {'fields': [{'name': 'NOPD_Item', 'type': 'esriFieldTypeString'},

{'name': 'Type_', 'type': 'esriFieldTypeString'},

{'name': 'TypeText', 'type': 'esriFieldTypeString'},

{'name': 'Priority', 'type': 'esriFieldTypeString'},

{'name': 'MapX', 'type': 'esriFieldTypeDouble'},

{'name': 'MapY', 'type': 'esriFieldTypeDouble'},

{'name': 'TimeCreate', 'type': 'esriFieldTypeString'},

{'name': 'TimeDispatch', 'type': 'esriFieldTypeString'},

{'name': 'TimeArrive', 'type': 'esriFieldTypeString'},

{'name': 'TimeClosed', 'type': 'esriFieldTypeString'},

{'name': 'Disposition', 'type': 'esriFieldTypeString'},

{'name': 'DispositionText', 'type': 'esriFieldTypeString'},

{'name': 'BLOCK_ADDRESS', 'type': 'esriFieldTypeString'},

{'name': 'Zip', 'type': 'esriFieldTypeBigInteger'},

{'name': 'PoliceDistrict', 'type': 'esriFieldTypeBigInteger'},

{'name': 'Location', 'type': 'esriFieldTypeString'}]},

'time': {'timeType': 'instant',

'timeReference': {'timeZone': 'UTC'},

'fields': [{'name': 'TimeDispatch', 'formats': ['epoch_millis']}]}},

{'name': 'analyze_new_york_city_taxi_data',

'format': {'quoteChar': '"',

'fieldDelimiter': ',',

'hasHeaderRow': True,

'encoding': 'UTF-8',

'escapeChar': '"',

'recordTerminator': '\n',

'type': 'delimited',

'extension': 'csv'},

'schema': {'fields': [{'name': 'VendorID',

'type': 'esriFieldTypeBigInteger'},

{'name': 'tpep_pickup_datetime', 'type': 'esriFieldTypeString'},

{'name': 'tpep_dropoff_datetime', 'type': 'esriFieldTypeString'},

{'name': 'passenger_count', 'type': 'esriFieldTypeBigInteger'},

{'name': 'trip_distance', 'type': 'esriFieldTypeDouble'},

{'name': 'pickup_longitude', 'type': 'esriFieldTypeDouble'},

{'name': 'pickup_latitude', 'type': 'esriFieldTypeDouble'},

{'name': 'RateCodeID', 'type': 'esriFieldTypeBigInteger'},

{'name': 'store_and_fwd_flag', 'type': 'esriFieldTypeString'},

{'name': 'dropoff_longitude', 'type': 'esriFieldTypeDouble'},

{'name': 'dropoff_latitude', 'type': 'esriFieldTypeDouble'},

{'name': 'payment_type', 'type': 'esriFieldTypeBigInteger'},

{'name': 'fare_amount', 'type': 'esriFieldTypeDouble'},

{'name': 'extra', 'type': 'esriFieldTypeDouble'},

{'name': 'mta_tax', 'type': 'esriFieldTypeDouble'},

{'name': 'tip_amount', 'type': 'esriFieldTypeDouble'},

{'name': 'tolls_amount', 'type': 'esriFieldTypeDouble'},

{'name': 'improvement_surcharge', 'type': 'esriFieldTypeDouble'},

{'name': 'total_amount', 'type': 'esriFieldTypeDouble'}]},

'geometry': {'geometryType': 'esriGeometryPoint',

'spatialReference': {'wkid': 4326},

'fields': [{'name': 'pickup_longitude', 'formats': ['x']},

{'name': 'pickup_latitude', 'formats': ['y']}]},

'time': {'timeType': 'instant',

'timeReference': {'timeZone': 'UTC'},

'fields': [{'name': 'tpep_pickup_datetime',

'formats': ['yyyy-MM-dd HH:mm:ss']}]}}]}Since this big data file share has multiple datasets, let's check the manifest for the taxi dataset.

data_item.manifest['datasets'][3]{'name': 'analyze_new_york_city_taxi_data',

'format': {'quoteChar': '"',

'fieldDelimiter': ',',

'hasHeaderRow': True,

'encoding': 'UTF-8',

'escapeChar': '"',

'recordTerminator': '\n',

'type': 'delimited',

'extension': 'csv'},

'schema': {'fields': [{'name': 'VendorID', 'type': 'esriFieldTypeBigInteger'},

{'name': 'tpep_pickup_datetime', 'type': 'esriFieldTypeString'},

{'name': 'tpep_dropoff_datetime', 'type': 'esriFieldTypeString'},

{'name': 'passenger_count', 'type': 'esriFieldTypeBigInteger'},

{'name': 'trip_distance', 'type': 'esriFieldTypeDouble'},

{'name': 'pickup_longitude', 'type': 'esriFieldTypeDouble'},

{'name': 'pickup_latitude', 'type': 'esriFieldTypeDouble'},

{'name': 'RateCodeID', 'type': 'esriFieldTypeBigInteger'},

{'name': 'store_and_fwd_flag', 'type': 'esriFieldTypeString'},

{'name': 'dropoff_longitude', 'type': 'esriFieldTypeDouble'},

{'name': 'dropoff_latitude', 'type': 'esriFieldTypeDouble'},

{'name': 'payment_type', 'type': 'esriFieldTypeBigInteger'},

{'name': 'fare_amount', 'type': 'esriFieldTypeDouble'},

{'name': 'extra', 'type': 'esriFieldTypeDouble'},

{'name': 'mta_tax', 'type': 'esriFieldTypeDouble'},

{'name': 'tip_amount', 'type': 'esriFieldTypeDouble'},

{'name': 'tolls_amount', 'type': 'esriFieldTypeDouble'},

{'name': 'improvement_surcharge', 'type': 'esriFieldTypeDouble'},

{'name': 'total_amount', 'type': 'esriFieldTypeDouble'}]},

'geometry': {'geometryType': 'esriGeometryPoint',

'spatialReference': {'wkid': 4326},

'fields': [{'name': 'pickup_longitude', 'formats': ['x']},

{'name': 'pickup_latitude', 'formats': ['y']}]},

'time': {'timeType': 'instant',

'timeReference': {'timeZone': 'UTC'},

'fields': [{'name': 'tpep_pickup_datetime',

'formats': ['yyyy-MM-dd HH:mm:ss']}]}}Performing data aggregation

When you add a big data file share datastore, a corresponding item gets created on your portal. You can search for it like a regular item and query its layers.

search_result = gis.content.search("bigDataFileShares_GA_Data", item_type = "big data file share")

search_result[<Item title:"bigDataFileShares_GA_Data" type:Big Data File Share owner:arcgis_python>]

data_item = search_result[0]

data_itemdata_item.layers[<Layer url:"https://pythonapi.playground.esri.com/ga/rest/services/DataStoreCatalogs/bigDataFileShares_GA_Data/BigDataCatalogServer/air_quality">, <Layer url:"https://pythonapi.playground.esri.com/ga/rest/services/DataStoreCatalogs/bigDataFileShares_GA_Data/BigDataCatalogServer/crime">, <Layer url:"https://pythonapi.playground.esri.com/ga/rest/services/DataStoreCatalogs/bigDataFileShares_GA_Data/BigDataCatalogServer/calls">, <Layer url:"https://pythonapi.playground.esri.com/ga/rest/services/DataStoreCatalogs/bigDataFileShares_GA_Data/BigDataCatalogServer/analyze_new_york_city_taxi_data">]

year_2015 = data_item.layers[3]

year_2015<Layer url:"https://pythonapi.playground.esri.com/ga/rest/services/DataStoreCatalogs/bigDataFileShares_GA_Data/BigDataCatalogServer/analyze_new_york_city_taxi_data">

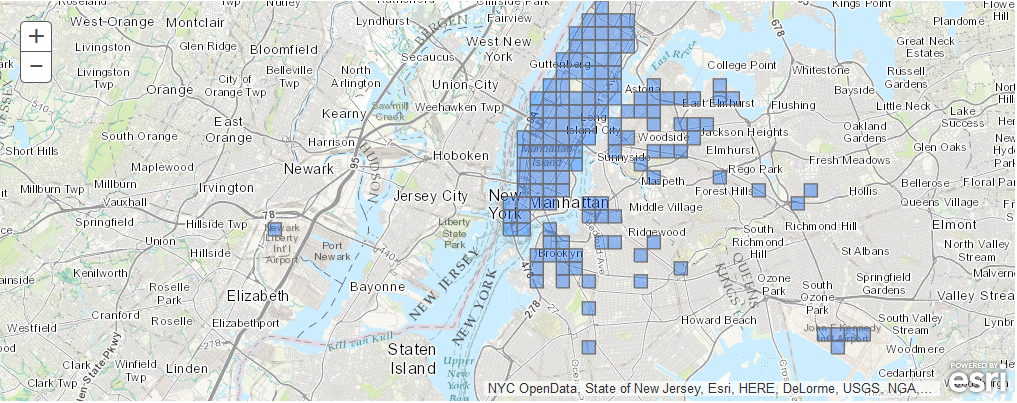

Aggregate points tool

You access the aggregate_points() tool in the summarize_data submodule of the geoanalytics module. In this example, we are using this tool to aggregate the numerous points into 1 kilometer square blocks. The tool creates a polygon feature layer in which each polygon contains aggregated attribute information from all the points in the input dataset that fall within that polygon. The output feature layer contains only polygons that contain at least one point from the input dataset. See Aggregate Points for details on using this tool.

from arcgis.geoanalytics.summarize_data import aggregate_pointsThe aggregate points tool requires that either:

- the point layer is projected, or

- the output or processing coordinate system is set to a Projected Coordinate System

We can query the layer properties to investigate the coordinate system of the point layer:

year_2015.properties['spatialReference']{'wkid': 4326}Since WGS84 (the coordinate system referred to by wkid 4326) is unprojected, we can use the arcgis.env module to set the environment used in the tool processing. The process_spatial_reference environment setting controls the geometry processing of tools used by the API for Python. We can set this parameter to a projected coordinate system for tool processing:

NOTE: Aggregate Points requires that your area layer is in a projected coordinate system. See the

Usage notessection of thehelpfor more information.

arcgis.env.process_spatial_reference=3857We can use the arcgis.env module to modify environment settings that geoprocessing and geoanalytics tools use during execution. Set verbose to True to return detailed messaging when running tools:

arcgis.env.verbose = TrueLet's run the tool, specifying 1 kilometer squares as the polygons for which we want to aggregate information about all the NYC taxi information in each of those polygons:

agg_result = aggregate_points(year_2015,

bin_type='square',

bin_size=1,

polygon_layer='',

bin_size_unit='Kilometers')Submitted.

Executing...

Executing (AggregatePoints): AggregatePoints "Feature Set" Square 1 Kilometers # # # # # # # "{"serviceProperties": {"name": "Aggregate_Points_Analysis_S22I46", "serviceUrl": "https://pythonapi.playground.esri.com/server/rest/services/Hosted/Aggregate_Points_Analysis_S22I46/FeatureServer"}, "itemProperties": {"itemId": "b3b25331e78d4da487da7e38e208655d"}}" "{"defaultAggregationStyles": false, "processSR": {"wkid": 3857}}"

Start Time: Thu May 27 04:31:18 2021

Using URL based GPRecordSet param: https://pythonapi.playground.esri.com/ga/rest/services/DataStoreCatalogs/bigDataFileShares_GA_Data/BigDataCatalogServer/analyze_new_york_city_taxi_data

{"messageCode":"BD_101033","message":"'pointLayer' will be projected into the processing spatial reference.","params":{"paramName":"pointLayer"}}

{"messageCode":"BD_101028","message":"Starting new distributed job with 280 tasks.","params":{"totalTasks":"280"}}

{"messageCode":"BD_101029","message":"0/280 distributed tasks completed.","params":{"completedTasks":"0","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"1/280 distributed tasks completed.","params":{"completedTasks":"1","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"13/280 distributed tasks completed.","params":{"completedTasks":"13","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"25/280 distributed tasks completed.","params":{"completedTasks":"25","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"33/280 distributed tasks completed.","params":{"completedTasks":"33","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"43/280 distributed tasks completed.","params":{"completedTasks":"43","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"53/280 distributed tasks completed.","params":{"completedTasks":"53","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"61/280 distributed tasks completed.","params":{"completedTasks":"61","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"71/280 distributed tasks completed.","params":{"completedTasks":"71","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"79/280 distributed tasks completed.","params":{"completedTasks":"79","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"90/280 distributed tasks completed.","params":{"completedTasks":"90","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"99/280 distributed tasks completed.","params":{"completedTasks":"99","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"109/280 distributed tasks completed.","params":{"completedTasks":"109","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"119/280 distributed tasks completed.","params":{"completedTasks":"119","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"127/280 distributed tasks completed.","params":{"completedTasks":"127","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"134/280 distributed tasks completed.","params":{"completedTasks":"134","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"162/280 distributed tasks completed.","params":{"completedTasks":"162","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"268/280 distributed tasks completed.","params":{"completedTasks":"268","totalTasks":"280"}}

{"messageCode":"BD_101029","message":"280/280 distributed tasks completed.","params":{"completedTasks":"280","totalTasks":"280"}}

{"messageCode":"BD_101081","message":"Finished writing results:"}

{"messageCode":"BD_101082","message":"* Count of features = 4855","params":{"resultCount":"4855"}}

{"messageCode":"BD_101083","message":"* Spatial extent = {\"xmin\":-140.5863419647051,\"ymin\":-27.777893572271292,\"xmax\":78.66546943034649,\"ymax\":82.49801821869467}","params":{"extent":"{\"xmin\":-140.5863419647051,\"ymin\":-27.777893572271292,\"xmax\":78.66546943034649,\"ymax\":82.49801821869467}"}}

{"messageCode":"BD_101084","message":"* Temporal extent = None","params":{"extent":"None"}}

{"messageCode":"BD_101226","message":"Feature service layer created: https://pythonapi.playground.esri.com/server/rest/services/Hosted/Aggregate_Points_Analysis_S22I46/FeatureServer/0","params":{"serviceUrl":"https://pythonapi.playground.esri.com/server/rest/services/Hosted/Aggregate_Points_Analysis_S22I46/FeatureServer/0"}}

{"messageCode":"BD_101054","message":"Some records have either missing or invalid geometries."}

{"messageCode":"BD_101054","message":"Some records have either missing or invalid geometries."}

Succeeded at Thu May 27 04:33:07 2021 (Elapsed Time: 1 minutes 49 seconds)

AggregatePoints GP Job: j7fb3186c1a174df5abd5fb1a806c0e76 finished successfully.

Inspect the results

Let us create a map and load the processed result which is a feature layer item.

processed_map = gis.map('New York, NY', 11)

processed_map

processed_map.add_layer(agg_result)By default the item we just created is not shared, so additinal processing requires login credentials. Let's [share()] the item to avoid this.

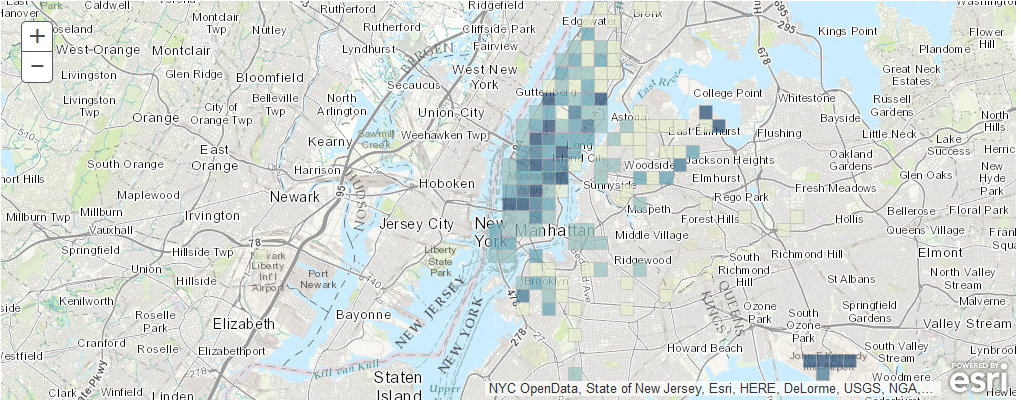

agg_result.share(org=True){'notSharedWith': [], 'itemId': '5cf0d7a3676f496fbcb9f9d460242583'}Let us inspect the analysis result using smart mapping. To learn more about this visualization capability, refer to the guide on Smart Mapping under the 'Mapping and Visualization' section.

map2 = gis.map("New York, NY", 11)

map2

map2.add_layer(agg_result, {

"renderer":"ClassedColorRenderer",

"field_name":"MAX_tip_amount",

"normalizationField":'MAX_trip_distance',

"classificationMethod":'natural-breaks',

"opacity":0.75

})We can now start seeing patterns, such as which pickup areas resulted in higher tips for the cab drivers.