In the previous notebook, we learned how to be more productive with Pandas by using sophisticated multi-level indexing, aggregating and combining data. In this notebook, we will explore the capabilities for working with Time Series data. We will start with the default datetime object in Python and then jump to data structures for working with time series data in Pandas. Let's dive into the details.

# Import libraries

import pandas as pd

import numpy as npTime Series are one of the most common types of structured data that we encounter in daily life. Stock prices, weather data, energy usage, and even digital health, are all examples of data that can be collected at different time intervals. Pandas was developed in the context of financial modeling, so it contains an extensive set of tools for working with dates, times, and time-indexed data. Date and Time data comes in various flavors such as:

- Timestamps: for specific instants in time such as November 5th, 2020 at 7:00am

- Fixed periods: such as the month November 2010 or the full year 2020

- Intervals of time: length of time between a particular beginning and end point

- Time deltas: reference an exact length of time such as a duration of 30.5 seconds

In this notebook, we will briefly introduce date and time data types in native python and then focus on how to work with date/time data in Pandas.

Date and Time in Python

Python's basic objects for working with time series data reside in the datetime module. Let's look at some examples.

# Import datetime

from datetime import datetimeBuilding datetime object

datetime objects can be used to quickly perform a host of useful functionalities. A date can be built in various ways and then properties of a datetime object can be used to get specific date and time details from it.

# Build a date

now = datetime.now()

nowdatetime.datetime(2020, 11, 25, 13, 23, 22, 106620)

datetime.now() creates a datetime object with current date and time down to the microsecond.

# Get date and time details from current date

print('Year: ', now.year)

print('Month: ', now.month)

print('Day: ', now.day)

print('Hour: ', now.hour)

print('Minutes: ', now.minute)

print('Seconds: ', now.second)

print('Microsecond: ', now.microsecond)Year: 2020 Month: 11 Day: 25 Hour: 13 Minutes: 23 Seconds: 22 Microsecond: 106620

A datetime object can also be created by specifying year, month, day, and other details.

datetime(year=2020, month=9, day=10)datetime.datetime(2020, 9, 10, 0, 0)

datetime(year=2020, month=9, day=10, hour=11, minute=30)datetime.datetime(2020, 9, 10, 11, 30)

Converting between String and DateTime

strftime and strptime methods can be used to format datetime objects and pandas Timestamp objects (discussed later in this section).

strftime- convert object to a string according to a given formatstrptime- parse a string into adatetimeobject given a corresponding format

Using strftime and strptime

strftime can be used to convert a datetime object to a string according to a given format. Standard string format codes for printing dates can read about in the strftime section of Python's datetime documentation.

# print data

nowdatetime.datetime(2020, 11, 25, 13, 23, 22, 106620)

# apply strftime

print(now.strftime('%Y-%m-%d'))

print(now.strftime('%F'))

print(now.strftime('%D'))

print(now.strftime('%B %d, %Y'))

print(now.strftime('%d %b, %Y'))

print(now.strftime('%A'))2020-11-25 2020-11-25 11/25/20 November 25, 2020 25 Nov, 2020 Wednesday

# Check type

print(type(now.strftime('%Y-%m-%d')))<class 'str'>

Similarly, strptime can be used to parse a string into a datetime object.

xmas_day = '2020-12-25'

datetime.strptime(xmas_day, '%Y-%m-%d')datetime.datetime(2020, 12, 25, 0, 0)

random_day = '20200422T203448'

datetime.strptime(random_day, '%Y%m%dT%H%M%S')datetime.datetime(2020, 4, 22, 20, 34, 48)

While datetime.strptime is a good way to parse a date when a format is known, it can be annoying to write a format each time.

Using parser.parse

dateutil module provides the parser.parse method that can parse dates from a variety of string formats.

# Import parse

from dateutil.parser import parse# Parse various strings

xmas_day = '2020-12-25'

ind_day = '4th of July, 2015'

random_day = 'Nov 05, 2020 10:45 PM'

random_day2 = '20200422T203448'

print(parse(xmas_day))

print(parse(ind_day))

print(parse(random_day))

print(parse(random_day2))2020-12-25 00:00:00 2015-07-04 00:00:00 2020-11-05 22:45:00 2020-04-22 20:34:48

# Check type

print(type(parse(xmas_day)))<class 'datetime.datetime'>

Date and Time in Pandas

Pandas provides the following fundamental data structures for working with time series data:

Timestamptype for working with time stamps. A replacement for Python's nativedatetime, it is based on the more efficient numpy.datetime64 data type. The associated Index structure isDatetimeIndexPeriodtype for working with time Periods. The associated index structure isPeriodIndexTimedeltatype for working with time deltas or durations. The associated index structure isTimedeltaIndex

The Basics

Pandas provides a Timestamp object, which combines the ease of datetime and dateutil with the efficient storage of numpy.datetime64. The to_datetime method parses many different kinds of date representations returning a Timestamp object.

Passing a single date to to_datetime returns a Timestamp.

# Create timestamp object

ind_day = pd.to_datetime('4th of July, 2020')

ind_dayTimestamp('2020-07-04 00:00:00')strftime can be used to convert this object to a string according to a given format.

# Get full name of Month using strftime

ind_day.strftime('%B')'July'

DatetimeIndex

Passing a series of dates by default returns a DatetimeIndex which can be used to index data in a Series or DataFrame.

dates = pd.to_datetime([datetime(2020, 12, 25), '4th of July, 2020',

'2018-Oct-6', '07-07-2017', '20200508', '20200422T203448'])

datesDatetimeIndex(['2020-12-25 00:00:00', '2020-07-04 00:00:00',

'2018-10-06 00:00:00', '2017-07-07 00:00:00',

'2020-05-08 00:00:00', '2020-04-22 20:34:48'],

dtype='datetime64[ns]', freq=None)DatetimeIndex objects do not have a frequency (hourly, daily, monthly etc.) by default, as they are just snapshots in time. As a result, arithmetic operations such as addition, subtraction, or multiplication cannot be performed directly.

Pandas also supports converting integer or float epoch times to Timestamp and DatetimeIndex. The default unit is nanoseconds, since that is how Timestamp objects are stored internally.

# Create Timestamp

pd.to_datetime(1349720105)Timestamp('1970-01-01 00:00:01.349720105')# Create DatetimeIndex with seconds

pd.to_datetime([1349720105, 1349806505, 1349892905,

1349979305, 1350065705], unit='s')DatetimeIndex(['2012-10-08 18:15:05', '2012-10-09 18:15:05',

'2012-10-10 18:15:05', '2012-10-11 18:15:05',

'2012-10-12 18:15:05'],

dtype='datetime64[ns]', freq=None)Notice that the date values change based on the unit specified.

# Create DatetimeIndex with milliseconds

pd.to_datetime([1349720105, 1349806505, 1349892905,

1349979305, 1350065705], unit='ms')DatetimeIndex(['1970-01-16 14:55:20.105000', '1970-01-16 14:56:46.505000',

'1970-01-16 14:58:12.905000', '1970-01-16 14:59:39.305000',

'1970-01-16 15:01:05.705000'],

dtype='datetime64[ns]', freq=None)pd.to_datetime([1349720105, 1349806505, 1349892905,

1349979305, 1350065705], unit='ns')DatetimeIndex(['1970-01-01 00:00:01.349720105',

'1970-01-01 00:00:01.349806505',

'1970-01-01 00:00:01.349892905',

'1970-01-01 00:00:01.349979305',

'1970-01-01 00:00:01.350065705'],

dtype='datetime64[ns]', freq=None)PeriodIndex

A Timestamp represents a point in time, whereas a Period represents an interval in time. Time Periods can be used to check if a specific event occurs within a certain period, such as when monitoring the number of flights taking off or the average stock price during a period.

A DatetimeIndex object can be converted to a PeriodIndex using the to_period() function by specifying a frequency (such as D to indicate daily frequency).

# Create daily time periods

period_daily = dates.to_period('D')

period_dailyPeriodIndex(['2020-12-25', '2020-07-04', '2018-10-06', '2017-07-07',

'2020-05-08', '2020-04-22'],

dtype='period[D]', freq='D')Since a period represents a time interval, it has a start_time and an end_time.

# Start time of a Period

period_daily.start_timeDatetimeIndex(['2020-12-25', '2020-07-04', '2018-10-06', '2017-07-07',

'2020-05-08', '2020-04-22'],

dtype='datetime64[ns]', freq=None)# End time of a Period

period_daily.end_timeDatetimeIndex(['2020-12-25 23:59:59.999999999',

'2020-07-04 23:59:59.999999999',

'2018-10-06 23:59:59.999999999',

'2017-07-07 23:59:59.999999999',

'2020-05-08 23:59:59.999999999',

'2020-04-22 23:59:59.999999999'],

dtype='datetime64[ns]', freq=None)Note that the start_time and end_time are DatetimeIndex objects because the start and end times are just a snapshot in time of the time period.

To reiterate the concept, let's look at another example.

# Create time period

p1 = pd.Period('2020-12-25')

print('Period is: ', p1)

# Create time stamp

t1 = pd.Timestamp('2020-12-25 18:12')

print('Timestamp is: ', t1)

# Test Time interval

p1.start_time < t1 < p1.end_timePeriod is: 2020-12-25 Timestamp is: 2020-12-25 18:12:00

True

Since Period is an interval of time, the test returns True showing that Timestamp lies within the time interval.

Arithmetic Operations

Now that a frequency is associated with the object, various arithmetic operations can be performed.

# Subtract 30 days

period_daily - 30PeriodIndex(['2020-11-25', '2020-06-04', '2018-09-06', '2017-06-07',

'2020-04-08', '2020-03-23'],

dtype='period[D]', freq='D')# Add 10 days

period_daily + 10PeriodIndex(['2021-01-04', '2020-07-14', '2018-10-16', '2017-07-17',

'2020-05-18', '2020-05-02'],

dtype='period[D]', freq='D')Similarly, we can create time periods with monthly frequency and perform arithmetic operations.

# Create monthly frequency

period_monthly = dates.to_period('M')

period_monthlyPeriodIndex(['2020-12', '2020-07', '2018-10', '2017-07', '2020-05', '2020-04'], dtype='period[M]', freq='M')

# Subtract 12 months

period_monthly - 12PeriodIndex(['2019-12', '2019-07', '2017-10', '2016-07', '2019-05', '2019-04'], dtype='period[M]', freq='M')

# Add 10 months

period_monthly + 10PeriodIndex(['2021-10', '2021-05', '2019-08', '2018-05', '2021-03', '2021-02'], dtype='period[M]', freq='M')

TimedeltaIndex

Time deltas represent the temporal difference between two datetime objects. Time deltas come in handy when you need to calculate the difference between two dates. A TimedeltaIndex can be easily created by subtracting a date from dates.

# Subtract a specific date from dates

dates - pd.to_datetime('2020-05-15')TimedeltaIndex([ '224 days 00:00:00', '50 days 00:00:00',

'-587 days +00:00:00', '-1043 days +00:00:00',

'-7 days +00:00:00', '-23 days +20:34:48'],

dtype='timedelta64[ns]', freq=None)# Subtract date using index

dates - dates[3]TimedeltaIndex(['1267 days 00:00:00', '1093 days 00:00:00',

'456 days 00:00:00', '0 days 00:00:00',

'1036 days 00:00:00', '1020 days 20:34:48'],

dtype='timedelta64[ns]', freq=None)Date Range and Frequency

Regular date sequences can be created using functions, such as pd.date_range() for timestamps, pd.period_range() for periods, and pd.timedelta_range() for time deltas. For many applications, this is sufficient. Fixed frequency, such as daily, monthly, or every 15 minutes, are often desirable. Pandas provides a full suite of standard time series frequencies found here.

- Create a Sequence of Dates - by default, the frequency is daily. Both the start and end dates are included in the result.

pd.date_range('2020-08-03', '2020-08-10')DatetimeIndex(['2020-08-03', '2020-08-04', '2020-08-05', '2020-08-06',

'2020-08-07', '2020-08-08', '2020-08-09', '2020-08-10'],

dtype='datetime64[ns]', freq='D')- Sequence of Dates with Period - alternatively, a date range can be specified with a startpoint and a number of periods.

dt_rng = pd.date_range('2020-08-03', periods=8)

dt_rngDatetimeIndex(['2020-08-03', '2020-08-04', '2020-08-05', '2020-08-06',

'2020-08-07', '2020-08-08', '2020-08-09', '2020-08-10'],

dtype='datetime64[ns]', freq='D')Note that the output when using date_range() is a DatetimeIndex object where each date is a snapshot in time (Timestamp).

dt_rng[0]Timestamp('2020-08-03 00:00:00', freq='D')- Changing the Frequency - the frequency can be modified by altering the

freqargument. Pandas provides a full suite of standard time series frequencies found here.

# Date range with Hourly Frequency

pd.date_range('2020-08-03', periods=8, freq='H')DatetimeIndex(['2020-08-03 00:00:00', '2020-08-03 01:00:00',

'2020-08-03 02:00:00', '2020-08-03 03:00:00',

'2020-08-03 04:00:00', '2020-08-03 05:00:00',

'2020-08-03 06:00:00', '2020-08-03 07:00:00'],

dtype='datetime64[ns]', freq='H')# Date range with Month Start Frequency

pd.date_range('2020-02-03', periods=8, freq='MS')DatetimeIndex(['2020-03-01', '2020-04-01', '2020-05-01', '2020-06-01',

'2020-07-01', '2020-08-01', '2020-09-01', '2020-10-01'],

dtype='datetime64[ns]', freq='MS')- Create a Sequence of Periods

# Period Range with Monthly Frequency

pd.period_range('2020-02-03', periods=8, freq='M')PeriodIndex(['2020-02', '2020-03', '2020-04', '2020-05', '2020-06', '2020-07',

'2020-08', '2020-09'],

dtype='period[M]', freq='M')pd.period_range() generated eight periods with monthly frequency. Note that the output is a PeriodIndex object. As mentioned earlier, Period represents an interval in time, whereas Timestamp represents a point in time.

prd_rng = pd.period_range('2020-02-03', periods=8, freq='D')

prd_rngPeriodIndex(['2020-02-03', '2020-02-04', '2020-02-05', '2020-02-06',

'2020-02-07', '2020-02-08', '2020-02-09', '2020-02-10'],

dtype='period[D]', freq='D')# Print start and end times

print('Start time for period at 0 index: ', prd_rng[0].start_time)

print('End time for period at 0 index: ', prd_rng[0].end_time)Start time for period at 0 index: 2020-02-03 00:00:00 End time for period at 0 index: 2020-02-03 23:59:59.999999999

- Create a Sequence of Durations (Time Deltas)

# Time Deltas with daily frequency

pd.timedelta_range(start='1 day', periods=6)TimedeltaIndex(['1 days', '2 days', '3 days', '4 days', '5 days', '6 days'], dtype='timedelta64[ns]', freq='D')

# Time deltas with hourly frequency

pd.timedelta_range(0, periods=8, freq='H')TimedeltaIndex(['00:00:00', '01:00:00', '02:00:00', '03:00:00', '04:00:00',

'05:00:00', '06:00:00', '07:00:00'],

dtype='timedelta64[ns]', freq='H')# Time deltas with a 6 hour frequency

pd.timedelta_range(start='1 day', end='3 days', freq='6H')TimedeltaIndex(['1 days 00:00:00', '1 days 06:00:00', '1 days 12:00:00',

'1 days 18:00:00', '2 days 00:00:00', '2 days 06:00:00',

'2 days 12:00:00', '2 days 18:00:00', '3 days 00:00:00'],

dtype='timedelta64[ns]', freq='6H')Combining Frequency Codes

Frequency codes can also be combined with numbers to specify other frequencies. For example, a frequency of 1 hour and 30 minutes can be created by combining the hour H and minute T codes.

pd.date_range('2020-08-03', periods=10, freq='1H30T')DatetimeIndex(['2020-08-03 00:00:00', '2020-08-03 01:30:00',

'2020-08-03 03:00:00', '2020-08-03 04:30:00',

'2020-08-03 06:00:00', '2020-08-03 07:30:00',

'2020-08-03 09:00:00', '2020-08-03 10:30:00',

'2020-08-03 12:00:00', '2020-08-03 13:30:00'],

dtype='datetime64[ns]', freq='90T')Similarly, a frequency of 1 day 5 hours and 30 mins can be created by combining the day D, hour H and minute T codes. As an example, we will create a timedelta_range.

pd.timedelta_range(0, periods=10, freq='1D5H30T')TimedeltaIndex([ '0 days 00:00:00', '1 days 05:30:00', '2 days 11:00:00',

'3 days 16:30:00', '4 days 22:00:00', '6 days 03:30:00',

'7 days 09:00:00', '8 days 14:30:00', '9 days 20:00:00',

'11 days 01:30:00'],

dtype='timedelta64[ns]', freq='1770T')Indexing and Selection

Pandas time series tools provide the ability to use dates and times as indices to organize data. This allows for the benefits of indexed data, such as automatic alignment, data slicing, and selection etc.

Pandas was developed with a financial context, so it includes some very specific tools for financial data. The pandas-datareader package (installable via conda install pandas-datareader) can import financial data from a number of available sources. Here, we will load stock price data for GE as an example.

# Get stock data

from pandas_datareader import data

ge = data.DataReader('GE', start='2010', end='2021',

data_source='yahoo')

ge.head()| High | Low | Open | Close | Volume | Adj Close | |

|---|---|---|---|---|---|---|

| Date | ||||||

| 2010-01-04 | 15.038462 | 14.567307 | 14.634615 | 14.855769 | 69763000.0 | 10.840267 |

| 2010-01-05 | 15.067307 | 14.855769 | 14.865385 | 14.932693 | 67132600.0 | 10.896401 |

| 2010-01-06 | 15.019231 | 14.846154 | 14.932693 | 14.855769 | 57683400.0 | 10.840267 |

| 2010-01-07 | 15.846154 | 14.836538 | 14.884615 | 15.625000 | 192891100.0 | 11.401575 |

| 2010-01-08 | 16.048077 | 15.644231 | 15.682693 | 15.961538 | 119717100.0 | 11.647147 |

# Check data type

ge.index.dtypedtype('<M8[ns]')Pandas stores timestamps using NumPy’s datetime64 data type at the nanosecond level. Scalar values from a DatetimeIndex are pandas Timestamp objects.

# Check data type

ge.index[0]Timestamp('2010-01-04 00:00:00')- Select Data for Specific Date

ge.loc['2015-07-06',:]High 2.561539e+01 Low 2.519231e+01 Open 2.550961e+01 Close 2.529808e+01 Volume 2.897240e+07 Adj Close 2.216700e+01 Name: 2015-07-06 00:00:00, dtype: float64

- Dates can be specified in different formats as follows:

print(ge.loc['07/06/2015',:])

print(ge.loc['20150706',:])

print(ge.loc[datetime(2015, 7, 6),:])High 2.561539e+01 Low 2.519231e+01 Open 2.550961e+01 Close 2.529808e+01 Volume 2.897240e+07 Adj Close 2.216700e+01 Name: 2015-07-06 00:00:00, dtype: float64 High 2.561539e+01 Low 2.519231e+01 Open 2.550961e+01 Close 2.529808e+01 Volume 2.897240e+07 Adj Close 2.216700e+01 Name: 2015-07-06 00:00:00, dtype: float64 High 2.561539e+01 Low 2.519231e+01 Open 2.550961e+01 Close 2.529808e+01 Volume 2.897240e+07 Adj Close 2.216700e+01 Name: 2015-07-06 00:00:00, dtype: float64

- Partial string indexing allows for selection using just the year or month

# Using year

ge.loc['2020']| High | Low | Open | Close | Volume | Adj Close | |

|---|---|---|---|---|---|---|

| Date | ||||||

| 2020-01-02 | 11.96 | 11.23 | 11.23 | 11.93 | 87421800.0 | 11.880686 |

| 2020-01-03 | 12.00 | 11.53 | 11.57 | 11.97 | 85885800.0 | 11.920521 |

| 2020-01-06 | 12.21 | 11.84 | 11.84 | 12.14 | 111948700.0 | 12.089818 |

| 2020-01-07 | 12.24 | 11.92 | 12.15 | 12.05 | 70579300.0 | 12.000189 |

| 2020-01-08 | 12.05 | 11.87 | 11.99 | 11.94 | 55402500.0 | 11.890644 |

| ... | ... | ... | ... | ... | ... | ... |

| 2020-11-19 | 9.76 | 9.51 | 9.62 | 9.66 | 87177500.0 | 9.660000 |

| 2020-11-20 | 9.83 | 9.59 | 9.64 | 9.76 | 79923400.0 | 9.760000 |

| 2020-11-23 | 10.27 | 9.86 | 9.86 | 10.07 | 108197300.0 | 10.070000 |

| 2020-11-24 | 10.85 | 10.40 | 10.71 | 10.45 | 175891500.0 | 10.450000 |

| 2020-11-25 | 10.56 | 10.34 | 10.53 | 10.50 | 107645396.0 | 10.500000 |

229 rows × 6 columns

# Using year-month

ge.loc['2020-10']| High | Low | Open | Close | Volume | Adj Close | |

|---|---|---|---|---|---|---|

| Date | ||||||

| 2020-10-01 | 6.29 | 6.11 | 6.27 | 6.24 | 79175600.0 | 6.24 |

| 2020-10-02 | 6.40 | 6.05 | 6.05 | 6.39 | 90076400.0 | 6.39 |

| 2020-10-05 | 6.45 | 6.32 | 6.39 | 6.41 | 58283600.0 | 6.41 |

| 2020-10-06 | 6.58 | 6.11 | 6.43 | 6.17 | 170066200.0 | 6.17 |

| 2020-10-07 | 6.40 | 6.21 | 6.22 | 6.31 | 83286100.0 | 6.31 |

| 2020-10-08 | 6.67 | 6.34 | 6.36 | 6.65 | 103167300.0 | 6.65 |

| 2020-10-09 | 7.07 | 6.70 | 7.07 | 6.84 | 171507500.0 | 6.84 |

| 2020-10-12 | 6.92 | 6.74 | 6.92 | 6.83 | 89036400.0 | 6.83 |

| 2020-10-13 | 6.82 | 6.66 | 6.79 | 6.72 | 75287600.0 | 6.72 |

| 2020-10-14 | 6.89 | 6.72 | 6.72 | 6.82 | 98076200.0 | 6.82 |

| 2020-10-15 | 6.88 | 6.61 | 6.70 | 6.87 | 89252700.0 | 6.87 |

| 2020-10-16 | 7.35 | 6.94 | 6.96 | 7.29 | 169147300.0 | 7.29 |

| 2020-10-19 | 7.47 | 7.23 | 7.39 | 7.29 | 130837100.0 | 7.29 |

| 2020-10-20 | 7.42 | 7.27 | 7.35 | 7.34 | 98420100.0 | 7.34 |

| 2020-10-21 | 7.41 | 7.27 | 7.28 | 7.32 | 73811100.0 | 7.32 |

| 2020-10-22 | 7.75 | 7.32 | 7.33 | 7.72 | 95766900.0 | 7.72 |

| 2020-10-23 | 8.03 | 7.56 | 7.93 | 7.63 | 132563200.0 | 7.63 |

| 2020-10-26 | 7.56 | 7.28 | 7.46 | 7.38 | 104254400.0 | 7.38 |

| 2020-10-27 | 7.40 | 7.09 | 7.40 | 7.10 | 98170000.0 | 7.10 |

| 2020-10-28 | 7.86 | 7.41 | 7.51 | 7.42 | 253494100.0 | 7.42 |

| 2020-10-29 | 7.74 | 7.31 | 7.66 | 7.37 | 123298000.0 | 7.37 |

| 2020-10-30 | 7.54 | 7.29 | 7.34 | 7.42 | 102370100.0 | 7.42 |

- Timestamps can be sliced using the

:notation

# Slice using Dates

ge.loc['2020-05-04':'2020-05-12']| High | Low | Open | Close | Volume | Adj Close | |

|---|---|---|---|---|---|---|

| Date | ||||||

| 2020-05-04 | 6.31 | 6.15 | 6.30 | 6.21 | 136852400.0 | 6.190472 |

| 2020-05-05 | 6.46 | 6.16 | 6.28 | 6.20 | 116998500.0 | 6.180502 |

| 2020-05-06 | 6.25 | 5.97 | 6.20 | 5.98 | 117253600.0 | 5.961195 |

| 2020-05-07 | 6.26 | 6.06 | 6.06 | 6.11 | 100663300.0 | 6.090786 |

| 2020-05-08 | 6.33 | 6.16 | 6.21 | 6.29 | 93934600.0 | 6.270220 |

| 2020-05-11 | 6.25 | 6.13 | 6.24 | 6.19 | 71843000.0 | 6.170535 |

| 2020-05-12 | 6.28 | 6.00 | 6.22 | 6.00 | 95652200.0 | 5.981132 |

# Slice using year-month

ge.loc['2020-05':'2020-07']| High | Low | Open | Close | Volume | Adj Close | |

|---|---|---|---|---|---|---|

| Date | ||||||

| 2020-05-01 | 6.74 | 6.41 | 6.67 | 6.50 | 120376500.0 | 6.479559 |

| 2020-05-04 | 6.31 | 6.15 | 6.30 | 6.21 | 136852400.0 | 6.190472 |

| 2020-05-05 | 6.46 | 6.16 | 6.28 | 6.20 | 116998500.0 | 6.180502 |

| 2020-05-06 | 6.25 | 5.97 | 6.20 | 5.98 | 117253600.0 | 5.961195 |

| 2020-05-07 | 6.26 | 6.06 | 6.06 | 6.11 | 100663300.0 | 6.090786 |

| ... | ... | ... | ... | ... | ... | ... |

| 2020-07-27 | 6.85 | 6.69 | 6.84 | 6.71 | 70704000.0 | 6.698927 |

| 2020-07-28 | 6.96 | 6.69 | 6.70 | 6.89 | 76033600.0 | 6.878630 |

| 2020-07-29 | 7.00 | 6.52 | 6.99 | 6.59 | 148442400.0 | 6.579125 |

| 2020-07-30 | 6.51 | 6.26 | 6.50 | 6.26 | 127526900.0 | 6.249670 |

| 2020-07-31 | 6.29 | 6.00 | 6.25 | 6.07 | 142731700.0 | 6.059984 |

64 rows × 6 columns

Resampling, Shifting, and Windowing

Resampling

The process of converting a time series from one frequency to another is called Resampling. When higher frequency data is aggregated to lower frequency, it is called downsampling, while converting lower frequency to higher frequency is called upsampling. For simplicity, we'll use just the closing price Close data.

ge = ge['Close']

ge.head()Date 2010-01-04 14.855769 2010-01-05 14.932693 2010-01-06 14.855769 2010-01-07 15.625000 2010-01-08 15.961538 Name: Close, dtype: float64

Resampling can be done using the resample() method, or the much simpler asfreq() method.

resample(): is a data aggregation method. It is a flexible and high-performance method that can be used to process very large time series.asfreq(): is a data selection method.

We will downsample the data using 'business year end' frequency BA and create a plot of the data returned after applying the two functions.

# Using resample()

ge.resample('BA').mean()Date 2010-12-31 15.893658 2011-12-30 17.434333 2012-12-31 19.453038 2013-12-31 23.083371 2014-12-31 24.994277 2015-12-31 25.767819 2016-12-30 29.180174 2017-12-29 24.972418 2018-12-31 12.402774 2019-12-31 9.782701 2020-12-31 7.963930 Freq: BA-DEC, Name: Close, dtype: float64

# Using asfreq()

ge.asfreq('BA')Date 2010-12-31 17.586538 2011-12-30 17.221153 2012-12-31 20.182692 2013-12-31 26.951923 2014-12-31 24.298077 2015-12-31 29.951923 2016-12-30 30.384615 2017-12-29 16.778847 2018-12-31 7.278846 2019-12-31 11.160000 Freq: BA-DEC, Name: Close, dtype: float64

Downsample Plot

Plot the down-sampled data to compare the returned data of the two functions.

# Import Plotting libraries

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn; seaborn.set()# Plot data

plt.figure(figsize=(15,10))

ge.plot(alpha=0.5, style='-')

ge.resample('BA').mean().plot(style=':')

ge.asfreq('BA').plot(style='--');

plt.legend(['input', 'resample', 'asfreq'],

loc='upper left');

We can see that at each point, resample returns the average of the previous year, as shown by the dotted line, while asfreq reports the value at the end of the year, as shown by dashed line.

Upsampling involves converting from a low frequency to a higher frequency where no aggregation is needed. resample() and asfreq() are largely equivalent in the case of upsampling. The default for both methods is to leave the up-sampled points empty (filled with NA values). The asfreq() method accepts arguments to specify how values are imputed.

We will subset the data and then upsample with daily D frequency.

# Subset Data

ge_up_data = ge.iloc[:10]

ge_up_dataDate 2010-01-04 14.855769 2010-01-05 14.932693 2010-01-06 14.855769 2010-01-07 15.625000 2010-01-08 15.961538 2010-01-11 16.115385 2010-01-12 16.125000 2010-01-13 16.182692 2010-01-14 16.057692 2010-01-15 15.807693 Name: Close, dtype: float64

# Upsample with Daily frequency

ge_up_data.asfreq('D')Date 2010-01-04 14.855769 2010-01-05 14.932693 2010-01-06 14.855769 2010-01-07 15.625000 2010-01-08 15.961538 2010-01-09 NaN 2010-01-10 NaN 2010-01-11 16.115385 2010-01-12 16.125000 2010-01-13 16.182692 2010-01-14 16.057692 2010-01-15 15.807693 Freq: D, Name: Close, dtype: float64

The default is to leave the up-sampled points empty (filled with NA values). Forward ffill or Backward bfill methods can be used to impute missing values.

# Using forward fill

ge_up_data.asfreq('D', method='ffill')Date 2010-01-04 14.855769 2010-01-05 14.932693 2010-01-06 14.855769 2010-01-07 15.625000 2010-01-08 15.961538 2010-01-09 15.961538 2010-01-10 15.961538 2010-01-11 16.115385 2010-01-12 16.125000 2010-01-13 16.182692 2010-01-14 16.057692 2010-01-15 15.807693 Freq: D, Name: Close, dtype: float64

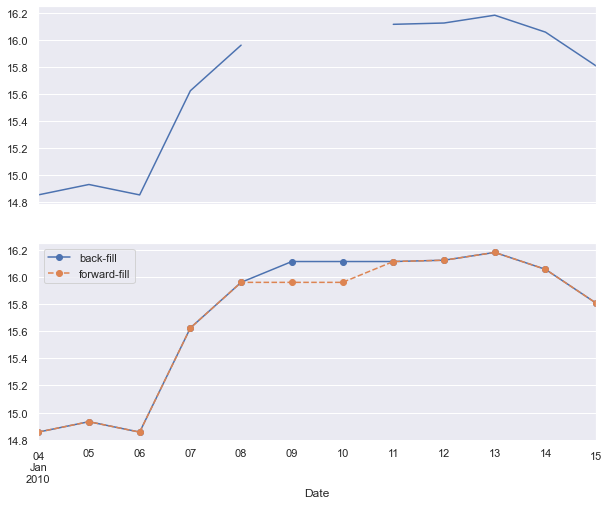

Upsample Plot

Plot the up-sampled data to compare the data returned from various fill methods.

fig, ax = plt.subplots(2, figsize=(10,8), sharex=True)

ge_up_data.asfreq('D').plot(ax=ax[0])

ge_up_data.asfreq('D', method='bfill').plot(ax=ax[1], style='-o')

ge_up_data.asfreq('D', method='ffill').plot(ax=ax[1], style='--o')

ax[1].legend(["back-fill", "forward-fill"]);

The top plot shows upsampled data using a daily frequency with default settings where non-business days are NA values that do not appear on the plot. The bottom plot shows forward and backward fill strategies for filling the gaps.

Shifting

A common use case of time series is shifting of data in time i.e. moving data backward and forward through time. Pandas includes shift() and tshift() methods for shifting data.

shift()- shifts the datatshift()- shifts the index

In both cases, the shift is specified in multiples of the frequency. Let's look at some examples.

# Print Data

ge_up_dataDate 2010-01-04 14.855769 2010-01-05 14.932693 2010-01-06 14.855769 2010-01-07 15.625000 2010-01-08 15.961538 2010-01-11 16.115385 2010-01-12 16.125000 2010-01-13 16.182692 2010-01-14 16.057692 2010-01-15 15.807693 Name: Close, dtype: float64

# Shift Forward

ge_up_data.shift(2)Date 2010-01-04 NaN 2010-01-05 NaN 2010-01-06 14.855769 2010-01-07 14.932693 2010-01-08 14.855769 2010-01-11 15.625000 2010-01-12 15.961538 2010-01-13 16.115385 2010-01-14 16.125000 2010-01-15 16.182692 Name: Close, dtype: float64

# Shift Backward

ge_up_data.shift(-2)Date 2010-01-04 14.855769 2010-01-05 15.625000 2010-01-06 15.961538 2010-01-07 16.115385 2010-01-08 16.125000 2010-01-11 16.182692 2010-01-12 16.057692 2010-01-13 15.807693 2010-01-14 NaN 2010-01-15 NaN Name: Close, dtype: float64

Both forward and backward shift() opertions shift the data leaving the index unmodified. Let's look at how index is modified with tshift().

# Shift backward with Index

ge_up_data.tshift(-2)Date 2009-12-31 14.855769 2010-01-01 14.932693 2010-01-04 14.855769 2010-01-05 15.625000 2010-01-06 15.961538 2010-01-07 16.115385 2010-01-08 16.125000 2010-01-11 16.182692 2010-01-12 16.057692 2010-01-13 15.807693 Freq: B, Name: Close, dtype: float64

The index for the original data ranges from 2008-01-02 - 2008-01-15. Using thsift() for shifting backward, we see that the index now ranges from 2007-12-31 - 2008-01-11. Shift takes the same frequency as the frequency of datetime.

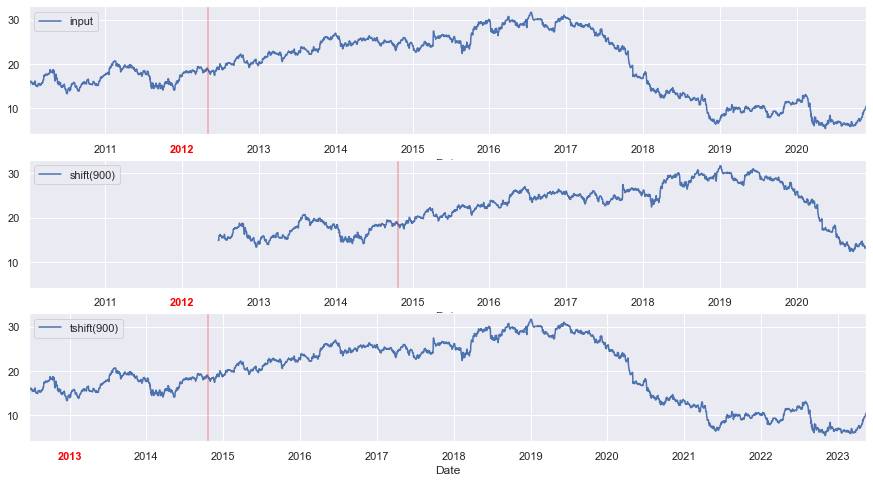

Plot the Data

Let's look at another example of shifting data using shift() and tshift() to shift the ge data. We will plot the data to visualize the differences.

fig, ax = plt.subplots(3, figsize=(15,8), sharey=True)

# apply a frequency to the data

ge = ge.asfreq('D', method='pad')

# shift the data

ge.plot(ax=ax[0])

ge.shift(900).plot(ax=ax[1])

ge.tshift(900).plot(ax=ax[2])

# legends and annotations

local_max = pd.to_datetime('2012-05-05')

offset = pd.Timedelta(900, 'D')

ax[0].legend(['input'], loc=2)

ax[0].get_xticklabels()[2].set(weight='heavy', color='red')

ax[0].axvline(local_max, alpha=0.3, color='red')

ax[1].legend(['shift(900)'], loc=2)

ax[1].get_xticklabels()[2].set(weight='heavy', color='red')

ax[1].axvline(local_max + offset, alpha=0.3, color='red')

ax[2].legend(['tshift(900)'], loc=2)

ax[2].get_xticklabels()[1].set(weight='heavy', color='red')

ax[2].axvline(local_max + offset, alpha=0.3, color='red');

The top panel in the plot shows ge data with a red line showing a local date. The middle panel shows the shift(900) operation which shifts the data by 900 days, leaving NA values at early indices. This is represented by the fact that there is no line on the plot for first 900 days. The bottom panel shows the tshift(900) operation, which shifts the index by 900 days, changing the start and end date ranges as shown.

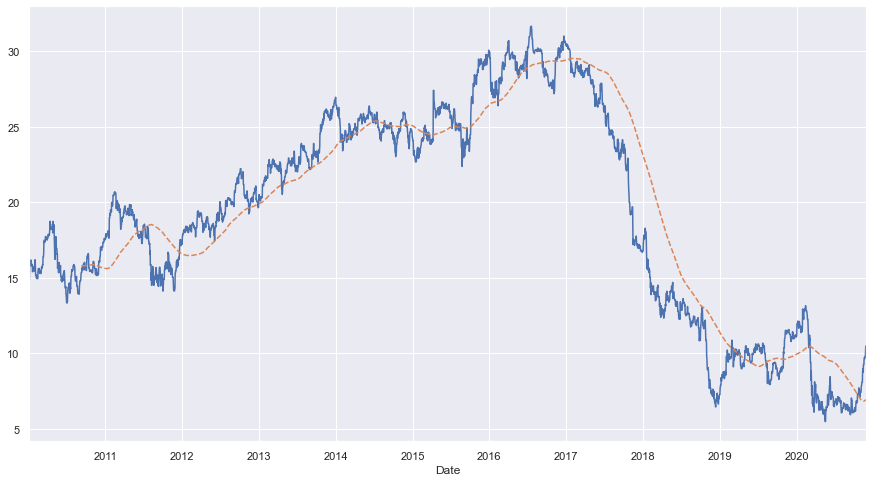

Rolling Window

Rolling statistics are another time series specific operation where data is evaluated over a sliding window. Rolling operations are useful for smoothing noisy data. The rolling() operator behaves similarly to resample and groupby operations, but instead of grouping, it enables grouping over a sliding window.

plt.figure(figsize=(15,8))

# plot data

ge.plot()

# plot 250 day rolling mean

ge.rolling(250).mean().plot(style='--');

The plot shows GE stock price data. The dashed line represents 250-day moving window average of the stock price.

Time Zones

We live in a global world where many companies operate in different time zones. This makes it crucial to carefully analyze the data based on the correct time zone. Many users work with time series in UTC (coordinated universal time) time which is the current international standard. Time zones are expressed as offsets from UTC; for example, California is seven hours behind UTC during daylight saving time (DST) and eight hours behind the rest of the year.

Localization and Conversion

Time series objects in Pandas do not have an assigned time zone by default. Let's consider the GE stock price ge data as an example.

# print data

ge.head()Date 2010-01-04 14.855769 2010-01-05 14.932693 2010-01-06 14.855769 2010-01-07 15.625000 2010-01-08 15.961538 Freq: D, Name: Close, dtype: float64

# check timezone

print(ge.index.tz)None

The index's tz field is None. We can assign a time zone using tz_localize method.

# localize timezone

ge_utc = ge.tz_localize('UTC')

ge_utc.indexDatetimeIndex(['2010-01-04 00:00:00+00:00', '2010-01-05 00:00:00+00:00',

'2010-01-06 00:00:00+00:00', '2010-01-07 00:00:00+00:00',

'2010-01-08 00:00:00+00:00', '2010-01-09 00:00:00+00:00',

'2010-01-10 00:00:00+00:00', '2010-01-11 00:00:00+00:00',

'2010-01-12 00:00:00+00:00', '2010-01-13 00:00:00+00:00',

...

'2020-11-16 00:00:00+00:00', '2020-11-17 00:00:00+00:00',

'2020-11-18 00:00:00+00:00', '2020-11-19 00:00:00+00:00',

'2020-11-20 00:00:00+00:00', '2020-11-21 00:00:00+00:00',

'2020-11-22 00:00:00+00:00', '2020-11-23 00:00:00+00:00',

'2020-11-24 00:00:00+00:00', '2020-11-25 00:00:00+00:00'],

dtype='datetime64[ns, UTC]', name='Date', length=3979, freq='D')Once a time series has been localized to a particular time zone, it can be easily converted to another time zone with tz_convert.

ge_ny = ge_utc.tz_convert('America/New_York')

ge_ny.indexDatetimeIndex(['2010-01-03 19:00:00-05:00', '2010-01-04 19:00:00-05:00',

'2010-01-05 19:00:00-05:00', '2010-01-06 19:00:00-05:00',

'2010-01-07 19:00:00-05:00', '2010-01-08 19:00:00-05:00',

'2010-01-09 19:00:00-05:00', '2010-01-10 19:00:00-05:00',

'2010-01-11 19:00:00-05:00', '2010-01-12 19:00:00-05:00',

...

'2020-11-15 19:00:00-05:00', '2020-11-16 19:00:00-05:00',

'2020-11-17 19:00:00-05:00', '2020-11-18 19:00:00-05:00',

'2020-11-19 19:00:00-05:00', '2020-11-20 19:00:00-05:00',

'2020-11-21 19:00:00-05:00', '2020-11-22 19:00:00-05:00',

'2020-11-23 19:00:00-05:00', '2020-11-24 19:00:00-05:00'],

dtype='datetime64[ns, America/New_York]', name='Date', length=3979, freq='D')Epoch time can be read as timezone-naive timestamps and then localized to the appropriate timezone using the tz_localize method.

# Localize epoch as a timestamp with US/Pacific timezone

pd.Timestamp(1262347200000000000).tz_localize('US/Pacific')Timestamp('2010-01-01 12:00:00-0800', tz='US/Pacific')# Localize epoch as a DatetimeIndex with UTC timezone

pd.DatetimeIndex([1262347200000000000]).tz_localize('UTC')DatetimeIndex(['2010-01-01 12:00:00+00:00'], dtype='datetime64[ns, UTC]', freq=None)

Operating between TIme Zones

If two time series with different time zones are combined, the result will be UTC.

# create two time series with different time zones

ge_la = ge_utc.tz_convert('America/Los_Angeles')

ts1 = ge_ny[:7]

print(ts1)

print('Timezone of ts1: ', ts1.index.tz)

print()

ts2 = ge_la[:7]

print(ts2)

print('Timezone of ts2: ', ts2.index.tz)Date 2010-01-03 19:00:00-05:00 14.855769 2010-01-04 19:00:00-05:00 14.932693 2010-01-05 19:00:00-05:00 14.855769 2010-01-06 19:00:00-05:00 15.625000 2010-01-07 19:00:00-05:00 15.961538 2010-01-08 19:00:00-05:00 15.961538 2010-01-09 19:00:00-05:00 15.961538 Freq: D, Name: Close, dtype: float64 Timezone of ts1: America/New_York Date 2010-01-03 16:00:00-08:00 14.855769 2010-01-04 16:00:00-08:00 14.932693 2010-01-05 16:00:00-08:00 14.855769 2010-01-06 16:00:00-08:00 15.625000 2010-01-07 16:00:00-08:00 15.961538 2010-01-08 16:00:00-08:00 15.961538 2010-01-09 16:00:00-08:00 15.961538 Freq: D, Name: Close, dtype: float64 Timezone of ts2: America/Los_Angeles

# add two time series

ts3 = ts1 + ts2

print(ts3)

print('Timezone of ts3: ', ts3.index.tz)Date 2010-01-04 00:00:00+00:00 29.711538 2010-01-05 00:00:00+00:00 29.865385 2010-01-06 00:00:00+00:00 29.711538 2010-01-07 00:00:00+00:00 31.250000 2010-01-08 00:00:00+00:00 31.923077 2010-01-09 00:00:00+00:00 31.923077 2010-01-10 00:00:00+00:00 31.923077 Freq: D, Name: Close, dtype: float64 Timezone of ts3: UTC

Common Time Zones

Time zone information in python comes from a third party library called pytz (installable using conda install pytz). Let's look at some examples.

# import library

import pytz# common time zones

pytz.common_timezones[-10:]['Pacific/Wake', 'Pacific/Wallis', 'US/Alaska', 'US/Arizona', 'US/Central', 'US/Eastern', 'US/Hawaii', 'US/Mountain', 'US/Pacific', 'UTC']

To get a time zone object, pytz.timezone can be used.

tz = pytz.timezone('US/Pacific')

tz<DstTzInfo 'US/Pacific' LMT-1 day, 16:07:00 STD>

Common Use Cases

Importing data is the first step in any data science project. Often, you’ll work with data that contains date and time elements. In this section, we will see how to: 1. Read date columns from data. 2. Split a column with date and time into separate columns. 3. Combine different date and time columns to form a datetime column.

We will use sample earthquake data with date and time information to illustrate this example. The data is stored in '.csv' format as an item. We will download the '.csv' file in a folder, as shown below, and then import the data for analysis.

Note: the dataset used in this example has been curated for illustration purposes.

# Get item with data

import os

from arcgis import GIS

gis = GIS()

earthquake_item = gis.content.get('008fcafa23a24351b4f37f7c8a542cb1')

earthquake_item# Download data item

# Create folder for file download

data_folder = '../../samples_data'

if not os.path.exists(data_folder):

os.makedirs(data_folder)

print(f'Created data folder at: {data_folder}')

else:

print(f'Using existing data folder at: {data_folder}')

# Download file

filename = 'earthquakes_data.csv'

if not os.path.exists(os.path.join(data_folder, filename)):

earthquake_item.download(data_folder, 'earthquakes_data.csv')

print(f'{filename} downloaded')

else:

print(f'{filename} exists')Created data folder at: ../../samples_data earthquakes_data.csv downloaded

Import data with date/time

Data with dates can be easily imported as datetime by setting the parse_dates parameter. Let's import the data and check the data types.

# Read data

quake_data = pd.read_csv('./samples_data/earthquakes_data.csv', parse_dates=['datetime'])

quake_data.head()| datetime | latitude | longitude | depth | magnitude | |

|---|---|---|---|---|---|

| 0 | 1973-08-09 02:18:00 | 40.260 | -124.233 | 2.0 | 5.1 |

| 1 | 1976-11-27 02:49:00 | 40.998 | -120.447 | 5.0 | 5.0 |

| 2 | 1977-02-22 06:24:00 | 38.478 | -119.287 | 5.0 | 5.0 |

| 3 | 1978-09-04 21:54:00 | 38.814 | -119.811 | 14.0 | 5.2 |

| 4 | 1979-10-07 20:54:00 | 38.224 | -119.348 | 11.0 | 5.2 |

# Check Data Types

quake_data.dtypesdatetime datetime64[ns] latitude float64 longitude float64 depth float64 magnitude float64 dtype: object

The column with date and time information is imported as a datetime data type.

Split into multiple columns

To split a column with date and time information into separate columns, Series.dt can be used to access the values of the series such as year, month, day etc.

# Create new columns

quake_data['date'] = quake_data['datetime'].dt.date

quake_data['time'] = quake_data['datetime'].dt.time

quake_data['year'] = quake_data['datetime'].dt.year

quake_data['month'] = quake_data['datetime'].dt.month

quake_data['day'] = quake_data['datetime'].dt.day

quake_data['hour'] = quake_data['datetime'].dt.hour

quake_data['minute'] = quake_data['datetime'].dt.minute

quake_data['second'] = quake_data['datetime'].dt.second# Check dataset

quake_data.head()| datetime | latitude | longitude | depth | magnitude | date | time | year | month | day | hour | minute | second | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1973-08-09 02:18:00 | 40.260 | -124.233 | 2.0 | 5.1 | 1973-08-09 | 02:18:00 | 1973 | 8 | 9 | 2 | 18 | 0 |

| 1 | 1976-11-27 02:49:00 | 40.998 | -120.447 | 5.0 | 5.0 | 1976-11-27 | 02:49:00 | 1976 | 11 | 27 | 2 | 49 | 0 |

| 2 | 1977-02-22 06:24:00 | 38.478 | -119.287 | 5.0 | 5.0 | 1977-02-22 | 06:24:00 | 1977 | 2 | 22 | 6 | 24 | 0 |

| 3 | 1978-09-04 21:54:00 | 38.814 | -119.811 | 14.0 | 5.2 | 1978-09-04 | 21:54:00 | 1978 | 9 | 4 | 21 | 54 | 0 |

| 4 | 1979-10-07 20:54:00 | 38.224 | -119.348 | 11.0 | 5.2 | 1979-10-07 | 20:54:00 | 1979 | 10 | 7 | 20 | 54 | 0 |

New columns have been created for various date and time information.

# Check data types

quake_data.dtypesdatetime datetime64[ns] latitude float64 longitude float64 depth float64 magnitude float64 date object time object year int64 month int64 day int64 hour int64 minute int64 second int64 dtype: object

Notice that date column is of object data type. It can be easily converted to a datetime object using pd.to_datetime.

quake_data['date'] = pd.to_datetime(quake_data['date'])

quake_data.dtypesdatetime datetime64[ns] latitude float64 longitude float64 depth float64 magnitude float64 date datetime64[ns] time object year int64 month int64 day int64 hour int64 minute int64 second int64 dtype: object

Combine columns with Date/Time information

Consider a scenario where the data did not have a datetime column but the year, month, day, hour, minute, second date and time elements were stored as individual columns as shown below.

# Create data

quake_data.drop(columns=['datetime'], inplace=True)

quake_data.head()| latitude | longitude | depth | magnitude | date | time | year | month | day | hour | minute | second | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 40.260 | -124.233 | 2.0 | 5.1 | 1973-08-09 | 02:18:00 | 1973 | 8 | 9 | 2 | 18 | 0 |

| 1 | 40.998 | -120.447 | 5.0 | 5.0 | 1976-11-27 | 02:49:00 | 1976 | 11 | 27 | 2 | 49 | 0 |

| 2 | 38.478 | -119.287 | 5.0 | 5.0 | 1977-02-22 | 06:24:00 | 1977 | 2 | 22 | 6 | 24 | 0 |

| 3 | 38.814 | -119.811 | 14.0 | 5.2 | 1978-09-04 | 21:54:00 | 1978 | 9 | 4 | 21 | 54 | 0 |

| 4 | 38.224 | -119.348 | 11.0 | 5.2 | 1979-10-07 | 20:54:00 | 1979 | 10 | 7 | 20 | 54 | 0 |

In such a scenario, a datetime object can be easily created by using the pd.to_to_datetime method. The method combines date and time information in various columns and returns a datetime64 object.

# Create new datetime column

quake_data['new_datetime'] = pd.to_datetime(quake_data[["year", "month", "day", "hour", "minute", "second"]])

quake_data.head()| latitude | longitude | depth | magnitude | date | time | year | month | day | hour | minute | second | new_datetime | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 40.260 | -124.233 | 2.0 | 5.1 | 1973-08-09 | 02:18:00 | 1973 | 8 | 9 | 2 | 18 | 0 | 1973-08-09 02:18:00 |

| 1 | 40.998 | -120.447 | 5.0 | 5.0 | 1976-11-27 | 02:49:00 | 1976 | 11 | 27 | 2 | 49 | 0 | 1976-11-27 02:49:00 |

| 2 | 38.478 | -119.287 | 5.0 | 5.0 | 1977-02-22 | 06:24:00 | 1977 | 2 | 22 | 6 | 24 | 0 | 1977-02-22 06:24:00 |

| 3 | 38.814 | -119.811 | 14.0 | 5.2 | 1978-09-04 | 21:54:00 | 1978 | 9 | 4 | 21 | 54 | 0 | 1978-09-04 21:54:00 |

| 4 | 38.224 | -119.348 | 11.0 | 5.2 | 1979-10-07 | 20:54:00 | 1979 | 10 | 7 | 20 | 54 | 0 | 1979-10-07 20:54:00 |

# Check data types

quake_data.dtypeslatitude float64 longitude float64 depth float64 magnitude float64 date datetime64[ns] time object year int64 month int64 day int64 hour int64 minute int64 second int64 new_datetime datetime64[ns] dtype: object

Conclusion

In this part of the guide series, you have seen in detail how to work with Time Series data. Here, we briefly introduced date and time data types in native python and then focused on date/time data in Pandas. You have seen how date_range can be created with frequencies. We discussed various indexing and selection operations on time series data. Next, we introduced time series specific operations, such as resmaple(), shift(), tshift() and rolling(). We also briefly discussed time zones and operating on data with different time zones.

References

[1] Wes McKinney. 2017. Python for Data Analysis: Data Wrangling with Pandas, NumPy, and IPython (2nd. ed.). O'Reilly Media, Inc.

[2] Jake VanderPlas. 2016. Python Data Science Handbook: Essential Tools for Working with Data (1st. ed.). O'Reilly Media, Inc.