Coconuts and coconut products are an important commodity in the Tongan economy. Plantations, such as one in the town of Kolovai, have thousands of trees. Inventorying each of these trees by hand would require a lot of time and resources. Alternatively, tree health and location can be surveyed using remote sensing and deep learning.

In this notebook, we will train a deep learning model to detect palm trees in high-resolution imagery of the Kolovai region using the arcgis.learn module of the ArcGIS API for Python.

Export training data

The first step is to find imagery that shows Kolovai, Tonga, and has a fine enough spatial and spectral resolution to identify trees. Once we have the imagery, we'll export training samples to a format that can be used by a deep learning model.

Download the imagery

Accurate, high-resolution imagery is essential when extracting features. The model can only identify palm trees if the pixel size is small enough to distinguish individual palm canopies. Additionally, to calculate tree health, we'll need an image with spectral bands that allow us to generate a vegetation health index. We'll find and download the imagery for this study from OpenAerialMap, an open-source repository of high-resolution, multispectral imagery.

- Go to the OpenAerialMap website.

In the interactive map view, you can zoom, pan, and search for imagery available anywhere on the planet. The map is broken up into grids. When you point to a grid box, a number appears. This number indicates the number of available images for that box.

- In the search box, type Kolovai and press Enter. In the list of results, click Kolovai. The map zooms to Kolovai. This is a town on the main island of Tongatapu with a coconut plantation.

- If necessary, zoom out until you see the label for Kolovai on the map. Click the grid box directly over Kolovai.

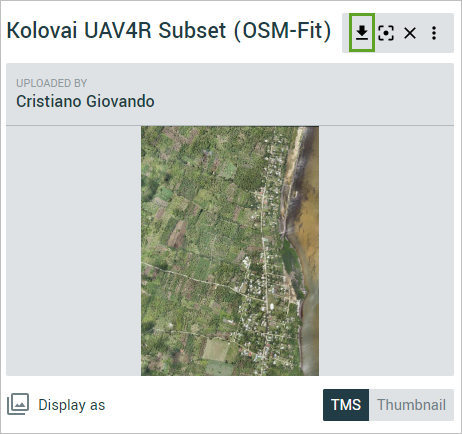

- In the side pane, click Kolovai UAV4R Subset (OSM-Fit) by Cristiano Giovando.

- Click the download button to download the raw .tif file. Save the image to a location of your choice.

Because of the file size, download may take a few minutes. The default name of the file is 5b1b6fb2-5024-4681-a175-9b667174f48c.

The spatial resolution of the Kolovai imagery is 9 cm, and it contains three bands: Red, Green, and Blue. This image is used as the 'Input Raster' when exporting the training data.
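Because the image has only Red, Green, and Blue bands, any vegetation health index would have to be computed from visible bands alone. The notebook does not name a specific index, so the following is only a minimal sketch, assuming the Visible Atmospherically Resistant Index (VARI) is a suitable choice. The function name and the sample pixel values are hypothetical.

```python
def vari(red: float, green: float, blue: float) -> float:
    """Visible Atmospherically Resistant Index for a single pixel.

    VARI = (green - red) / (green + red - blue)
    Returns 0.0 when the denominator is zero to avoid a division error.
    """
    denominator = green + red - blue
    if denominator == 0:
        return 0.0
    return (green - red) / denominator


# Hypothetical 8-bit pixel values, not taken from the Kolovai image.
green_canopy = vari(red=50, green=100, blue=30)
bare_soil = vari(red=120, green=100, blue=80)

print(round(green_canopy, 3))
print(round(bare_soil, 3))
```

Higher values indicate pixels where green dominates red, which is why an index of this kind can serve as a rough proxy for vegetation vigor.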

Get palm labels

# Connect to GIS

from arcgis.gis import GIS

gis = GIS("home")

The following feature layer collection contains two layers: labelled palm trees for part of the Kolovai region, and a mask that delineates the area where image chips will be created.

palm_label = gis.content.get('1bc2daa8960340ee92ea68ddb35ab4d4')

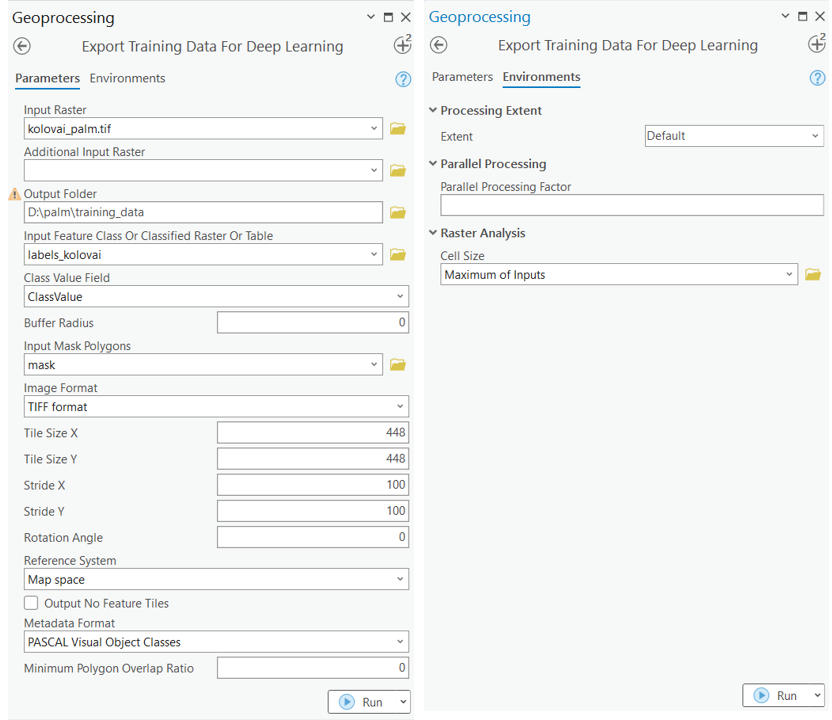

palm_label

Training data can be exported by using the Export Training Data For Deep Learning tool available in ArcGIS Pro. For this example, we prepared the training data in the 'PASCAL Visual Object Classes' format, using a 'chip_size' of 448px and a 'cell_size' of 0.085, in ArcGIS Pro. The 'Input Raster' and the 'Input Feature Class' have been made available to export the required training data. We have also provided the exported training data in the next section, if you wish to skip this step.
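To give a sense of what a PASCAL Visual Object Classes label looks like, the sketch below parses one annotation with the Python standard library. The XML is a hypothetical example in the usual PASCAL VOC layout; the exact fields written by the export tool may differ, and the file name, box coordinates, and the helper read_voc_boxes are illustrative assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical label for one 448 x 448 chip, in the usual PASCAL VOC layout.
SAMPLE_LABEL = """
<annotation>
  <filename>000000000.tif</filename>
  <size><width>448</width><height>448</height><depth>3</depth></size>
  <object>
    <name>1</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>110</xmax><ymax>130</ymax></bndbox>
  </object>
  <object>
    <name>1</name>
    <bndbox><xmin>200</xmin><ymin>210</ymin><xmax>300</xmax><ymax>320</ymax></bndbox>
  </object>
</annotation>
"""


def read_voc_boxes(xml_text):
    """Return (class_id, xmin, ymin, xmax, ymax) tuples from VOC XML text."""
    root = ET.fromstring(xml_text.strip())
    boxes = []
    for obj in root.iter("object"):
        class_id = obj.findtext("name")
        bndbox = obj.find("bndbox")
        coords = [float(bndbox.findtext(tag))
                  for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((class_id, *coords))
    return boxes


boxes = read_voc_boxes(SAMPLE_LABEL)
for box in boxes:
    print(box)
```

Each object carries a class identifier and a pixel-space bounding box, which is the information an object detection model needs for every chip.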

Train the DETReg model

Necessary imports

import os

import zipfile

from pathlib import Path

from arcgis.learn import prepare_data, DETReg

Get training data

training_data = gis.content.get('e7878a3fab0a400f9665d800972395f1')

training_data

filepath = training_data.download(file_name=training_data.name)

with zipfile.ZipFile(filepath, 'r') as zip_ref:
    zip_ref.extractall(Path(filepath).parent)

data_path = Path(os.path.splitext(filepath)[0])

data_path

WindowsPath('C:/Users/pri10421/AppData/Local/Temp/detecting_palm_trees_using_deep_learning')

The prepare_data() function takes the following parameters:

- path: path of the folder (or list of folders) containing the training data.

- batch_size: number of images the model trains on in each step of an epoch. This depends on the memory of your graphics card.

- chip_size: the same as the tile size used while exporting the dataset.

- class_mapping: maps each label ID to a class name.
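The chip_size and batch_size values translate into concrete quantities. As a rough sketch, assuming the 0.085 cell size is expressed in meters, the arithmetic below gives the ground footprint of one chip and the number of steps in an epoch. The helper names and the chip count of 45 are hypothetical, and whether the library drops a final partial batch is not covered here, so both cases are shown.

```python
import math


def chip_footprint_m(chip_size_px: int, cell_size_m: float) -> float:
    """Side length on the ground, in meters, of one square image chip."""
    return chip_size_px * cell_size_m


def steps_per_epoch(num_chips: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of batches needed to pass over the chips once."""
    if drop_last:
        # An incomplete final batch is skipped.
        return num_chips // batch_size
    return math.ceil(num_chips / batch_size)


side_m = chip_footprint_m(chip_size_px=448, cell_size_m=0.085)
print(f"Each chip covers about {side_m:.2f} m x {side_m:.2f} m")

# 45 is a hypothetical training-chip count, chosen only for illustration.
print(steps_per_epoch(num_chips=45, batch_size=8))
print(steps_per_epoch(num_chips=45, batch_size=8, drop_last=True))
```

A footprint of roughly 38 m per side means each chip can contain several palm canopies, and a small chip count means each epoch consists of only a handful of steps.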

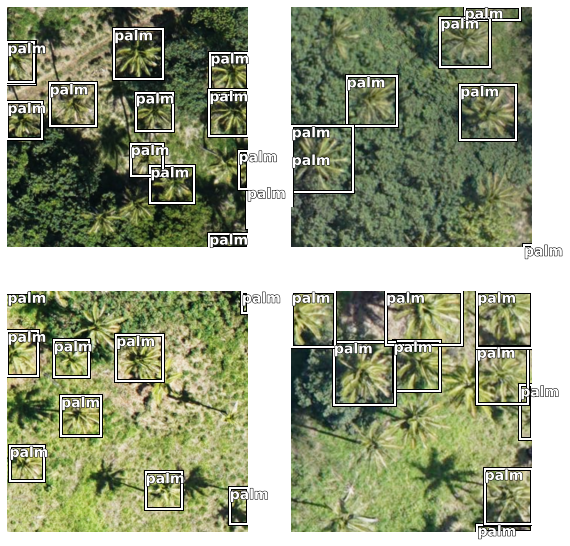

data = prepare_data(data_path, batch_size=8, chip_size=448, class_mapping={'1': 'palm'})

Visualize training data

To get a sense of what the training data looks like, the show_batch() method will randomly pick a few training chips and visualize them.

data.show_batch()

data.classes

['background', 'palm']

Load model architecture

DETReg pretrains the entire object detection network, including the object localization and embedding components. During pretraining, DETReg predicts object localizations to match the localizations from an unsupervised region proposal generator and simultaneously aligns the corresponding feature embeddings with embeddings from a self-supervised image encoder. With DETReg integrated into arcgis.learn, we can train a deep learning model on a small training set of roughly 50 images.

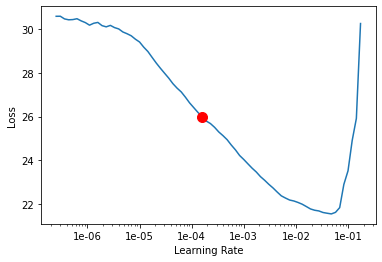

detreg_model = DETReg(data)

detreg_model.lr_find()

0.0001584893192461114

We will train the model for 100 epochs using the learning rate suggested by lr_find() (approximately 1.6e-4).

detreg_model.fit(epochs=100, lr=0.0001584893192461114)

| epoch | train_loss | valid_loss | time |
|---|---|---|---|
| 0 | 27.228205 | 21.371485 | 00:05 |
| 1 | 25.111946 | 19.466047 | 00:05 |
| 2 | 24.023035 | 19.080370 | 00:05 |
| 3 | 23.042629 | 18.077059 | 00:05 |
| 4 | 22.433546 | 17.609043 | 00:05 |
| 5 | 21.971066 | 17.477543 | 00:05 |
| 6 | 21.543530 | 17.539631 | 00:05 |
| 7 | 21.194422 | 16.902733 | 00:05 |
| 8 | 20.830856 | 16.439713 | 00:05 |
| 9 | 20.541531 | 16.078609 | 00:05 |
| 10 | 20.211823 | 15.211130 | 00:06 |
| 11 | 19.793268 | 15.069654 | 00:06 |
| 12 | 19.236372 | 17.090498 | 00:05 |
| 13 | 18.841959 | 17.125479 | 00:05 |
| 14 | 18.371445 | 14.950435 | 00:06 |
| 15 | 18.024601 | 15.850225 | 00:05 |
| 16 | 17.745529 | 15.506917 | 00:05 |
| 17 | 17.320305 | 14.082644 | 00:05 |
| 18 | 16.989925 | 14.379087 | 00:05 |
| 19 | 16.624977 | 14.170962 | 00:05 |
| 20 | 16.306162 | 14.255770 | 00:05 |
| 21 | 16.005445 | 14.173002 | 00:05 |
| 22 | 15.673724 | 13.711618 | 00:05 |
| 23 | 15.444551 | 13.366743 | 00:05 |
| 24 | 15.234297 | 12.996747 | 00:05 |
| 25 | 14.945394 | 12.927656 | 00:05 |
| 26 | 14.677604 | 11.008638 | 00:05 |
| 27 | 14.339445 | 11.804110 | 00:05 |
| 28 | 13.932788 | 10.150434 | 00:05 |
| 29 | 13.616297 | 10.496364 | 00:05 |
| 30 | 13.328455 | 9.226864 | 00:05 |
| 31 | 12.993720 | 9.059042 | 00:05 |
| 32 | 12.675693 | 8.921451 | 00:05 |
| 33 | 12.361510 | 8.618605 | 00:05 |
| 34 | 12.109262 | 8.187807 | 00:05 |
| 35 | 11.843448 | 8.006143 | 00:05 |
| 36 | 11.580905 | 7.839478 | 00:05 |
| 37 | 11.396687 | 8.260223 | 00:05 |
| 38 | 11.183165 | 8.255926 | 00:05 |
| 39 | 11.038754 | 8.601174 | 00:05 |
| 40 | 10.867735 | 8.106578 | 00:05 |
| 41 | 10.633966 | 7.477275 | 00:05 |
| 42 | 10.513922 | 7.425682 | 00:05 |
| 43 | 10.337910 | 7.741408 | 00:05 |
| 44 | 10.160110 | 7.209971 | 00:05 |
| 45 | 10.002899 | 7.223466 | 00:05 |
| 46 | 9.876152 | 6.967088 | 00:05 |
| 47 | 9.810612 | 6.901792 | 00:05 |
| 48 | 9.686474 | 7.057906 | 00:05 |
| 49 | 9.444827 | 6.781811 | 00:05 |
| 50 | 9.302439 | 6.902074 | 00:05 |
| 51 | 9.241193 | 6.639427 | 00:05 |
| 52 | 9.113286 | 6.572421 | 00:06 |
| 53 | 8.969366 | 6.207922 | 00:05 |
| 54 | 8.828690 | 6.044482 | 00:05 |
| 55 | 8.696461 | 6.046604 | 00:05 |
| 56 | 8.519960 | 5.802986 | 00:06 |
| 57 | 8.325386 | 5.654331 | 00:05 |
| 58 | 8.196836 | 5.668161 | 00:05 |
| 59 | 8.051723 | 5.606136 | 00:05 |
| 60 | 7.943853 | 5.424738 | 00:05 |
| 61 | 7.860847 | 5.230558 | 00:05 |
| 62 | 7.759712 | 5.601700 | 00:05 |
| 63 | 7.606007 | 5.102762 | 00:05 |
| 64 | 7.475172 | 5.111871 | 00:05 |
| 65 | 7.379089 | 4.985932 | 00:06 |
| 66 | 7.253640 | 5.120059 | 00:05 |
| 67 | 7.168691 | 4.926324 | 00:06 |
| 68 | 7.050176 | 4.991344 | 00:05 |
| 69 | 6.943080 | 4.863623 | 00:06 |
| 70 | 6.799272 | 4.917822 | 00:05 |
| 71 | 6.720453 | 4.866461 | 00:05 |
| 72 | 6.641360 | 5.344783 | 00:05 |
| 73 | 6.518205 | 5.011573 | 00:05 |
| 74 | 6.420632 | 4.989506 | 00:05 |
| 75 | 6.319081 | 4.769677 | 00:05 |
| 76 | 6.254847 | 4.674143 | 00:05 |
| 77 | 6.178284 | 4.656699 | 00:05 |
| 78 | 6.088454 | 5.013444 | 00:05 |
| 79 | 6.066946 | 4.740726 | 00:05 |
| 80 | 6.027186 | 4.595988 | 00:05 |
| 81 | 5.943243 | 4.629683 | 00:05 |
| 82 | 5.904345 | 4.553323 | 00:05 |
| 83 | 5.871964 | 4.530498 | 00:05 |
| 84 | 5.818938 | 4.541087 | 00:05 |
| 85 | 5.801239 | 4.449792 | 00:05 |
| 86 | 5.812011 | 4.436050 | 00:05 |
| 87 | 5.764916 | 4.414875 | 00:05 |
| 88 | 5.715053 | 4.432138 | 00:05 |
| 89 | 5.690810 | 4.457049 | 00:05 |
| 90 | 5.647766 | 4.383811 | 00:05 |
| 91 | 5.622376 | 4.369289 | 00:05 |
| 92 | 5.582300 | 4.385396 | 00:06 |
| 93 | 5.614410 | 4.412127 | 00:06 |
| 94 | 5.540917 | 4.408393 | 00:05 |
| 95 | 5.503606 | 4.390845 | 00:05 |
| 96 | 5.456139 | 4.370176 | 00:05 |
| 97 | 5.457549 | 4.359400 | 00:05 |
| 98 | 5.518940 | 4.356925 | 00:05 |
| 99 | 5.493828 | 4.356635 | 00:05 |

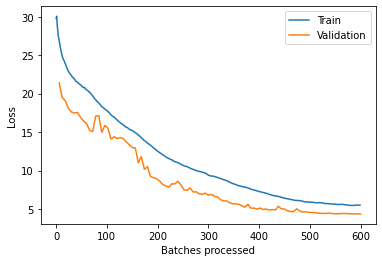

As we can see, both the training and validation losses were still decreasing, albeit slowly, at the final epoch (epoch 99), so there could be room for more training.

detreg_model.plot_losses()
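One way to put a number on "room for more training" is to look at how much the validation loss moved over the last few epochs. The sketch below does this with the validation losses for epochs 90 to 99, copied from the training log. It is plain Python for illustration only and does not use any arcgis.learn functionality.

```python
# Validation losses for the final ten epochs, copied from the training log.
valid_loss_tail = {
    90: 4.383811, 91: 4.369289, 92: 4.385396, 93: 4.412127, 94: 4.408393,
    95: 4.390845, 96: 4.370176, 97: 4.359400, 98: 4.356925, 99: 4.356635,
}

# Epoch with the lowest validation loss in this window.
best_epoch = min(valid_loss_tail, key=valid_loss_tail.get)

# Relative improvement between the first and last epoch of the window.
first_loss = valid_loss_tail[90]
last_loss = valid_loss_tail[99]
relative_drop = (first_loss - last_loss) / first_loss

print(f"Lowest validation loss at epoch {best_epoch}")
print(f"Relative drop over epochs 90-99: {relative_drop:.2%}")
```

The lowest value falls on the final epoch, but the drop across the window is well under one percent, which suggests that any gains from further training would likely be gradual.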

Visualize results in validation set

It is good practice to compare the model's results with the ground truth. The code below picks random samples and shows the ground truth and the model's predictions side by side, which lets us preview the results of the model we trained.

detreg_model.show_results(thresh=0.4)
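The thresh argument is assumed here to act as a minimum confidence score, so that lower-scoring predictions are not drawn. As a minimal sketch of that idea (the Detection type, the sample scores, and the use of an inclusive comparison are all hypothetical, not the library's implementation):

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    label: str
    score: float                             # model confidence between 0 and 1
    box: Tuple[float, float, float, float]   # (xmin, ymin, xmax, ymax) in pixels


def filter_by_score(detections: List[Detection], thresh: float) -> List[Detection]:
    """Keep only detections whose confidence is at least `thresh`."""
    return [d for d in detections if d.score >= thresh]


# Hypothetical predictions for one chip.
predictions = [
    Detection("palm", 0.92, (10, 20, 110, 130)),
    Detection("palm", 0.41, (200, 210, 300, 320)),
    Detection("palm", 0.15, (5, 300, 60, 360)),
]

kept = filter_by_score(predictions, thresh=0.4)
print(len(kept), "of", len(predictions), "detections kept")
```

Raising the threshold removes more low-confidence boxes at the risk of hiding real palms, while lowering it does the opposite.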

Accuracy assessment

arcgis.learn provides the average_precision_score() method that computes the average precision of the model on the validation set for each class.
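To illustrate what an average precision score measures, the sketch below computes it for a single class in a common PASCAL VOC-like way: detections are ranked by score, each is matched to the best unmatched ground-truth box by intersection over union (IoU), and the area under the precision-recall curve is summed. This is not the arcgis.learn implementation; the IoU threshold of 0.5, the matching rule, and the sample boxes are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thresh=0.5):
    """detections: list of (score, box); ground_truth: list of boxes."""
    if not ground_truth:
        return 0.0
    matched, hits = set(), []
    # Visit detections from most to least confident.
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt_box in enumerate(ground_truth):
            overlap = iou(box, gt_box)
            if idx not in matched and overlap > best_iou:
                best_iou, best_idx = overlap, idx
        is_hit = best_idx is not None and best_iou >= iou_thresh
        if is_hit:
            matched.add(best_idx)
        hits.append(is_hit)
    # Precision and recall after each ranked detection.
    precisions, recalls, true_pos = [], [], 0
    for rank, is_hit in enumerate(hits, start=1):
        true_pos += is_hit
        precisions.append(true_pos / rank)
        recalls.append(true_pos / len(ground_truth))
    # Make precision non-increasing, then sum the area under the curve.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Hypothetical chip: three labelled palms, two found, one false alarm.
truth = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
found = [(0.9, (0, 0, 10, 10)), (0.8, (100, 100, 110, 110)), (0.7, (20, 20, 30, 30))]
ap = average_precision(found, truth)
print(round(ap, 4))
```

A score close to 1 means that nearly every labelled palm is found and that confident predictions are rarely false alarms.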

detreg_model.average_precision_score()

{'palm': 0.9461714471808662}

Save the model

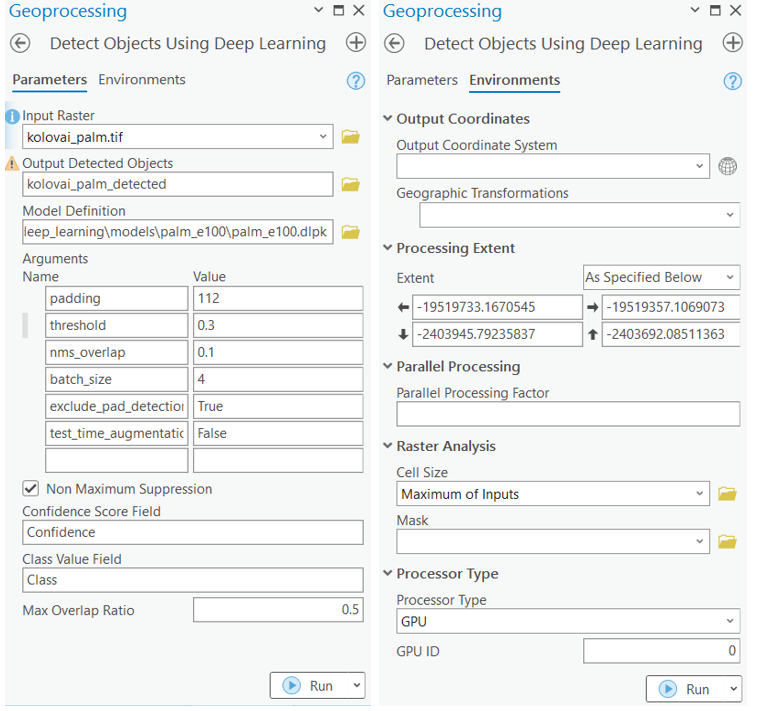

We will save the trained model as a 'Deep Learning Package' ('.dlpk' format). The Deep Learning package is the standard format used to deploy deep learning models on the ArcGIS platform.

We will use the save() method to save the trained model. By default, it will be saved to the 'models' sub-folder within our training data folder.

detreg_model.save('palm_e100', publish=True)

Computing model metrics...

Published DLPK Item Id: 9406080ccb6b499b9e2651c7b36f969d

WindowsPath('C:/Users/pri10421/AppData/Local/Temp/detecting_palm_trees_using_deep_learning/models/palm_e100')

Detect palm trees with the trained deep learning model

The bulk of the work in extracting features from imagery is preparing the data, creating training samples, and training the model. Now that these steps have been completed, we'll use the trained model to detect palm trees in the imagery. Object detection typically requires multiple tests to achieve the best results, because several parameters can be adjusted to improve the model's performance. To test these parameters quickly, we'll first try detecting trees in a small section of the image. Once we're satisfied with the results, we'll extend the detection to the full image.

fc = gis.content.get('4ca014288f834385a7b97a2c4534b57d')

fc

Conclusion

In this notebook, we saw how to use the DETReg deep learning model and high-resolution imagery to detect palm trees. This can be an important task for monitoring and conservation purposes. We used only a handful of images as training data and, with the DETReg model available in arcgis.learn, trained a model that reached an average precision of about 0.95 on the validation set. We trained the model for 100 epochs and then deployed it to detect the palm trees in the Kolovai imagery. The results are highly accurate, and almost all the palm trees in the region have been detected.