Prerequisites

- Please refer to the prerequisites section in our guide for more information. This sample demonstrates how to export training data and run model inference using ArcGIS Pro. Alternatively, both steps can be done using ArcGIS Image Server.

- If you have already exported training samples using ArcGIS Pro, you can jump straight to the training section. The saved model can also be imported into ArcGIS Pro directly.

- This notebook requires ArcGIS API for Python version 1.8.1 or above.

Introduction

This notebook showcases an approach to performing land cover classification using sparse training data and multispectral imagery. We will demonstrate the utility of two parameters, imagery_type and ignore_classes, available in the arcgis.learn module for training.

1) imagery_type parameter: The prepare_data function allows us to use imagery with any number of bands (4-band NAIP imagery in our case). This can be done by passing the imagery_type parameter. For more details, see here.

2) ignore_classes parameter: The Segmentation or Pixel Classification model allows us to ignore one/more classes from the training data while training the model. We will ignore the 'NoData' class in order to train our model on sparse data.

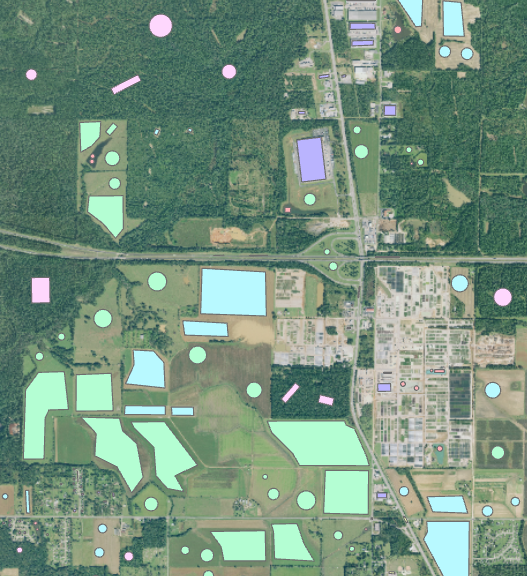

The image below shows a subset of our training data.

Note: The training data does not cover the whole image (full coverage was a requirement in versions older than 1.8.1), hence it is called sparse training data.

This notebook demonstrates the capabilities of ArcGIS API for Python to classify 4-band NAIP imagery given sparse training data.

Necessary imports

import os

from pathlib import Path

from arcgis.gis import GIS

from arcgis.learn import prepare_data, UnetClassifier

Connect to your GIS

# Connect to GIS

gis = GIS('home')

Export training data

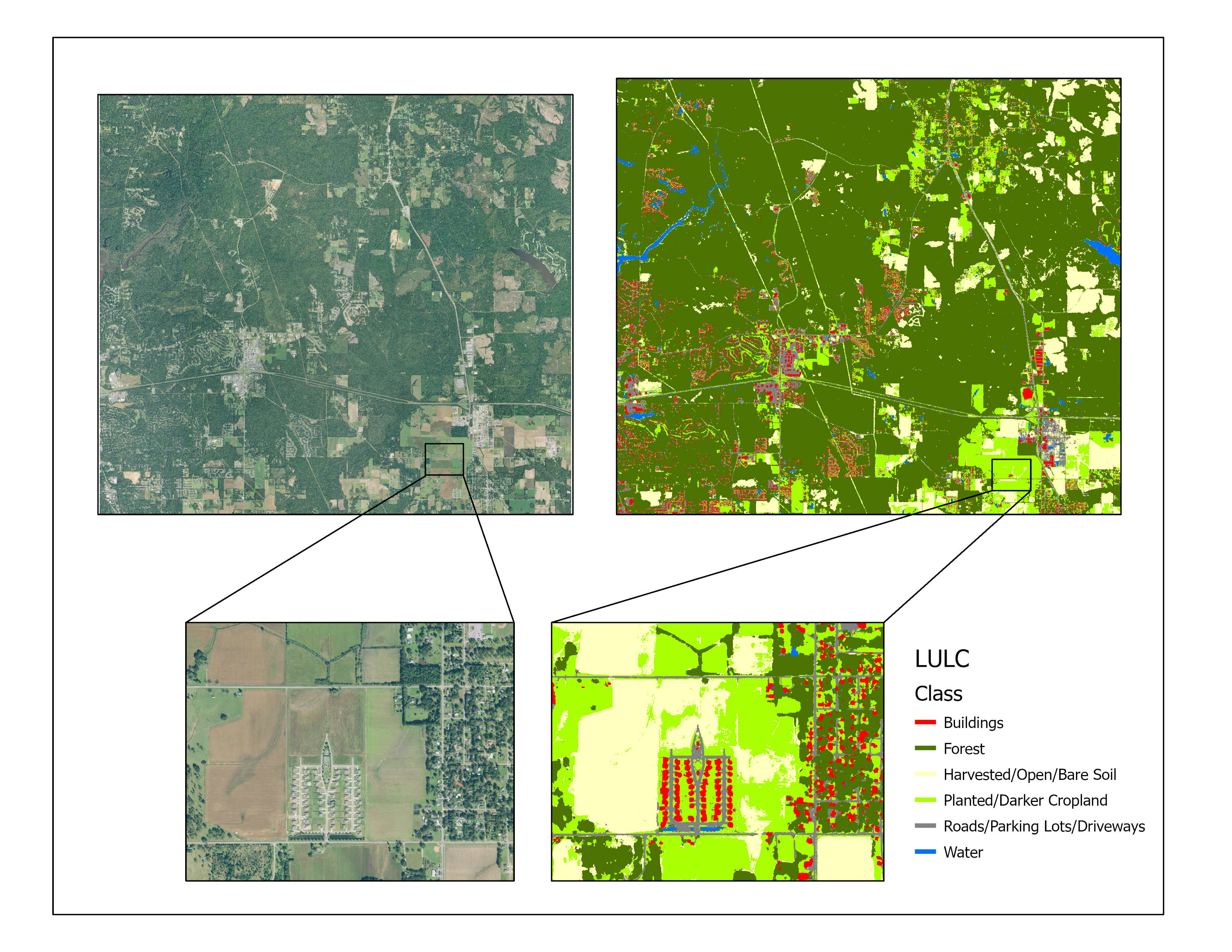

We have labelled a few training polygons in the northern regions of Alabama state. The training data has polygons labelled for six land cover classes: 'buildings', 'roads and parking lots', 'water', 'harvested, open and bare lands', 'forest' and 'planted crops'. We need to classify NAIP imagery against these land cover classes.

The feature class representing our labelled data contains an attribute field classvalue which represents the class of each labelled polygon. It is important to note here that all the pixels which are not labelled under any class will be mapped to 0, representing "NoData" class after exporting the data.

This is how it looks:

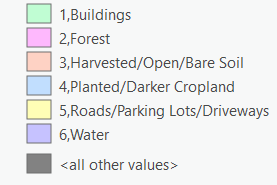

Training data can be exported by using the Export Training Data For Deep Learning tool available in ArcGIS Pro as well as ArcGIS Image Server.

- Input Raster: NAIP imagery

- Input Feature Class Or Classified Raster: feature layer containing labeled polygons

- Class Value Field: field containing the class value for each class

- Tile Size X & Tile Size Y: 256

- Stride X & Stride Y: 64

- Meta Data Format: 'Classified Tiles', as we are training a segmentation model, which in this case is UnetClassifier.

- Environments: Set optimum Cell Size and Processing Extent.
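To make the tile size and stride settings concrete, the sketch below counts how many 256-pixel tiles fit along one axis when the window advances by 64 pixels, and how much neighbouring tiles overlap. The helper names are hypothetical, and dropping partial tiles at the raster edge is an assumption of this sketch, not a description of how the export tool behaves.

```python
def tile_origins(length, tile_size=256, stride=64):
    """Start offsets of tiles that fit fully inside `length` pixels.

    Assumption: partial tiles at the edge are simply dropped.
    """
    if length < tile_size:
        return []
    return list(range(0, length - tile_size + 1, stride))


def overlap_fraction(tile_size=256, stride=64):
    """Fraction of a tile shared with the next tile along one axis."""
    return (tile_size - stride) / tile_size


# A hypothetical raster that is 1024 pixels wide.
origins = tile_origins(1024)
print(len(origins), origins[:4])
print(overlap_fraction())
```

With a stride of 64, consecutive tiles share 192 of their 256 pixels along each axis, so a small labelled area contributes to many training chips.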

The labels to export data using Export training data tool are available as a layer package.

labels = gis.content.get('eeec636c856740c6a3e2c185dda6e735')

labels

Train the model

We will be using U-Net, one of the well-recognized image segmentation algorithms, for our land cover classification. U-Net is designed like an auto-encoder. It has an encoding path (“contracting”) paired with a decoding path (“expanding”) which gives it the “U” shape. However, in contrast to the autoencoder, U-Net predicts a pixelwise segmentation map of the input image rather than classifying the input image as a whole. For each pixel in the original image, it asks the question: “To which class does this pixel belong?”. U-Net passes the feature maps from each level of the contracting path over to the analogous level in the expanding path. These are similar to residual connections in a ResNet type model, and allow the classifier to consider features at various scales and complexities to make its decision.

Prepare data

We will specify the path to our training data and a few hyperparameters.

- path: path of the folder containing training data.

- batch_size: Number of images your model will train on in each step inside an epoch. It directly depends on the memory of your graphics card. 8 worked for us on an 11 GB GPU.

- imagery_type: A mandatory input to enable a model for multispectral data processing. It can be "landsat8", "sentinel2", "naip", "ms" or "multispectral".

training_data = gis.content.get('08ccd71f862940bda1d8350c8cc91a47')

training_datafilepath = training_data.download(file_name=training_data.name)import zipfile

with zipfile.ZipFile(filepath, 'r') as zip_ref:

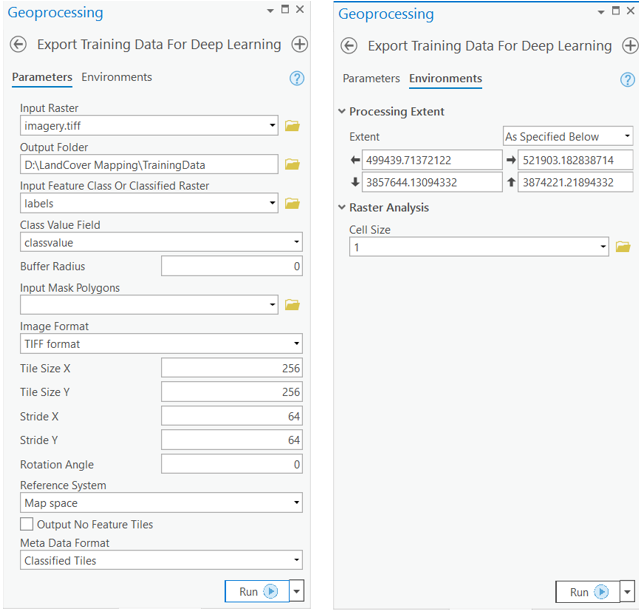

zip_ref.extractall(Path(filepath).parent)data_path = Path(os.path.join(os.path.splitext(filepath)[0]))data = prepare_data(data_path, batch_size=8, imagery_type='naip')Visualize training data

To get a sense of what the training data looks like, the show_batch() method randomly picks a few training chips and visualizes them.

rows: Number of rows to visualize.

data.show_batch(rows=3)

Load model architecture

As we now know, the 'NoData' class is mapped to '0', and we want to train our model only on the six classes we labelled. We can therefore pass the 'NoData' class in the ignore_classes parameter while creating the U-Net model. This parameter makes the model skip all pixels belonging to the listed classes; in other words, the model is not trained on those classes.
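Conceptually, ignoring a class means leaving its pixels out of the computation. The sketch below shows the idea for a plain pixel accuracy over flattened label lists. It is an illustration only: the function name and the toy labels are made up, and it makes no claim about how arcgis.learn implements ignore_classes internally.

```python
def masked_accuracy(predicted, truth, ignore_classes=(0,)):
    """Pixel accuracy computed only over pixels whose true class is not ignored."""
    ignored = set(ignore_classes)
    kept = [(p, t) for p, t in zip(predicted, truth) if t not in ignored]
    if not kept:
        return None  # nothing labelled, so accuracy is undefined
    correct = sum(1 for p, t in kept if p == t)
    return correct / len(kept)


# Toy labels: 0 marks unlabelled ('NoData') pixels.
truth = [0, 0, 1, 1, 2, 0, 3, 3]
predicted = [2, 1, 1, 1, 2, 3, 3, 1]

print(masked_accuracy(predicted, truth))                     # labelled pixels only
print(masked_accuracy(predicted, truth, ignore_classes=()))  # every pixel counted
```

Counting the unlabelled pixels would penalize the model for predicting a real class where no label exists, which is exactly the situation sparse training data creates.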

# Create U-Net Model

unet = UnetClassifier(data, backbone='resnet34', ignore_classes=[0])

Find an optimal learning rate

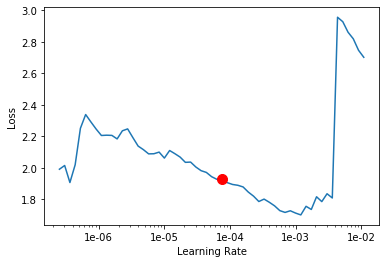

Learning rate is one of the most important hyperparameters in model training. ArcGIS API for Python provides a learning rate finder that automatically suggests a suitable learning rate for you.

unet.lr_find()

7.585775750291836e-05
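The text does not describe how lr_find() arrives at its suggestion. As a rough intuition, the sketch below shows one common heuristic for a learning rate range test: try exponentially spaced learning rates, record the loss at each, and pick the rate at which the loss drops fastest. The function names and the loss values are synthetic and purely illustrative; this is not the library's implementation.

```python
import math


def exponential_lrs(start, end, steps):
    """Learning rates spaced evenly on a log scale from `start` to `end`."""
    ratio = (end / start) ** (1 / (steps - 1))
    return [start * ratio ** i for i in range(steps)]


def steepest_descent_lr(lrs, losses):
    """Learning rate at the start of the interval where loss falls fastest."""
    best_index, best_slope = None, 0.0
    for i in range(1, len(lrs)):
        step = math.log10(lrs[i]) - math.log10(lrs[i - 1])
        slope = (losses[i] - losses[i - 1]) / step
        if slope < best_slope:
            best_index, best_slope = i - 1, slope
    return None if best_index is None else lrs[best_index]


# Synthetic losses: slow improvement, a steep drop, then divergence.
lrs = exponential_lrs(1e-6, 1e-1, 6)
losses = [1.00, 0.98, 0.90, 0.60, 0.55, 2.00]
suggested = steepest_descent_lr(lrs, losses)
print(suggested)
```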

Fit the model

We will train the model for a few epochs with the learning rate we have found. For the sake of time, we can start with 10 epochs.

unet.fit(10, 7.585775750291836e-05)

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.204858 | 0.139798 | 0.951742 | 15:52 |
| 1 | 0.217454 | 0.129437 | 0.962800 | 15:55 |
| 2 | 0.180911 | 0.104669 | 0.971880 | 16:39 |
| 3 | 0.112541 | 0.087013 | 0.977520 | 16:04 |
| 4 | 0.189810 | 0.090733 | 0.973566 | 15:49 |
| 5 | 0.117280 | 0.081267 | 0.976252 | 15:48 |
| 6 | 0.111068 | 0.069316 | 0.981466 | 15:47 |
| 7 | 0.113275 | 0.090214 | 0.980469 | 15:47 |
| 8 | 0.093983 | 0.070652 | 0.982660 | 15:49 |
| 9 | 0.070856 | 0.067359 | 0.984696 | 15:50 |

Here, with only 10 epochs, we can see reasonable results — both training and validation losses have gone down considerably, indicating that the model is learning to classify land cover classes.

Accuracy assessment

As we have 6 different classes for this classification task, we need to assess accuracy for each of them. For that, ArcGIS API for Python provides the per_class_metrics function, which calculates precision, recall and F1 score for each class.

unet.per_class_metrics()

| | Buildings | Forest | Harvested/Open/Bare Soil | Planted/Darker Cropland | Roads/Parking Lots/Driveways | Water |
|---|---|---|---|---|---|---|
| precision | 0.910002 | 0.998542 | 0.986803 | 0.975038 | 0.951258 | 0.991753 |
| recall | 0.950265 | 0.999001 | 0.971385 | 0.987470 | 0.935559 | 0.978942 |
| f1_score | 0.929698 | 0.998772 | 0.979033 | 0.981215 | 0.943344 | 0.985306 |

Here, we can see that the precision and recall values for each of the 6 classes are high (all above 0.91), with the model trained for just 10 epochs.
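The f1_score row is consistent with the harmonic mean of the precision and recall rows. As a quick check, the sketch below recomputes it for two of the reported classes using the standard F1 formula. The helper is written here for illustration and is not part of arcgis.learn.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported f1_score) copied from the table above.
reported = {
    "Buildings": (0.910002, 0.950265, 0.929698),
    "Water": (0.991753, 0.978942, 0.985306),
}

for name, (precision, recall, f1) in reported.items():
    print(name, round(f1_score(precision, recall), 6), f1)
```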

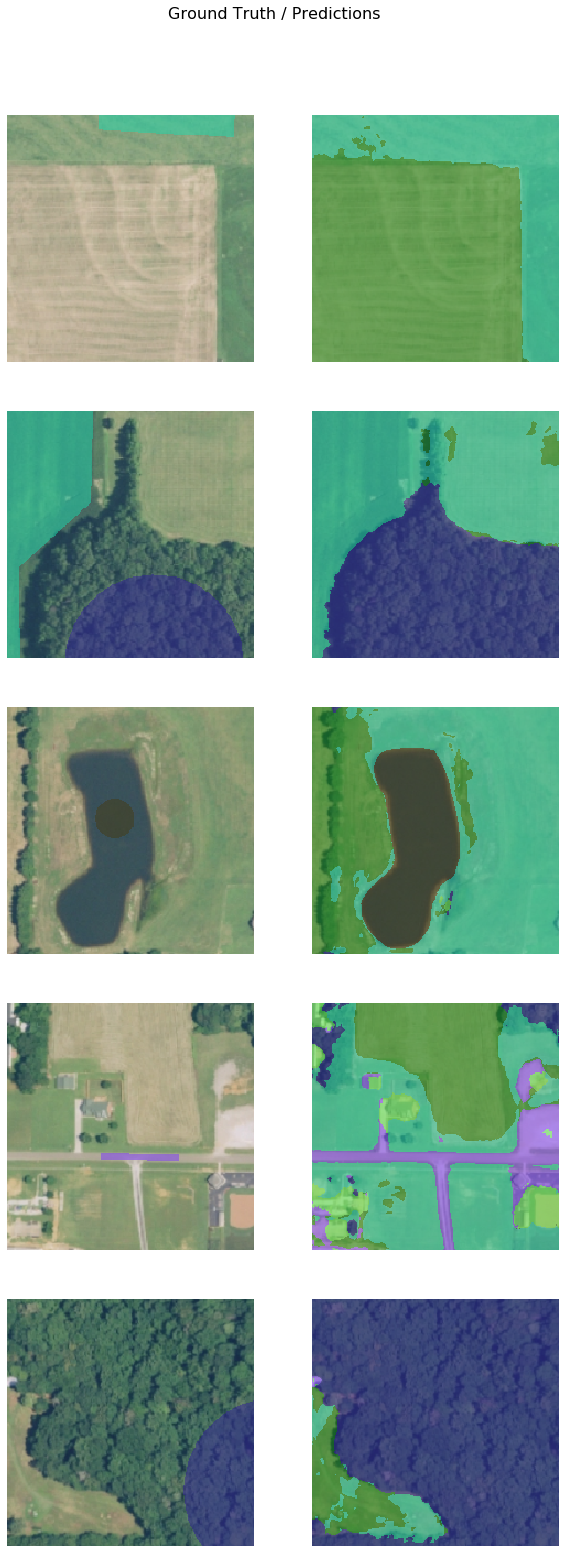

Visualize results in validation set

It's good practice to compare the model's results vis-à-vis the ground truth. The code below picks random samples and shows the ground truth and model predictions side by side. This enables us to preview the results of the model within the notebook.

unet.show_results()

Save the model

We will save the model which we trained as a 'Deep Learning Package' ('.dlpk' format). Deep Learning package is the standard format used to deploy deep learning models on the ArcGIS platform.

We will use the save() method to save the trained model. By default, it will be saved to the 'models' sub-folder within our training data folder.

unet.save('10e')

Deployment and inference

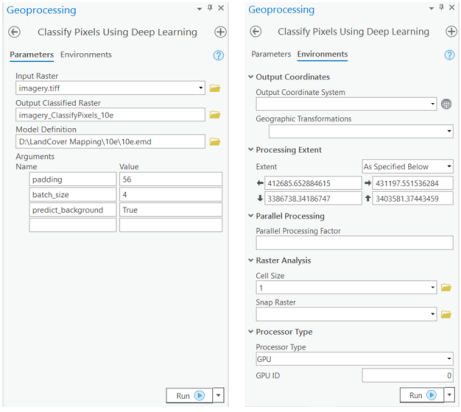

In this step, we will generate a classified raster using the 'Classify Pixels Using Deep Learning' tool available in both ArcGIS Pro and ArcGIS Enterprise.

- Input Raster: The raster layer you want to classify.

- Model Definition: The '.emd' file located inside the saved model in the 'models' folder.

- Padding: The 'Input Raster' is tiled, and the deep learning model classifies each tile separately before producing the final 'Output Classified Raster'. This may lead to unwanted artifacts along the edges of each tile, as the model has little context there to predict accurately. Padding, as the name suggests, supplies some extra information along the tile edges, which helps the model predict better.

- Cell Size: Should be close to the size used to train the model. This was specified in the Export training data step.

- Processor Type: Controls whether the system's 'GPU' or 'CPU' is used to classify pixels. By default, the 'GPU' is used if available.
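One way to picture padding is that the model still sees a full tile, but only the central part of its prediction is kept, so adjacent tiles must overlap by twice the padding. The sketch below works through that arithmetic. The padding value of 64 and the helper names are hypothetical, and the exact way the tool combines tiles is an assumption of this sketch.

```python
def kept_window(tile_size, padding):
    """Start and stop offsets of the central region kept from each tile."""
    if padding < 0 or 2 * padding >= tile_size:
        raise ValueError("padding must leave a non-empty central region")
    return padding, tile_size - padding


def effective_stride(tile_size, padding):
    """Distance between tile origins so that kept regions sit edge to edge."""
    start, stop = kept_window(tile_size, padding)
    return stop - start


# 256 matches the tile size used at export; 64 is a hypothetical padding.
print(kept_window(256, 64))
print(effective_stride(256, 64))
```

Under this picture, larger padding gives every kept pixel more surrounding context at the cost of classifying more tiles for the same raster.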

The output of this tool is a classified raster. Below, you can see the NAIP image and its classified raster produced by the model we trained earlier.

Conclusion

This sample showcases how pixel classification models like UnetClassifier can be trained using sparsely labeled data. Additionally, the sample shows how you can use multispectral imagery containing more than just the RGB bands to train the model.