Introduction

High-resolution remote sensing images provide useful spatial information for plot delineation; however, manual processing is time-consuming. Automatically extracting visible cadastral boundaries combined with (legal) adjudication and incorporation of local knowledge from human operators offers the potential to improve current cadastral mapping approaches in terms of time, cost, accuracy, and acceptance.

This sample shows how the ArcGIS API for Python can be used to train a deep learning edge detection model that extracts parcels from satellite imagery, enabling more efficient approaches to cadastral mapping.

This workflow has three steps:

- Export training data

- Train a model

- Deploy model and extract land parcels

Necessary imports

import os, zipfile

from pathlib import Path

import arcgis

from arcgis import GIS

from arcgis.learn import BDCNEdgeDetector, HEDEdgeDetector, prepare_data

Connect to your GIS

gis = GIS("home")

ent_gis = GIS('https://pythonapi.playground.esri.com/portal')

Export land parcel boundaries data

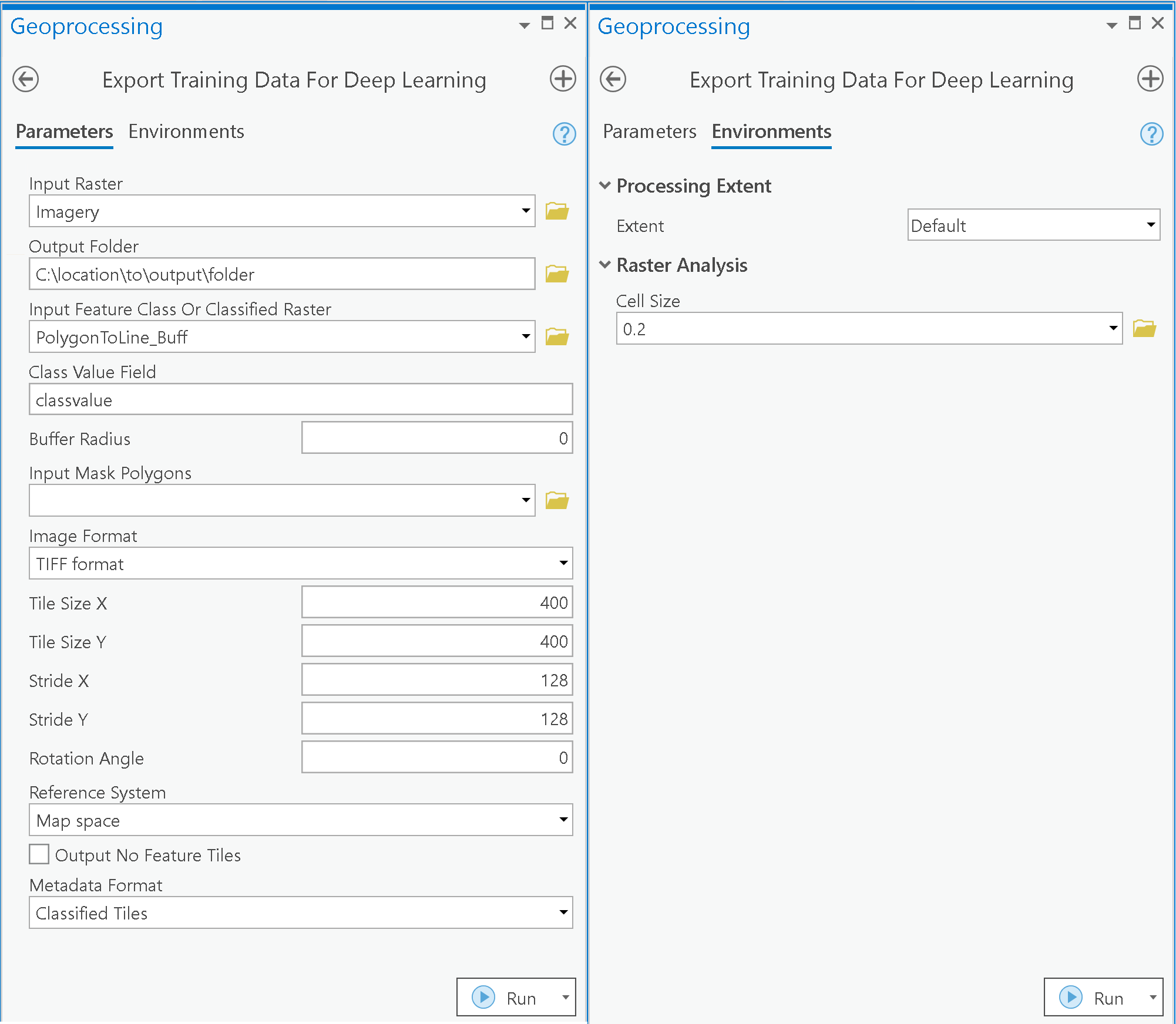

Training data can be exported using the Export Training Data For Deep Learning tool, available in ArcGIS Pro as well as ArcGIS Image Server. The training data consisted of a buffered polyline feature class with a 'class' attribute. For this example, we prepared training data in the Classified Tiles format using a chip_size of 400 px and a cell_size of 20 cm in ArcGIS Pro.

- Input Raster: Esri World Imagery

- Input Feature Class or Classified Raster: buffered polyline with class attribute

- Class Value Field: field in the attributes containing class

- Tile Size X & Tile Size Y: 400

- Stride X & Stride Y: 128

- Reference System: Map space

- Meta Data Format: Classified tiles

- Environments: Set optimum Cell Size, Processing Extent
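To get a feel for what these settings imply, the sketch below works out how much neighbouring chips overlap and how much ground one chip covers. It assumes chips start at pixel 0, step by the stride, and are kept only when they fit entirely inside the raster; the export tool may treat raster edges differently. The helper name `tile_origins` and the 1000-pixel raster width are hypothetical and used only for illustration.

```python
def tile_origins(extent_px, tile=400, stride=128):
    """Pixel offsets of every full tile along one axis (illustrative helper)."""
    if extent_px < tile:
        return []
    return list(range(0, extent_px - tile + 1, stride))


TILE_PX = 400      # Tile Size X / Y from the export settings
STRIDE_PX = 128    # Stride X / Y from the export settings
CELL_SIZE_CM = 20  # cell size used for the export

# Pixels shared by two neighbouring tiles along one axis.
overlap_px = TILE_PX - STRIDE_PX
# Ground distance covered by one tile edge, in metres.
tile_ground_m = TILE_PX * CELL_SIZE_CM / 100

# Hypothetical raster that is 1000 pixels wide.
origins = tile_origins(1000, TILE_PX, STRIDE_PX)

print(f"overlap: {overlap_px} px, tile edge: {tile_ground_m} m")
print(f"tile origins along a 1000 px axis: {origins}")
```

With a stride much smaller than the tile size, every boundary appears in several chips, which increases the number of training samples drawn from a small labelled area.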

The raster and parcel data used for exporting the training dataset are provided below.

training_area_raster = ent_gis.content.get('4f9d56b58e354aac9ed043355a4fa870')

training_area_raster

parcel_training_polygon = gis.content.get('ac0e639d6e9b43328605683efb37ff56')

parcel_training_polygon

arcpy.ia.ExportTrainingDataForDeepLearning("Imagery", r"C:\sample\Data\Training Data 400px 20cm", "land_parcels_training_buffered.shp", "TIFF", 400, 400, 128, 128, "ONLY_TILES_WITH_FEATURES", "Classified_Tiles", 0, None, 0, 0, "MAP_SPACE", "NO_BLACKEN", "Fixed_Size")

This will create all the necessary files needed for the next step in the 'Output Folder', and we will now call it our training data.

Prepare data

Alternatively, we have provided a subset of the training data containing a few samples, along with the parcel training polygon used for exporting the data. You can use this data directly to run the experiments.

training_data = gis.content.get('ab003694c99f4484a2d30df53f8b4d03')

training_data

filepath = training_data.download(file_name=training_data.name)

import zipfile

with zipfile.ZipFile(filepath, 'r') as zip_ref:
    zip_ref.extractall(Path(filepath).parent)

data_path = Path(os.path.join(os.path.splitext(filepath)[0]))

We specify the path to our training data and a few parameters.

- path: path of the folder containing the training data.

- chip_size: the same value that was specified while exporting the training data.

- batch_size: number of images the model trains on in each step within an epoch; it depends directly on the memory of your graphics card.
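The link between batch_size and the number of training steps in an epoch can be sketched as follows. The chip count of 500 is made up, and the 10% validation share reflects what we understand to be the default `val_split_pct` of `prepare_data`; treat both as assumptions. Whether a final partial batch is counted depends on the data loader, so the figures are estimates.

```python
import math


def steps_per_epoch(n_chips, batch_size, val_split_pct=0.1):
    """Estimate training steps per epoch after holding out a validation share."""
    n_val = int(n_chips * val_split_pct)   # chips held out for validation
    n_train = n_chips - n_val              # chips actually used for training
    return math.ceil(n_train / batch_size)


# Hypothetical dataset of 500 exported chips.
for batch_size in (2, 4, 8):
    print(f"batch_size={batch_size}: {steps_per_epoch(500, batch_size)} steps per epoch")
```

A smaller batch size fits on a GPU with less memory, at the cost of more steps per epoch.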

data = prepare_data(data_path, batch_size=2)

Visualize a few samples from your training data

The code below shows a few samples of our data with the same symbology as in ArcGIS Pro.

- rows: number of rows we want to see the results for.

- alpha: controls the opacity of the labels (classified imagery) over the underlying imagery.
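As a rough illustration of what an opacity value means, the sketch below applies the standard alpha compositing formula to a single pixel value. It is not the library's plotting code, which this sample does not show; the function name `blend` and the pixel values are hypothetical.

```python
def blend(image_value, label_value, alpha):
    """Alpha-composite one label channel value over one image channel value."""
    return alpha * label_value + (1 - alpha) * image_value


# Hypothetical 8-bit values: a mid-grey image pixel under a white label pixel.
for alpha in (0.0, 0.5, 1.0):
    print(f"alpha={alpha}: blended value = {blend(100, 255, alpha)}")
```

With alpha set to 1 the label fully covers the imagery; lower values let the imagery show through.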

data.show_batch(alpha=1)

Part 1 - Model training

Load BDCN or HED edge detector model architecture

There are two edge detection models available in arcgis.learn: HEDEdgeDetector and BDCNEdgeDetector. If a backbone is not specified, the model is loaded by default on a pretrained ResNet-type backbone.

#model = HEDEdgeDetector(data, backbone="vgg19")

#or

model = BDCNEdgeDetector(data, backbone="vgg19")

List of supported backbones that can be used during training:

model.supported_backbones

['resnet18', 'resnet34', 'resnet50', 'resnet101', 'resnet152', 'vgg11', 'vgg11_bn', 'vgg13', 'vgg13_bn', 'vgg16', 'vgg16_bn', 'vgg19', 'vgg19_bn', 'timm:cspdarknet53', 'timm:cspresnet50', 'timm:cspresnext50', 'timm:densenet121', 'timm:densenet161', 'timm:densenet169', 'timm:densenet201', 'timm:densenetblur121d', 'timm:dla102', 'timm:dla102x', 'timm:dla102x2', 'timm:dla169', 'timm:dla34', 'timm:dla46_c', 'timm:dla46x_c', 'timm:dla60', 'timm:dla60_res2net', 'timm:dla60_res2next', 'timm:dla60x', 'timm:dla60x_c', 'timm:dm_nfnet_f0', 'timm:dm_nfnet_f1', 'timm:dm_nfnet_f2', 'timm:dm_nfnet_f3', 'timm:dm_nfnet_f4', 'timm:dm_nfnet_f5', 'timm:dm_nfnet_f6', 'timm:eca_nfnet_l0', 'timm:eca_nfnet_l1', 'timm:eca_nfnet_l2', 'timm:ecaresnet101d', 'timm:ecaresnet101d_pruned', 'timm:ecaresnet269d', 'timm:ecaresnet26t', 'timm:ecaresnet50d', 'timm:ecaresnet50d_pruned', 'timm:ecaresnet50t', 'timm:ecaresnetlight', 'timm:efficientnet_b0', 'timm:efficientnet_b1', 'timm:efficientnet_b1_pruned', 'timm:efficientnet_b2', 'timm:efficientnet_b2_pruned', 'timm:efficientnet_b3', 'timm:efficientnet_b3_pruned', 'timm:efficientnet_b4', 'timm:efficientnet_el', 'timm:efficientnet_el_pruned', 'timm:efficientnet_em', 'timm:efficientnet_es', 'timm:efficientnet_es_pruned', 'timm:efficientnet_lite0', 'timm:efficientnetv2_rw_m', 'timm:efficientnetv2_rw_s', 'timm:ese_vovnet19b_dw', 'timm:ese_vovnet39b', 'timm:fbnetc_100', 'timm:gernet_l', 'timm:gernet_m', 'timm:gernet_s', 'timm:gluon_resnet101_v1b', 'timm:gluon_resnet101_v1c', 'timm:gluon_resnet101_v1d', 'timm:gluon_resnet101_v1s', 'timm:gluon_resnet152_v1b', 'timm:gluon_resnet152_v1c', 'timm:gluon_resnet152_v1d', 'timm:gluon_resnet152_v1s', 'timm:gluon_resnet18_v1b', 'timm:gluon_resnet34_v1b', 'timm:gluon_resnet50_v1b', 'timm:gluon_resnet50_v1c', 'timm:gluon_resnet50_v1d', 'timm:gluon_resnet50_v1s', 'timm:gluon_resnext101_32x4d', 'timm:gluon_resnext101_64x4d', 'timm:gluon_resnext50_32x4d', 'timm:gluon_senet154', 
'timm:gluon_seresnext101_32x4d', 'timm:gluon_seresnext101_64x4d', 'timm:gluon_seresnext50_32x4d', 'timm:gluon_xception65', 'timm:hardcorenas_a', 'timm:hardcorenas_b', 'timm:hardcorenas_c', 'timm:hardcorenas_d', 'timm:hardcorenas_e', 'timm:hardcorenas_f', 'timm:ig_resnext101_32x16d', 'timm:ig_resnext101_32x32d', 'timm:ig_resnext101_32x48d', 'timm:ig_resnext101_32x8d', 'timm:legacy_senet154', 'timm:legacy_seresnet101', 'timm:legacy_seresnet152', 'timm:legacy_seresnet18', 'timm:legacy_seresnet34', 'timm:legacy_seresnet50', 'timm:legacy_seresnext101_32x4d', 'timm:legacy_seresnext26_32x4d', 'timm:legacy_seresnext50_32x4d', 'timm:mobilenetv2_100', 'timm:mobilenetv2_110d', 'timm:mobilenetv2_120d', 'timm:mobilenetv2_140', 'timm:mobilenetv3_large_100', 'timm:mobilenetv3_large_100_miil', 'timm:mobilenetv3_large_100_miil_in21k', 'timm:mobilenetv3_rw', 'timm:nf_regnet_b1', 'timm:nf_resnet50', 'timm:nfnet_l0', 'timm:regnetx_002', 'timm:regnetx_004', 'timm:regnetx_006', 'timm:regnetx_008', 'timm:regnetx_016', 'timm:regnetx_032', 'timm:regnetx_040', 'timm:regnetx_064', 'timm:regnetx_080', 'timm:regnetx_120', 'timm:regnetx_160', 'timm:regnetx_320', 'timm:regnety_002', 'timm:regnety_004', 'timm:regnety_006', 'timm:regnety_008', 'timm:regnety_016', 'timm:regnety_032', 'timm:regnety_040', 'timm:regnety_064', 'timm:regnety_080', 'timm:regnety_120', 'timm:regnety_160', 'timm:regnety_320', 'timm:res2net101_26w_4s', 'timm:res2net50_14w_8s', 'timm:res2net50_26w_4s', 'timm:res2net50_26w_6s', 'timm:res2net50_26w_8s', 'timm:res2net50_48w_2s', 'timm:res2next50', 'timm:resnest101e', 'timm:resnest14d', 'timm:resnest200e', 'timm:resnest269e', 'timm:resnest26d', 'timm:resnest50d', 'timm:resnest50d_1s4x24d', 'timm:resnest50d_4s2x40d', 'timm:resnet101d', 'timm:resnet152d', 'timm:resnet18', 'timm:resnet18d', 'timm:resnet200d', 'timm:resnet26', 'timm:resnet26d', 'timm:resnet34', 'timm:resnet34d', 'timm:resnet50', 'timm:resnet50d', 'timm:resnet51q', 'timm:resnetrs101', 'timm:resnetrs152', 
'timm:resnetrs200', 'timm:resnetrs270', 'timm:resnetrs350', 'timm:resnetrs420', 'timm:resnetrs50', 'timm:resnetv2_101x1_bitm', 'timm:resnetv2_101x1_bitm_in21k', 'timm:resnetv2_101x3_bitm', 'timm:resnetv2_101x3_bitm_in21k', 'timm:resnetv2_152x2_bit_teacher', 'timm:resnetv2_152x2_bit_teacher_384', 'timm:resnetv2_152x2_bitm', 'timm:resnetv2_152x2_bitm_in21k', 'timm:resnetv2_152x4_bitm', 'timm:resnetv2_152x4_bitm_in21k', 'timm:resnetv2_50x1_bit_distilled', 'timm:resnetv2_50x1_bitm', 'timm:resnetv2_50x1_bitm_in21k', 'timm:resnetv2_50x3_bitm', 'timm:resnetv2_50x3_bitm_in21k', 'timm:resnext101_32x8d', 'timm:resnext50_32x4d', 'timm:resnext50d_32x4d', 'timm:seresnet152d', 'timm:seresnet50', 'timm:seresnext26d_32x4d', 'timm:seresnext26t_32x4d', 'timm:seresnext50_32x4d', 'timm:skresnet18', 'timm:skresnet34', 'timm:skresnext50_32x4d', 'timm:ssl_resnet18', 'timm:ssl_resnet50', 'timm:ssl_resnext101_32x16d', 'timm:ssl_resnext101_32x4d', 'timm:ssl_resnext101_32x8d', 'timm:ssl_resnext50_32x4d', 'timm:swsl_resnet18', 'timm:swsl_resnet50', 'timm:swsl_resnext101_32x16d', 'timm:swsl_resnext101_32x4d', 'timm:swsl_resnext101_32x8d', 'timm:swsl_resnext50_32x4d', 'timm:tf_efficientnet_b0', 'timm:tf_efficientnet_b0_ap', 'timm:tf_efficientnet_b0_ns', 'timm:tf_efficientnet_b1', 'timm:tf_efficientnet_b1_ap', 'timm:tf_efficientnet_b1_ns', 'timm:tf_efficientnet_b2', 'timm:tf_efficientnet_b2_ap', 'timm:tf_efficientnet_b2_ns', 'timm:tf_efficientnet_b3', 'timm:tf_efficientnet_b3_ap', 'timm:tf_efficientnet_b3_ns', 'timm:tf_efficientnet_b4', 'timm:tf_efficientnet_b4_ap', 'timm:tf_efficientnet_b4_ns', 'timm:tf_efficientnet_b5', 'timm:tf_efficientnet_b5_ap', 'timm:tf_efficientnet_b5_ns', 'timm:tf_efficientnet_b6', 'timm:tf_efficientnet_b6_ap', 'timm:tf_efficientnet_b6_ns', 'timm:tf_efficientnet_b7', 'timm:tf_efficientnet_b7_ap', 'timm:tf_efficientnet_b7_ns', 'timm:tf_efficientnet_b8', 'timm:tf_efficientnet_b8_ap', 'timm:tf_efficientnet_el', 'timm:tf_efficientnet_em', 'timm:tf_efficientnet_es', 
'timm:tf_efficientnet_l2_ns', 'timm:tf_efficientnet_l2_ns_475', 'timm:tf_efficientnet_lite0', 'timm:tf_efficientnet_lite1', 'timm:tf_efficientnet_lite2', 'timm:tf_efficientnet_lite3', 'timm:tf_efficientnet_lite4', 'timm:tf_efficientnetv2_b0', 'timm:tf_efficientnetv2_b1', 'timm:tf_efficientnetv2_b2', 'timm:tf_efficientnetv2_b3', 'timm:tf_efficientnetv2_l', 'timm:tf_efficientnetv2_l_in21ft1k', 'timm:tf_efficientnetv2_l_in21k', 'timm:tf_efficientnetv2_m', 'timm:tf_efficientnetv2_m_in21ft1k', 'timm:tf_efficientnetv2_m_in21k', 'timm:tf_efficientnetv2_s', 'timm:tf_efficientnetv2_s_in21ft1k', 'timm:tf_efficientnetv2_s_in21k', 'timm:tf_mobilenetv3_large_075', 'timm:tf_mobilenetv3_large_100', 'timm:tf_mobilenetv3_large_minimal_100', 'timm:tf_mobilenetv3_small_075', 'timm:tf_mobilenetv3_small_100', 'timm:tf_mobilenetv3_small_minimal_100', 'timm:tv_densenet121', 'timm:tv_resnet101', 'timm:tv_resnet152', 'timm:tv_resnet34', 'timm:tv_resnet50', 'timm:tv_resnext50_32x4d', 'timm:vgg11', 'timm:vgg11_bn', 'timm:vgg13', 'timm:vgg13_bn', 'timm:vgg16', 'timm:vgg16_bn', 'timm:vgg19', 'timm:vgg19_bn', 'timm:wide_resnet101_2', 'timm:wide_resnet50_2', 'timm:xception', 'timm:xception41', 'timm:xception65', 'timm:xception71']

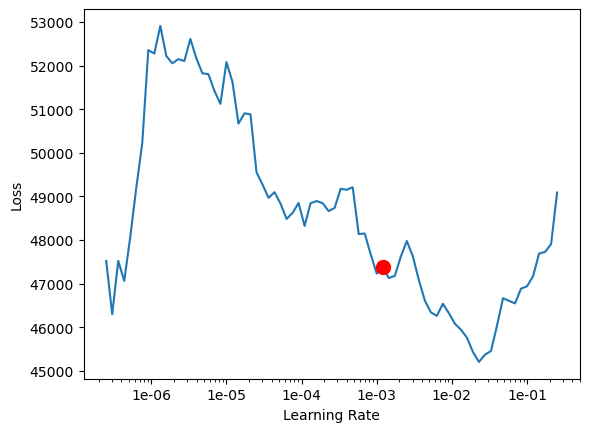

Tuning for optimal learning rate

Optimization in deep learning is largely a matter of tuning hyperparameters. In this step, we find an optimum learning rate for our model on the training data. The learning rate is a very important parameter: during training, the model sees the training data several times and adjusts itself (the weights of the network) each time. Too high a learning rate leads the model to converge to a suboptimal solution, while too low a learning rate slows down convergence. We can use the lr_find() method to find an optimum learning rate at which we can train a robust model fast enough.
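The effect of the learning rate can be seen on a toy problem. The sketch below runs plain gradient descent on f(w) = w², which has its minimum at w = 0; it is unrelated to how lr_find() works internally, and the function name `descend` and the three rates are chosen only for illustration. In this simple case a rate that is too high overshoots and moves away from the minimum on every step, while a rate that is too low barely moves at all.

```python
def descend(lr, steps=20, w=1.0):
    """Run plain gradient descent on f(w) = w**2 and return the final |w|."""
    for _ in range(steps):
        grad = 2 * w       # derivative of w**2
        w = w - lr * grad  # gradient descent update
    return abs(w)


for lr in (0.001, 0.3, 1.1):
    print(f"lr={lr}: |w| after 20 steps = {descend(lr):.3g}")
```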

# Find Learning Rate

lr = model.lr_find()

lr

0.001202264434617413

Fit the model on the data

To start, let us train the model for 30 epochs. One epoch means the model sees the complete training set once. If the results are not satisfactory, we can train it further for more epochs.

model.fit(epochs=30, lr=lr)

| epoch | train_loss | valid_loss | accuracy | f1_score | time |
|---|---|---|---|---|---|
| 0 | 42582.898438 | 39175.781250 | 0.737893 | 0.325295 | 01:55 |
| 1 | 40988.656250 | 36991.253906 | 0.807020 | 0.444670 | 01:52 |
| 2 | 38073.496094 | 34984.914062 | 0.707041 | 0.507575 | 01:51 |
| 3 | 37566.015625 | 34432.902344 | 0.757523 | 0.530217 | 01:52 |
| 4 | 37549.621094 | 33412.097656 | 0.722863 | 0.505099 | 01:53 |
| 5 | 37260.011719 | 32929.218750 | 0.742991 | 0.536895 | 01:52 |
| 6 | 38006.035156 | 33357.605469 | 0.720637 | 0.498926 | 01:55 |
| 7 | 36251.632812 | 31551.496094 | 0.789859 | 0.587750 | 01:53 |
| 8 | 37037.957031 | 31863.101562 | 0.786554 | 0.564862 | 01:52 |
| 9 | 37550.136719 | 30913.142578 | 0.758236 | 0.574408 | 01:53 |
| 10 | 34813.042969 | 32555.244141 | 0.771918 | 0.527013 | 01:53 |
| 11 | 35804.304688 | 31214.777344 | 0.733484 | 0.560567 | 01:54 |
| 12 | 33891.167969 | 30856.605469 | 0.796720 | 0.610350 | 01:55 |
| 13 | 35041.277344 | 30574.927734 | 0.771101 | 0.587610 | 01:53 |
| 14 | 34160.617188 | 30067.345703 | 0.811708 | 0.629174 | 01:53 |
| 15 | 32882.257812 | 29898.994141 | 0.796391 | 0.625271 | 01:54 |
| 16 | 34587.222656 | 29049.455078 | 0.771799 | 0.637549 | 01:54 |
| 17 | 31905.548828 | 29378.988281 | 0.794254 | 0.628917 | 01:54 |
| 18 | 32022.181641 | 28899.548828 | 0.814050 | 0.670002 | 01:53 |
| 19 | 33576.652344 | 28515.111328 | 0.820063 | 0.666266 | 01:54 |
| 20 | 33795.011719 | 28904.208984 | 0.825525 | 0.656705 | 01:53 |
| 21 | 33306.214844 | 27875.320312 | 0.800145 | 0.665329 | 01:53 |
| 22 | 32705.798828 | 27732.238281 | 0.800725 | 0.660144 | 01:55 |
| 23 | 32131.857422 | 27262.103516 | 0.814208 | 0.686642 | 01:53 |
| 24 | 31662.511719 | 27413.988281 | 0.811471 | 0.679683 | 01:54 |
| 25 | 32780.769531 | 27053.285156 | 0.808599 | 0.680979 | 01:55 |
| 26 | 33761.828125 | 27310.287109 | 0.817889 | 0.680208 | 01:53 |
| 27 | 32652.843750 | 27059.832031 | 0.816694 | 0.687203 | 01:54 |
| 28 | 32235.031250 | 27053.460938 | 0.818225 | 0.687486 | 01:54 |
| 29 | 32614.832031 | 27066.529297 | 0.817210 | 0.685988 | 01:55 |
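One way to read a training log like this is to look for the epoch with the lowest validation loss or the highest F1 score. The sketch below does that for the last five rows of the table above, copied by hand into a list; the variable names are illustrative and nothing here calls the arcgis.learn API.

```python
# (epoch, valid_loss, f1_score) copied from the last five rows of the table.
log = [
    (25, 27053.285156, 0.680979),
    (26, 27310.287109, 0.680208),
    (27, 27059.832031, 0.687203),
    (28, 27053.460938, 0.687486),
    (29, 27066.529297, 0.685988),
]

best_by_loss = min(log, key=lambda row: row[1])  # lowest validation loss
best_by_f1 = max(log, key=lambda row: row[2])    # highest F1 score

print(f"lowest valid_loss at epoch {best_by_loss[0]}: {best_by_loss[1]}")
print(f"highest f1_score at epoch {best_by_f1[0]}: {best_by_f1[2]}")
```

Both metrics change very little over these final epochs, which suggests that training has largely levelled off at this learning rate.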

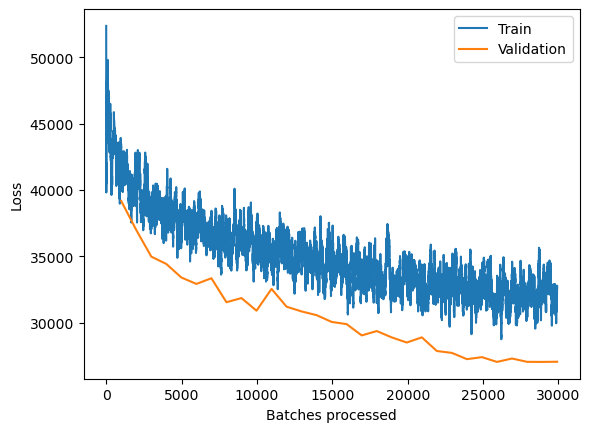

Plot losses

model.plot_losses()

Visualize results

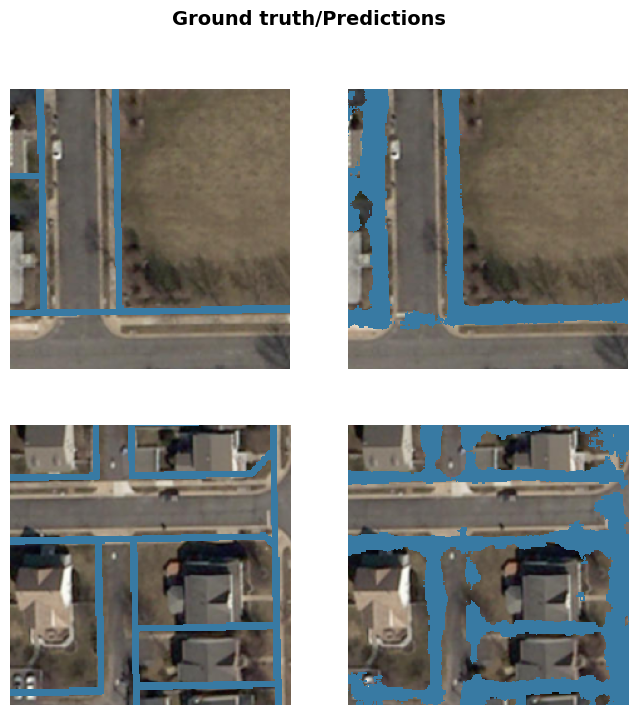

The code below picks a few random samples and shows the ground truth and the respective model predictions side by side. This allows us to preview the results of the model in the notebook itself; once satisfied, we can save the model and use it further in our workflow.

We have a few parameters for visualization.

- alpha: controls the opacity of predicted edges. Set to 1.

- thinning: a post-processing parameter that thins or skeletonizes the predicted edges. We will be using our own post-processing workflow built in ArcGIS Pro, hence we set it to False. Depending on the results, this can also be set to True or False during inferencing in ArcGIS Pro.

model.show_results(alpha=1,thinning=False)

Save the model

We now save the model we just trained as a Deep Learning Package (.dlpk format). A Deep Learning Package is the standard format used to deploy deep learning models on the ArcGIS platform. For this sample, we will use this model in ArcGIS Pro to extract land parcels.

We use the save() method to save the model; by default, it is saved to a 'models' folder inside our training data folder.

model.save("edge_model_e30")

Computing model metrics...

PosixPath('/tmp/data_for_edge_detection_model/models/edge_model_e30')

We can observe some room for further improvement, so we trained the model for a further 70 epochs. The deployment and results from the model trained for 100 epochs are shown in the upcoming section.

Part 2 - Deploying model, and extraction of parcels in imagery at scale

The deep learning package saved in the previous step can be used to generate a classified raster using the Classify Pixels Using Deep Learning tool. The classified raster is then regularised and finally converted to a vector polygon layer. The post-processing steps use advanced ArcGIS geoprocessing tools to remove unwanted artifacts in the output.

As the model has been trained on a limited amount of data, it is expected to only work well in nearby areas and similar geographies.

Generate a classified raster using the Classify Pixels Using Deep Learning tool

We will use the saved model to classify pixels using the Classify Pixels Using Deep Learning tool, available in ArcGIS Pro as well as ArcGIS Image Server. For this sample, we will use world imagery to delineate boundaries.

The raster used for testing the model is provided below.

test_area_raster = ent_gis.content.get('ed2951a19296485d9334460f343c5a0c')

test_area_raster

out_classified_raster = arcpy.ia.ClassifyPixelsUsingDeepLearning("Imagery", r"C:\Esri_project\edge_detection_ready_to_use_model\models\edge_model_e30\edge_model_e100.emd", "padding 56;batch_size 4;thinning False")

out_classified_raster.save(r"C:\sample\sample.gdb\edge_detected")
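The third argument in the call above packs the model arguments into a single string of `name value` pairs separated by semicolons. When experimenting with different values, it can be convenient to build that string from a dictionary. The two helpers below are hypothetical conveniences written in plain Python; they are not part of arcpy.

```python
def format_model_arguments(arguments):
    """Join name/value pairs into a 'name value;name value' string."""
    return ";".join(f"{name} {value}" for name, value in arguments.items())


def parse_model_arguments(text):
    """Split a 'name value;name value' string back into a dict of strings."""
    return dict(pair.split(" ", 1) for pair in text.split(";") if pair)


# Values taken from the tool call in this sample.
arguments = {"padding": 56, "batch_size": 4, "thinning": False}
argument_string = format_model_arguments(arguments)

print(argument_string)
print(parse_model_arguments(argument_string))
```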

The output of this tool is a classified raster containing both background and edges. For better visualization, the predicted raster is provided as a web map: https://pythonapi.playground.esri.com/portal/home/webmap/viewer.html?webmap=35560c83171f4742847cdeffb813bad9&extent=-77.5235,39.0508,-77.4729,39.0712

A subset of predictions by trained model

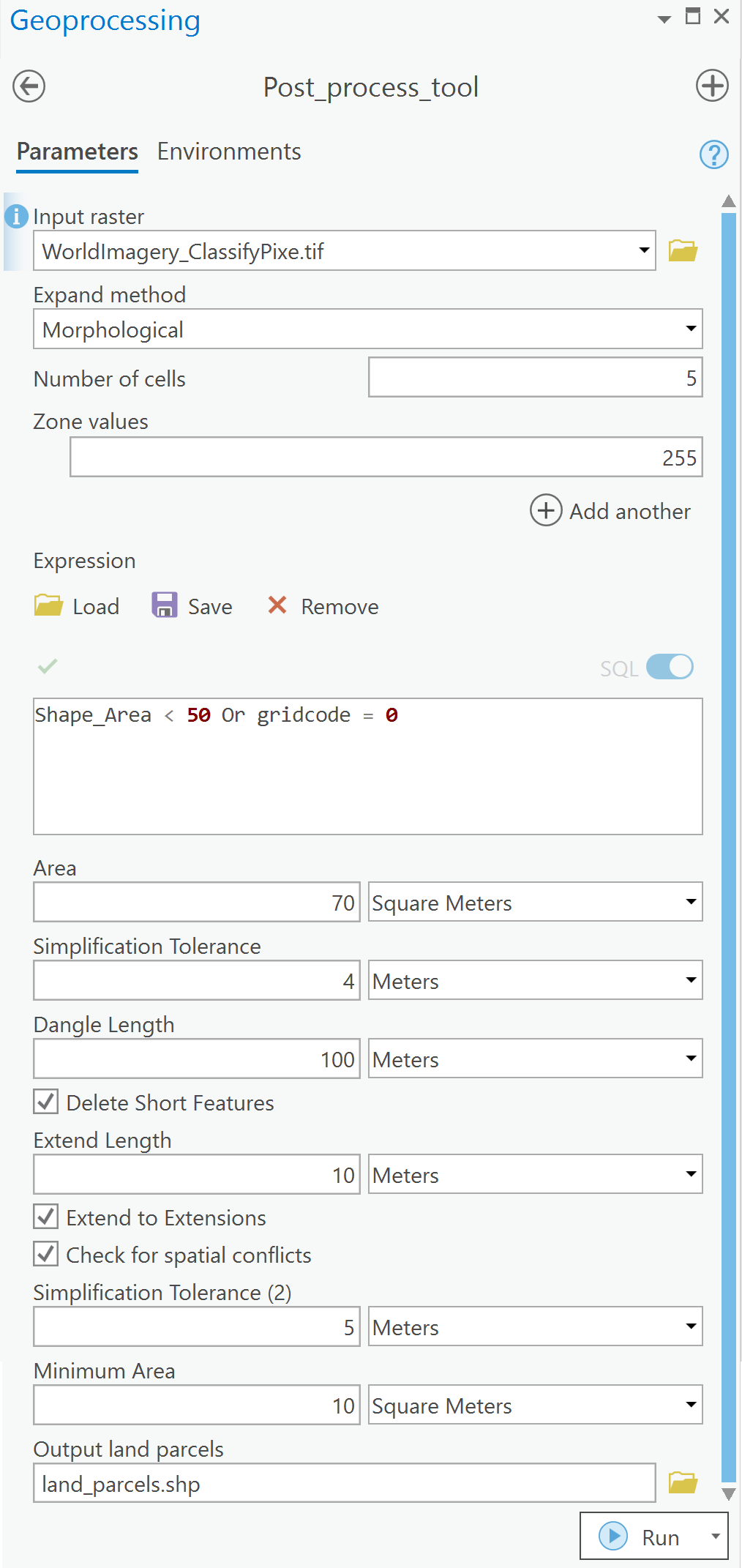

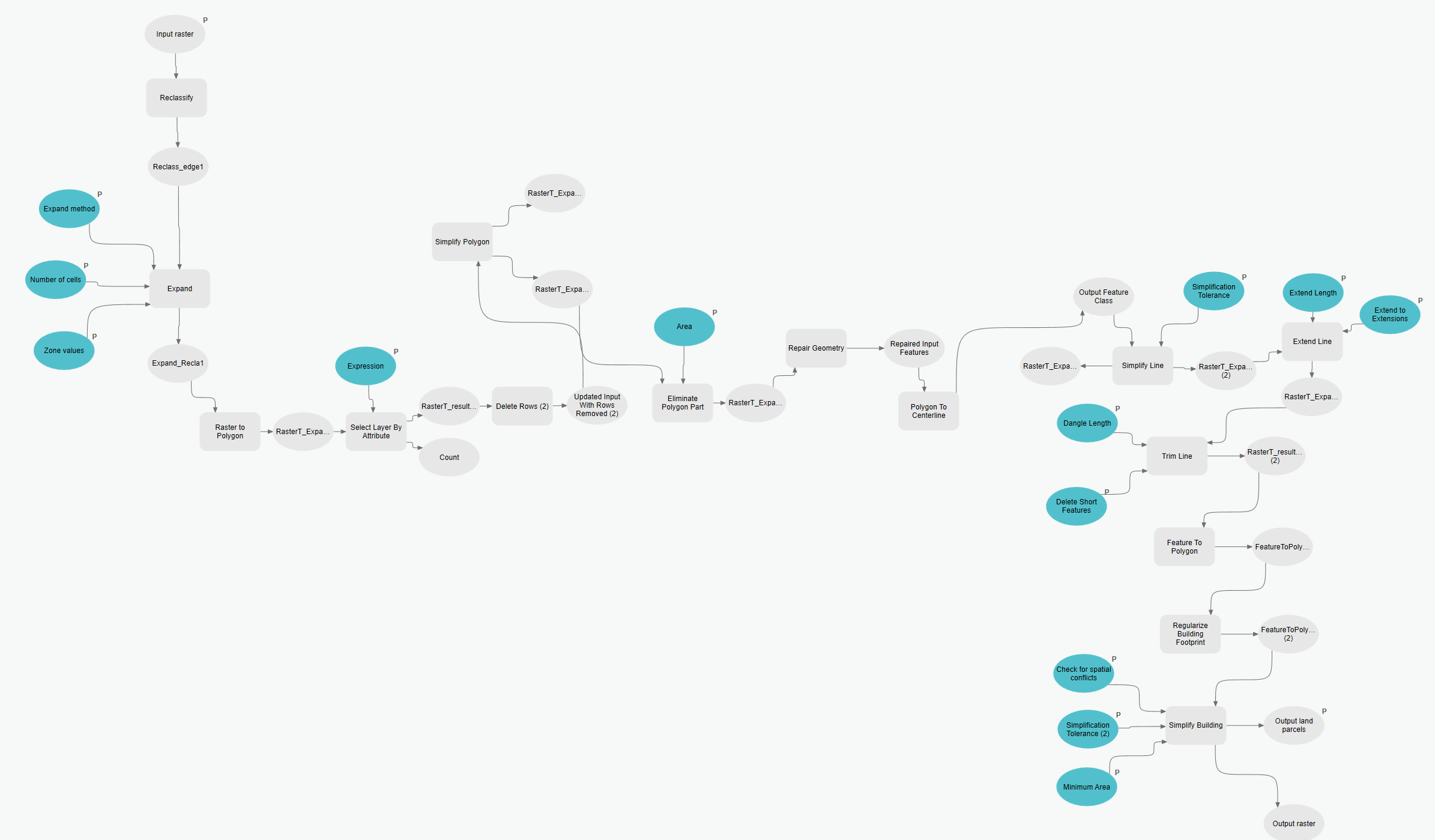

Post-processing workflow using ModelBuilder

The post-processing workflow below involves geoprocessing tools whose parameters need to be experimented with to get optimum results. We use ModelBuilder to build this workflow, which lets us change the parameters iteratively. The input raster should be a predicted, reclassified raster with pixel values in the 0-255 range.

Tools used and important parameters:

- Input raster: Predicted reclassified raster with pixel values in the 0-255 range, where 0 is background and 255 is edge.

- Expand: Expands specified zones of a raster by a specified number of cells. The default value for the number of cells is set to 3.

- Raster to polygon: Vectorizes the raster to polygons based on cell values. We keep the 'Simplify Polygons' and 'Create Multipart Features' options unchecked.

- Select by attribute: Adds, updates, or removes a selection based on an attribute query. We use it to filter out polygons with a small area.

- Simplify polygon: Simplifies polygon outlines by removing relatively extraneous vertices while preserving essential shape. Simplification tolerance was set to 0.1.

- Eliminate polygon parts: Creates a new output feature class containing the features from the input polygons with some parts or holes of a specified size deleted. 'Condition' was set to 'area'.

- Polygon to centerline: Creates centerlines from polygon features.

- Simplify line: Simplifies lines by removing relatively extraneous vertices while preserving essential shape. Simplification tolerance was set to 4.

- Trim line: Removes portions of a line that extend a specified distance past a line intersection (dangles). Dangle length was set to 100, with short features deleted.

- Feature to polygon: Creates a feature class containing polygons generated from areas enclosed by input line or polygon features.

- Regularize building footprints: Produces the final finished results by shaping them to how actual buildings look. The 'Method' for regularisation is 'Right Angles and Diagonal', and the parameters 'tolerance', 'Densification' and 'Precision' are '1.5', '1', '0.25' and '2' respectively.

- Simplify building: Simplifies the boundary or footprint of building polygons while maintaining their essential shape and size.
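To illustrate what the Expand step does to the edge raster, the sketch below grows every edge cell (value 255) outward by a given number of cells on a tiny grid. It is a simplified stand-in written in plain Python: it assumes a square neighbourhood and a single zone, and the geoprocessing tool's actual algorithm may differ. The function name `expand_zone` and the 5 x 5 grid are hypothetical.

```python
def expand_zone(grid, zone=255, cells=1):
    """Return a copy of grid in which each zone cell is grown by `cells` in all directions."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]  # copy so the input stays unchanged
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != zone:
                continue
            # Paint the square window around this zone cell, clipped to the grid.
            for rr in range(max(0, r - cells), min(rows, r + cells + 1)):
                for cc in range(max(0, c - cells), min(cols, c + cells + 1)):
                    out[rr][cc] = zone
    return out


# Hypothetical 5 x 5 raster with a single edge cell in the centre.
raster = [[0] * 5 for _ in range(5)]
raster[2][2] = 255

expanded = expand_zone(raster, zone=255, cells=1)
for row in expanded:
    print(row)
```

Growing the edges in this way helps close small gaps in predicted boundaries before the raster is vectorized.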

Attached below is the toolbox:

gis.content.get('9c8939622d954528916e0d6ffda4065d')

Post-processing workflow in ModelBuilder

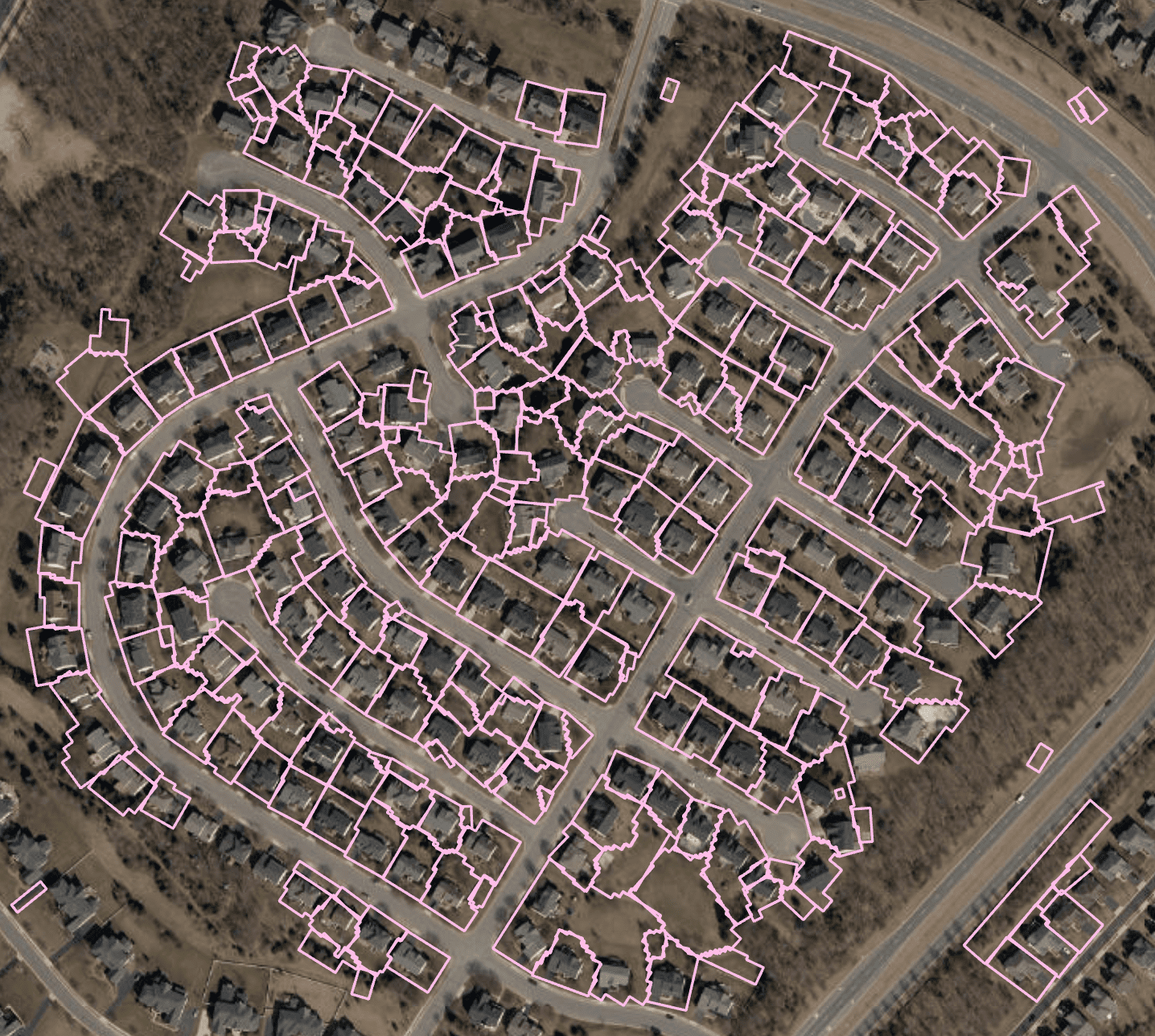

Final output

The final output is a feature class. A web map is provided for better visualization of the extracted parcels: https://arcg.is/1u4LSX0.

gis.content.get('f79515de95254eda83a0d3bc331bdcb7')

A few subsets of results overlaid on the imagery

References

[1] He, Jianzhong, Shiliang Zhang, Ming Yang, Yanhu Shan, and Tiejun Huang. "Bi-directional cascade network for perceptual edge detection." 2019. arXiv:1902.10903v1, https://arxiv.org/abs/1902.10903.