Augmented reality (AR) experiences can be implemented with three common patterns: tabletop, flyover, and world-scale.

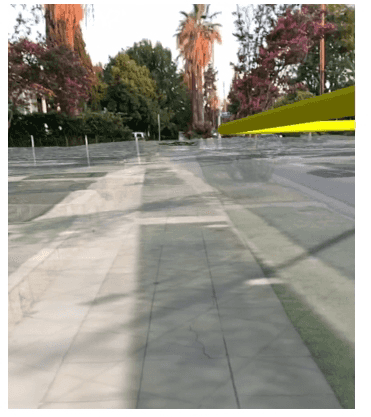

- Flyover – With flyover AR you can explore a scene using your device as a window into the virtual world. A typical flyover AR scenario starts with the scene’s virtual camera positioned over an area of interest. You can walk around and reorient the device to focus on specific content in the scene.

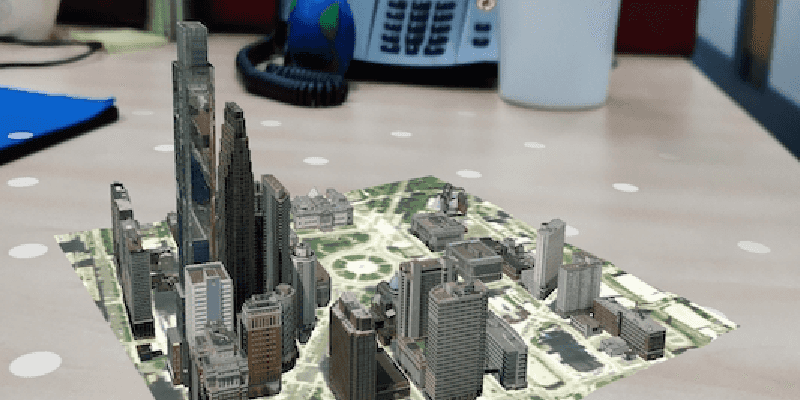

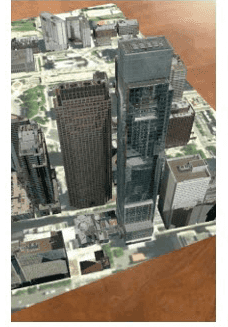

- Tabletop – Tabletop AR provides scene content anchored to a physical surface, as if it were a 3D-printed model. You can walk around the tabletop and view the scene from different angles.

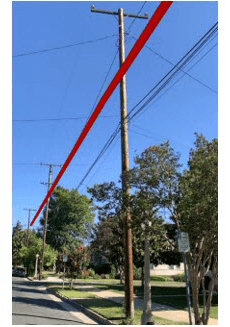

- World-scale – A kind of AR scenario where scene content is rendered exactly where it would be in the physical world. This is used in scenarios ranging from viewing hidden infrastructure to displaying waypoints for navigation. In AR, the real world, rather than a basemap, provides the context for your GIS data.

| Flyover | Tabletop | World-scale |
|---|---|---|
| On screen, flyover is visually indistinguishable from normal scene rendering. | In tabletop, scene content is anchored to a real-world surface. | In world-scale AR, scene content is integrated with the real world. |

Support for augmented reality is provided through components available in the ArcGIS Maps SDK for Qt Toolkit.

Enable your app for AR

- Install the Toolkit. See the Toolkit repo on GitHub for the latest installation instructions.

- Add an AR view to your app.

- Configure privacy and permissions.

- Now you're ready to add tabletop AR, add flyover AR, or add world-scale AR to your app.

Add an AR view to your app

ArcGISArView uses an underlying ARKit or ARCore view and a SceneView.

Use the following methods on ArcGISArView to configure AR:

- translationFactor – controls the relationship between physical device position changes and changes in the position of the scene view's camera. This is useful for tabletop and flyover AR.

- originCamera – controls the initial position of the scene view's camera. When position tracking is started, ArcGISArView transforms the scene view camera's position using a transformation matrix provided by ARKit or ARCore. Once the origin camera is set, the manipulation of the scene view's camera is handled automatically.

- setInitialTransformation – takes a point on the screen, finds the surface represented by that point, and applies a transformation such that the origin camera is pinned to the location represented by that point. This is useful for pinning content to a surface, which is needed for tabletop AR.

In addition to the toolkit, you'll need to use the following features provided by the underlying scene view when creating AR experiences:

- Scene view space effect control — Disable rendering the 'starry sky' effect to display scene content on top of a camera feed.

- Scene view atmosphere effect control — Disable rendering the atmosphere effect to avoid obscuring rendered content.

- Surface transparency — Hide the ground when rendering world-scale AR because the camera feed, not the basemap, is providing context for your GIS content. You can use a semitransparent surface to calibrate your position in world-scale AR.

- Scene view navigation constraint — By default, scene views constrain the camera to being above the ground. You should disable this feature to enable users to use world-scale AR underground (for example, while in a basement). The navigation constraint will interfere with tabletop AR if the user attempts to look at the scene from below.

To use ArcGISArView, first add it to the view (typically the .qml file that defines the GUI components for your app), then configure the lifecycle methods to start and stop tracking as needed.

    // Item, Rectangle, and Text come from QtQuick.
    import QtQuick 2.12
    import Esri.ArcGISArToolkit 1.0

    // SceneView and ARSample are C++ types; also import the module
    // your app registers them under.
    Item {
        ArcGISArView {
            id: arcGISArView
            anchors.fill: parent
            sceneView: view
            tracking: true
        }

        SceneView {
            id: view
            anchors.fill: parent

            Component.onCompleted: {
                // Set and keep the focus on SceneView to enable keyboard navigation
                forceActiveFocus();
            }
        }

        // Prompt shown until the user places the tabletop scene
        Rectangle {
            anchors {
                bottom: view.attributionTop
                horizontalCenter: parent.horizontalCenter
                margins: 5
            }
            width: childrenRect.width
            height: childrenRect.height
            color: "#88ffffff" // semitransparent white
            radius: 5
            visible: model.dialogVisible

            Text {
                anchors.centerIn: parent
                font.bold: true
                text: qsTr("Touch screen to place the tabletop scene...")
            }
        }

        // Declare the C++ instance which creates the scene etc. and supply the view
        ARSample {
            id: model
            arcGISArView: arcGISArView
            sceneView: view
        }
    }

Configure privacy and permissions

Before you can use augmented reality, you'll need to request location and camera permissions.

On iOS, ensure the following properties are set in Info.plist:

- Privacy - Camera Usage Description

- Privacy - Location When In Use Usage Description

The deployment target should be set to a supported version of iOS (see System requirements for details).

If you’d like to restrict your app to installing only on devices that support ARKit, add arkit to the required device capabilities section of Info.plist:

    <key>UIRequiredDeviceCapabilities</key>
    <array>
        <string>arkit</string>
    </array>

On Android, you'll need to request camera and location permissions before using ARCore. Ensure that the following permissions are specified in AndroidManifest.xml:

    <!-- Location service is used for full-scale AR where the current device location is required -->
    <uses-permission android:name="android.permission.ACCESS_FINE_LOCATION" />

    <!-- Both "AR Optional" and "AR Required" apps require CAMERA permission. -->
    <uses-permission android:name="android.permission.CAMERA" />

When starting the AR experience, ensure that the user has granted permissions. See Qt documentation for details.

Note that the device must support ARCore for ArcGISArView to work. Google maintains a list of supported devices. ARCore is a separate installable component delivered via Google Play.

Add the following to the application definition in AndroidManifest.xml to ensure ARCore is installed with your app. You can specify optional or required depending on whether your app should work when ARCore is not present. The toolkit defines this metadata as optional automatically. When requiring ARCore, you’ll need to add a tools:replace attribute (for example, tools:replace="android:value") to the metadata declaration because the Toolkit has already specified a value.

    <application ...>
        <!-- Indicates that app requires ARCore ("AR Required"). Causes Google
             Play Store to download and install ARCore along with the app.
             For an "AR Optional" app, specify "optional" instead of "required" -->
        <meta-data android:name="com.google.ar.core" android:value="required" />
    </application>

The following declaration (outside of the application element) will ensure that the app only displays in the Play Store if the device supports ARCore:

    <!-- Indicates that app requires ARCore ("AR Required"). Ensures app is
         only visible in the Google Play Store on devices that support ARCore.
         For "AR Optional" apps remove this line. -->
    <uses-feature android:name="android.hardware.camera.ar" android:required="true" />

Once you have installed the toolkit, configured your app to meet privacy requirements, requested location permissions, and added an ArcGISArView to your app, you can begin implementing your AR experience.

Understand common AR patterns

There are many AR scenarios you can achieve. This SDK recognizes the following common patterns for AR:

- Flyover – Flyover AR is a kind of AR scenario that allows you to explore a scene using your device as a window into the virtual world. A typical flyover AR scenario will start with the scene’s virtual camera positioned over an area of interest. You can walk around and reorient the device to focus on specific content in the scene.

- Tabletop – A kind of AR scenario where scene content is anchored to a physical surface, as if it were a 3D-printed model. You can walk around the tabletop and view the scene from different angles.

- World-scale – A kind of AR scenario where scene content is rendered exactly where it would be in the physical world. This is used in scenarios ranging from viewing hidden infrastructure to displaying waypoints for navigation. In AR, the real world, rather than a basemap, provides the context for your GIS data.

Each experience is built using a combination of the features, the toolkit, and some basic behavioral assumptions. For example:

| AR pattern | Origin camera | Translation factor | Scene view | Base surface |
|---|---|---|---|---|
| Flyover AR | Above the tallest content in the scene | A large value to enable rapid traversal; 0 to restrict movement | Space effect: Stars; Atmosphere: Realistic | Displayed |
| Tabletop AR | On the ground at the center or lowest point on the scene | Based on the size of the target content and the physical table | Space effect: Transparent; Atmosphere: None | Optional |
| World-scale AR | At the same location as the physical device camera | 1, to keep virtual content in sync with real-world environment | Space effect: Transparent; Atmosphere: None | Optional for calibration |

Add tabletop AR to your app

Tabletop AR allows you to use your device to interact with scenes as if they were 3D-printed models sitting on your desk. You could, for example, use tabletop AR to virtually explore a proposed development without needing to create a physical model.

Implement tabletop AR

Tabletop AR often allows users to place scene content on a physical surface of their choice, such as the top of a desk. Once the content is placed, it stays anchored to the surface as the user moves around it.

- Create an ArcGISArView and add it to the view (typically the .qml file that defines the GUI components for your app).

- Now switch over to your app's .cpp file to make edits. Once the user has tapped a point, call setInitialTransformation. The toolkit will use the native platform’s plane detection to position the virtual camera relative to the plane. If the result is true, the transformation has been set successfully and you can place the scene.

      // Set the initial transformation using the point clicked on the screen.
      const QPoint screenPoint = event.position().toPoint();
      m_arcGISArView->setInitialTransformation(screenPoint);

- Create and display the scene. For demonstration purposes, this code uses the Philadelphia mobile scene package because it is particularly well-suited for tabletop display. You can download that .mspk and add it to your project to make the code below work. Alternatively, you can use any scene for tabletop mapping, but be sure to define a clipping distance for a proper tabletop experience.

      // Create the scene package and connect to its signals.
      void AR::createScenePackage(const QString& path)
      {
        // Create the mobile scene package from the path provided.
        m_scenePackage = new MobileScenePackage(path, this);

        // Handle the mobile scene package's doneLoading signal.
        connect(m_scenePackage, &MobileScenePackage::doneLoading, this, &AR::packageLoaded);

        // Load the mobile scene package.
        m_scenePackage->load();
      }

      // Slot for handling when the package loads.
      void AR::packageLoaded(const Error& e)
      {
        // If there is an error, log the details and stop.
        if (!e.isEmpty())
        {
          qDebug() << QString("Package load error: %1 %2").arg(e.message(), e.additionalMessage());
          return;
        }

        // Stop if the mobile scene package contains no scenes.
        if (m_scenePackage->scenes().isEmpty())
          return;

        // Get the first scene in the mobile scene package.
        m_scene = m_scenePackage->scenes().at(0);

        // Create a camera at the bottom and center of the scene.
        // This camera is the point at which the scene is pinned to the real-world surface.
        m_originCamera = Camera(39.95787000283599, -75.16996728256345, 8.813445091247559, 0, 90, 0);
      }

- Find an anchor point in the scene. You can use a known value, a user-selected value, or a computed value. For simplicity, this example uses a known value. Place the origin camera at that point. Set the navigation constraint on the scene’s base surface to None.

      // Set the origin camera.
      m_arcGISArView->setOriginCamera(m_originCamera);

      // Enable subsurface navigation. This allows you to look at the scene from below.
      m_sceneView->arcGISScene()->baseSurface()->setNavigationConstraint(NavigationConstraint::None);

- Set the translation factor on the ArcGIS AR view so that the whole scene can be viewed by moving around it. A useful formula for determining this value is translation factor = virtual content width / desired physical content width. The desired physical content width is the size of the physical table, while the virtual content width is the real-world size of the scene content; both measurements should be in meters. You can set the virtual content width by setting a clipping distance.

      // Set the translation factor based on the scene content width and desired physical size.
      m_arcGISArView->setTranslationFactor(m_sceneWidth / m_tableTopWidth);
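As a worked illustration of the translation factor formula, here is a minimal sketch in plain C++. The helper name and the sample widths are hypothetical and not part of the toolkit; in an app, the result is the value you would pass to setTranslationFactor.

```cpp
#include <cassert>

// Hypothetical helper (not a toolkit API): computes the translation factor as
// virtual content width / desired physical content width, both in meters.
double translationFactorFor(double virtualWidthMeters, double physicalWidthMeters)
{
  // A zero or negative physical width has no meaningful ratio.
  assert(physicalWidthMeters > 0.0);
  return virtualWidthMeters / physicalWidthMeters;
}
```

For example, an 800-meter-wide scene displayed on a 0.5-meter-wide table gives translationFactorFor(800.0, 0.5), which is 1600.0. Assuming the factor scales movement linearly, walking 1 meter around the table would move the scene camera roughly 1,600 meters.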

Add flyover AR to your app

Flyover AR displays a scene while using the movement of the physical device to move the scene view camera. For example, you can walk around while holding up your device as a window into the scene. Unlike other AR experiences, the camera feed is not displayed to the user, making flyover more similar to a traditional virtual reality (VR) experience.

Flyover is the simplest AR scenario to implement, as there is only a loose relationship between the physical world and the rendered virtual world. With flyover, you can imagine your device as a window into the virtual scene.

Implement flyover AR

- Create the AR view and add it to the UI (typically the .qml file that defines the GUI components for your app).

- Now switch over to your app's .cpp file to make edits. Create the scene, add any content, then display it. This example uses an integrated mesh layer.

      ExploreScenesInFlyoverAR::ExploreScenesInFlyoverAR(QObject* parent /* = nullptr */) :
        QObject{parent},
        m_scene(new Scene(BasemapStyle::ArcGISImagery, this))
      {
        // Define the URL to an ArcGIS tiled image service.
        const QUrl arcGISTiledImageServiceUrl = QUrl("https://elevation3d.arcgis.com/arcgis/rest/services/WorldElevation3D/Terrain3D/ImageServer");

        // Create a new ArcGIS tiled elevation source from the URL.
        ArcGISTiledElevationSource* arcGISTiledElevationSource = new ArcGISTiledElevationSource(arcGISTiledImageServiceUrl, this);

        // Get the base surface from the scene.
        Surface* baseSurface = m_scene->baseSurface();

        // Get the elevation source list model from the base surface.
        ElevationSourceListModel* elevationSourceListModel = baseSurface->elevationSources();

        // Add the ArcGIS tiled elevation source to the elevation source list model.
        elevationSourceListModel->append(arcGISTiledElevationSource);

        // Hide the base surface until the mesh layer has loaded.
        baseSurface->setOpacity(0.0f);

        // Define the URL to an integrated mesh layer.
        const QUrl meshLyrUrl("https://tiles.arcgis.com/tiles/u0sSNqDXr7puKJrF/arcgis/rest/services/Frankfurt2017_v17/SceneServer/layers/0");

        // Create a new integrated mesh layer from the URL.
        m_integratedMeshLayer = new IntegratedMeshLayer(meshLyrUrl, this);

        // Get the layer list model from the scene's operational layers.
        LayerListModel* layerListModel = m_scene->operationalLayers();

        // Add the integrated mesh layer to the layer list model.
        layerListModel->append(m_integratedMeshLayer);

- Place the origin camera above the content you want the user to explore, ideally in the center. Typically, you’ll want to place the origin camera above the highest point in your scene. Constrain navigation to stay above the scene's base surface.

        // Continued from above ...

        // Connect to the integrated mesh layer's doneLoading signal.
        connect(m_integratedMeshLayer, &IntegratedMeshLayer::doneLoading, this, [this](const Error& e)
        {
          // If there is an error loading the layer, log the details and stop.
          if (!e.isEmpty())
          {
            qDebug() << e.errorType() << e.message() << " - " << e.additionalMessage();
            return;
          }

          // Constrain navigation to stay above the base surface, and show the surface.
          m_scene->baseSurface()->setNavigationConstraint(NavigationConstraint::StayAbove);
          m_scene->baseSurface()->setOpacity(1.0f);

          // Get the point for the center of the full extent of the integrated mesh layer.
          const Point centerPoint = m_integratedMeshLayer->fullExtent().center();

          // Start with the camera above the center of the mesh layer.
          m_originCamera = Camera(centerPoint.y(), centerPoint.x(), 250, 0, 90, 0);
          m_arcGISArView->setOriginCamera(m_originCamera);

- Set the translation factor to allow rapid traversal of the scene. The translation factor defines the relationship between physical device movement and virtual camera movement. To create a more immersive experience, set the space effect on the scene view to Stars and the atmosphere effect to Realistic.

          // Continued from above ...

          // Set the translation factor to enable rapid movement through the scene.
          m_arcGISArView->setTranslationFactor(1000);

          // Enable atmosphere and space effects for a more immersive experience.
          m_sceneView->setSpaceEffect(SpaceEffect::Stars);
          m_sceneView->setAtmosphereEffect(AtmosphereEffect::Realistic);

          // Emit the arcGISArViewChanged signal.
          emit arcGISArViewChanged();

          // Emit the sceneViewChanged signal.
          emit sceneViewChanged();
        });
      }

Add world-scale AR to your app

A world-scale AR experience is defined by the following characteristics:

- The scene camera is positioned to precisely match the position and orientation of the device’s physical camera.

- Scene content is placed in the context of the real world by matching the scene view’s virtual camera position and orientation to that of the physical device camera.

- Context aids, like the basemap, are hidden; the camera feed provides real-world context.

Some example use cases of world-scale AR include:

- Visualizing hidden infrastructure, like sewers, water mains, and telecom conduits.

- Maintaining context while performing rapid data collection for a survey.

- Visualizing a route line while navigating.

Configure content for world-scale AR

The goal of a world-scale AR experience is to create the illusion that your GIS content is physically present in the world around you. There are several requirements for content that will be used for world-scale AR that go beyond what is typically required for 2D mapping.

- Ensure that all data has an accurate elevation (or Z) value. For dynamically generated graphics (for example, route results) use an elevation surface to add elevation.

- Use an elevation source in your scene to ensure all content is placed accurately relative to the user.

- Don't use 3D symbology that closely matches the exact shape of the feature it represents. For example, do not use a generic tree model to represent tree features or a fire hydrant to represent fire hydrant features. Generic symbology won’t capture the unique geometry of actual real-world objects and will highlight minor inaccuracies in position.

- Consider how you present content that would otherwise be obscured in the real world, as the parallax effect can make that content appear to move unnaturally. For example, underground pipes will ‘float’ relative to the surface, even though they are at a fixed point underground. Have a plan to educate users, or consider adding visual guides, like lines drawn to link the hidden feature to the obscuring surface (for example, the ground).

- By default, scene content is rendered over a large distance. This can be problematic when you are trying to view a limited subset of nearby features (just the pipes in your building, not for the entire campus, for example). You can use the clipping distance to limit the area over which scene content renders.

Location tracking options for world-scale AR

There are a few strategies for determining the device’s position in the world and maintaining that position over time:

- Use the device’s location data source (for example, GPS) to acquire an initial position and make further position updates using ARKit and ARCore only.

- Use the location data source continuously.

With continuous updates, the origin camera is set every time the location data source provides a new update. With a one-time update, the origin camera is set only once.
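The difference between the two strategies can be sketched in plain C++. The enum and class below are hypothetical illustrations of the behavior described above, not toolkit types: in one-time mode only the first location fix sets the origin camera, while in continuous mode every fix does.

```cpp
// Hypothetical types (not toolkit APIs) that model when a new location fix
// should reset the origin camera.
enum class UpdateMode { OneTime, Continuous };

class OriginUpdatePolicy
{
public:
  explicit OriginUpdatePolicy(UpdateMode mode) : m_mode(mode) {}

  // Call once per location fix. Returns true if this fix should be used
  // to set the origin camera.
  bool onLocationFix()
  {
    if (m_mode == UpdateMode::Continuous)
      return true;          // every fix resets the origin camera

    if (m_originSet)
      return false;         // one-time mode: later fixes are ignored

    m_originSet = true;     // one-time mode: only the first fix is used
    return true;
  }

private:
  UpdateMode m_mode;
  bool m_originSet = false;
};
```

After the first fix in one-time mode, position changes would come only from ARKit or ARCore tracking, which is why error can accumulate as the user moves away from the starting point.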

There are benefits and drawbacks to each approach that you should consider when designing your AR experience:

- One-time update

  - Advantage: ARKit/ARCore tracking is more precise than most location data sources.

  - Advantage: Content stays convincingly pinned to its real-world position, with minimal drifting or jumping.

  - Disadvantage: Error accumulates the further you venture from where you start the experience.

- Continuous update

  - Advantage: Works over a larger area than ARKit or ARCore.

  - Disadvantage: Visualized content will jump as you move through the world and the device’s location is updated (as infrequently as once per second rather than ARKit’s 60 times per second).

  - Disadvantage: Because the origin camera is constantly being reset, you can’t use panning to manually correct position errors.

You don’t need to make a binary choice between approaches for your app. Your app can use continuous updates while the user moves through larger areas, then switch to a primarily ARKit or ARCore-driven experience when you need greater precision.

The choice of location strategy is specified with a call to start on the AR view control. To change the location update mode, stop tracking and then resume tracking with the desired mode.

Implement world-scale AR

- Create an ArcGISArView and add it to the view (typically the .qml file that defines the GUI components for your app).

- Now switch over to your app's .cpp file to make edits. Configure the ArcGISArView with a location data source. The location data source provides location information for the device. The AR scene view uses the location data source to place the virtual scene camera close to the location of the physical device’s camera.

      m_arcGISArView->setLocationDataSource(new Esri::ArcGISRuntime::Toolkit::LocationDataSource(m_arcGISArView));

- Configure the scene for AR by setting the space and atmosphere effects and adding an elevation source, then display it.

      // Define the URL to an ArcGIS tiled image service.
      const QUrl arcGISTiledImageServiceUrl = QUrl("https://elevation3d.arcgis.com/arcgis/rest/services/WorldElevation3D/Terrain3D/ImageServer");

      // Create a new ArcGIS tiled elevation source from the URL.
      ArcGISTiledElevationSource* arcGISTiledElevationSource = new ArcGISTiledElevationSource(arcGISTiledImageServiceUrl, this);

      // Get the base surface from the scene.
      Surface* baseSurface = m_scene->baseSurface();

      // Get the elevation source list model from the base surface.
      ElevationSourceListModel* elevationSourceListModel = baseSurface->elevationSources();

      // Add the ArcGIS tiled elevation source to the elevation source list model.
      elevationSourceListModel->append(arcGISTiledElevationSource);

      // Define a background grid and hide it.
      BackgroundGrid grid;
      grid.setVisible(false);

      // Set the base surface's background grid.
      baseSurface->setBackgroundGrid(grid);

      // Set the base surface's navigation constraint to NavigationConstraint::None.
      baseSurface->setNavigationConstraint(NavigationConstraint::None);

      // Set the scene view's atmosphere effect to AtmosphereEffect::None.
      m_sceneView->setAtmosphereEffect(AtmosphereEffect::None);

      // Set the scene view's space effect to SpaceEffect::Transparent.
      m_sceneView->setSpaceEffect(SpaceEffect::Transparent);

- Start tracking using one of the two strategies described in Location tracking options for world-scale AR: continuous or one-time.

      // Set the ArcGISArView's location tracking mode to LocationTrackingMode::Continuous.
      m_arcGISArView->setLocationTrackingMode(ArEnums::LocationTrackingMode::Continuous);

      // One-time mode
      //m_arcGISArView->setLocationTrackingMode(ArEnums::LocationTrackingMode::Initial);

- Provide a calibration UI to allow your users to correct heading, elevation, and location errors. See Enable calibration for world-scale AR for more details.

Enable calibration for world-scale AR

World-scale AR depends on a close match between the positions and orientations of the device’s physical camera and the scene view’s virtual camera. Any error in the device’s position or orientation will degrade the experience. Consider each of the following key properties as common sources of error:

- Heading – Usually determined using a magnetometer (compass) on the device.

- Elevation/Altitude (Z) – Usually determined using GPS/GNSS or a barometer.

- Position (X,Y) – Usually determined using GPS/GNSS, cell triangulation, or beacons.

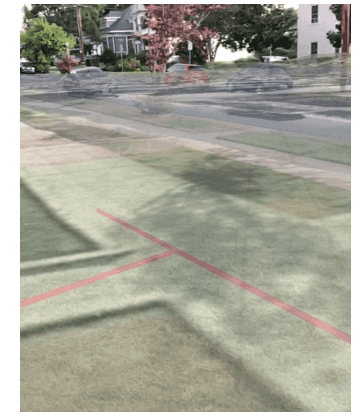

The following examples illustrate these errors by showing a semitransparent basemap for comparison with the ground truth provided by the camera:

| Orientation error | Elevation error | Position error |
|---|---|---|

Design a calibration workflow

There are many ways to calibrate position and heading. In most scenarios, you’ll need to provide one or more points of comparison between scene content and the real-world ground truth. Consider the following options for allowing the user to visually calibrate the position:

- Align the imagery on the basemap with the camera feed.

- Align a known calibration feature with its real-world equivalent (for example, a previously recorded tree feature).

- Define a start point and heading and direct the user.

Consider the following UI options for allowing the user to adjust the calibration:

- Display sliders for orientation and elevation adjustment.

- Use 'joystick' sliders, where the further from the center the slider moves, the faster the adjustment goes.

- Use an image placed in a known position in conjunction with ARCore/ARKit image detection to automatically determine the device's position.

Explicitly plan for calibration when designing your AR experiences. Consider how and where your users will use your app. Not all calibration workflows are appropriate for all locations or use cases.
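The 'joystick' slider option can be sketched as a rate control: the slider position sets the speed of the adjustment rather than its value. The helper below is a hypothetical plain C++ illustration, not a toolkit API; the parameter names and the linear mapping are assumptions.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper (not a toolkit API): converts a joystick slider position
// into the adjustment to apply for one UI update.
//   sliderPosition   -1.0 (full left) to 1.0 (full right), 0.0 when centered
//   maxRatePerSecond adjustment speed at full deflection (for example, degrees per second)
//   elapsedSeconds   time since the previous update
double joystickAdjustment(double sliderPosition, double maxRatePerSecond, double elapsedSeconds)
{
  assert(elapsedSeconds >= 0.0);

  // Clamp so that out-of-range input never exceeds the maximum rate.
  const double clamped = std::max(-1.0, std::min(1.0, sliderPosition));

  // The further the slider is from center, the faster the adjustment.
  return clamped * maxRatePerSecond * elapsedSeconds;
}
```

With a maximum rate of 10 degrees per second, holding the slider halfway to the right for half a second yields joystickAdjustment(0.5, 10.0, 0.5), an adjustment of 2.5 degrees. A centered slider yields 0, so the calibration stops changing as soon as the user lets go.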

Identify real-world and in-scene objects

Scene views have two methods for determining the location in a scene that corresponds to a point on the device's screen:

- screenToBaseSurface – ignores non-surface content, like 3D buildings

- screenToLocation – includes non-surface content

ArcGISArView has an AR-specific screen-to-location method, which:

- Performs a hit test using ARKit/ARCore to find a real-world plane.

- Applies a transformation to determine the physical position of that plane relative to the known position of the device's camera.

- Returns the real-world position of the tapped plane.

You can use this to enable field data collection workflows where users tap to identify real-world objects in the camera feed as detected by ARKit/ARCore. The position of the tapped object will be more accurate than using the device's location, as you might with a typical field data collection process.

Manage vertical space in world-scale AR

Accurate positioning is particularly important to world-scale AR; even small errors can break the perception that the virtual content is anchored in the real world. Unlike 2D mapping, Z values are important. And unlike traditional 3D experiences, you need to know the position of the user’s device.

Be aware of the following common Z-value challenges that you’re likely to encounter while building AR experiences:

- Many kinds of Z values – Devices differ in how they represent altitude/elevation/Z values.

- Imprecise altitude – Altitude/Elevation is the least precise measurement offered by GPS/GNSS. In testing, we found devices reported elevations that were anywhere between 10 and 100 above or below the true value, even under ideal conditions.

Many kinds of Z values

Just as there are many ways to represent position using X and Y values, there are many ways to represent Z values. GPS devices tend to use two primary reference systems for altitude/elevation:

- WGS84 – Height Above Ellipsoid (HAE)

- Orthometric – Height Above Mean Sea Level (MSL)

The full depth of the differences between these two references is beyond the scope of this topic, but do keep in mind the following facts:

- Android devices return elevations in HAE, while iOS devices return altitude in MSL.

- It is not trivial to convert between HAE and MSL; MSL is based on a measurement of the Earth’s gravitational field. There are many models, and you may not know which model was used when generating data.

- Esri’s world elevation service uses orthometric altitudes.

- The difference between MSL and HAE varies by location and can be on the order of tens of meters. For example, at Esri’s HQ in Redlands, California, the MSL altitude is about 30 meters higher than the HAE elevation.

It is important that you understand how your Z values are defined to ensure that data is placed correctly in the scene. For example, the Esri world elevation service uses MSL for its Z values. If you set the origin camera using an HAE Z value, you could be tens of meters off from the desired location.
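The two references are related by the geoid undulation N at a given location: HAE = MSL + N. The following minimal sketch shows that arithmetic in plain C++. The function names are hypothetical and not SDK APIs, and obtaining N requires a geoid model, which is outside the scope of this sketch.

```cpp
// Hypothetical helpers (not SDK APIs) based on the geodetic relationship
// HAE = MSL + N, where N is the geoid undulation at the location.
// All values are in meters.

// Converts a height above ellipsoid (HAE) to a height above mean sea level (MSL).
double haeToMsl(double haeMeters, double geoidUndulationMeters)
{
  return haeMeters - geoidUndulationMeters;
}

// Converts a height above mean sea level (MSL) to a height above ellipsoid (HAE).
double mslToHae(double mslMeters, double geoidUndulationMeters)
{
  return mslMeters + geoidUndulationMeters;
}
```

As an illustration only, assume N is -30 meters, a round number chosen to match the Redlands example above, where MSL is about 30 meters higher than HAE. An HAE of 400 meters then corresponds to haeToMsl(400.0, -30.0), which is 430.0 meters MSL.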

To gain a deeper understanding of these issues, see ArcUser: Mean Sea Level, GPS, and the Geoid.

Visualize planes and features detected by ARCore and ARKit

Some workflows, like tapping to place a tabletop scene or collecting a feature, rely on ARKit/ARCore features that detect planes. Plane visualization is particularly useful for two common scenarios:

- Visualization provides feedback to users, so they know which surfaces the app has detected and can interact with.

- Visualization is useful while developing and testing your app.

ARKit/ARCore can be configured to render planes.

    // Set whether planes should be visible.
    void ExploreScenesInFlyoverAR::showPlanes(bool visible)
    {
      if (visible)
        m_arcGISArView->setPlaneColor(QColor(255, 0, 0, 10));
      else
        m_arcGISArView->setPlaneColor(QColor());
    }